Abstract

Variational quantum algorithms (VQAs) are hybrid algorithms that combine quantum computing capabilities with classical machine learning (ML) tasks, utilizing classical optimizers to train parametrized quantum circuits. This work demonstrates their practical utility by addressing regression tasks in an industrially relevant context: modelling dendritic solidification in metals, a process crucial for determining mechanical properties in additive manufacturing. By comparing a classical surrogate model based on extreme gradient boosting with a hybrid classical-quantum VQA model, this work shows that VQAs can serve as a viable alternative for computationally expensive surrogate modelling. These findings highlight the potential of VQAs to tackle complex physical phenomena in high-cost computational scenarios, advancing their application in real-world industrial ML challenges.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Quantum computing (QC) is a field of research dedicated to developing computer technology based on the principles of quantum mechanics, the theory that describes the physical world as probabilistic rather than deterministic [1].

Variational quantum algorithms (VQAs) are hybrid algorithms that integrate parametrized quantum circuits, known as Variational Quantum Circuits (VQCs), with classical optimizers [2]. These algorithms are particularly promising for quantum machine learning (QML) tasks, including classification and regression [3], and are considered effective for both near-term and long-term quantum hardware [4]. VQCs, with their parametrized structure, can be seen as quantum analogues of neural networks, highlighting their potential for solving complex machine learning (ML) problems. By leveraging an iterative feedback loop, VQAs integrate quantum and classical computations. Quantum computers evaluate the VQCs [3, 5], while classical systems process the results and adjust the circuit parameters to improve performance [6].

Although several theoretical results demonstrate that quantum algorithms can solve complex problems more efficiently than classical alternatives in terms of worst-case time complexity [7], these results assume the availability of quantum computers with exceptionally low logical error rates. Despite advancements in the field, today’s quantum computers are not yet capable of executing practical quantum algorithms that would bring immediate changes. The current stage of quantum technology is characterized by noisy intermediate-scale quantum (NISQ) devices, which have a restricted number of qubits and are susceptible to errors [8].

The main challenge is to develop a practical and efficient quantum-classical hybrid model that can utilize the strengths of both approaches to achieve high accuracy in ML tasks. The model needs to be optimized for the limited qubit capacities of NISQ devices while also demonstrating better efficiency than classical-only models. The early development stage of quantum technologies and the inherent noise in current quantum devices partially restrict the full potential of QML. However, exploring how VQAs can improve the performance of classical ML algorithms is expected to significantly impact numerous areas, such as regression problems.

Recent studies have also explored the challenges posed by the curse of dimensionality in VQAs. Three major issues have been identified: barren plateaus (BPs), highly non-convex objective landscapes with numerous suboptimal local minima, and the expressibility of the chosen circuits [9]. Notably, BPs, where the gradient of the cost function vanishes exponentially with the number of qubits, are closely linked to the expressibility of the circuit and the prevalence of suboptimal minima in the optimization landscape [10, 11]. These challenges significantly hinder the convergence of VQA-based QML models as the system scales with more qubits. While active research addresses these issues by proposing alternative approaches, these limitations highlight the intricate balance required in designing scalable and effective QML models.

This emerging quantum approach holds significant promise for tackling computationally expensive problems in industrial applications, such as those requiring numerical resolutions of equations through simulations that can take hours or even days. The case of dendritic solidification in metals is a prime example of a problem frequently encountered in additive manufacturing industrial environments [12], which currently relies on resource-intensive numerical simulations to solve phase field modelling problems [13].

One of the most studied alternatives in the literature for accelerating costly simulations has been surrogate models [14]. The idea behind this approach is to train surrogate models, usually ML models, using a representative dataset derived from simulations to replace the original complex model [15]. Surrogate modelling has been widely studied for model approximation, design space exploration, problem formulation, sensitivity analysis, and optimization [16, 17]. Surrogate models not only allow for exploration or a better understanding of the relationships within our problem, but they also represent a key research avenue in solving costly problems.

This work demonstrates the utility of VQA for solving data-based regression problems as a potential substitute for current ML algorithms in a complex material microstructure representation challenge. The possibility of addressing computationally expensive issues with the aid of quantum computers, which are currently being developed and have already become available, is shown by this novel technique.

This paper is structured as follows. Section 2 details the fundamentals of QML by explaining VQA components. Then, in Sect. 3 the industrial problem of dendritic solidification of metals is introduced and the phase field model is formulated. The methodology followed from data acquisition to quantum surrogate modelling is explained in Sect. 4, and the results obtained are discussed in Sect. 5. This work is concluded in Sect. 7, where several future directions are outlined to bring this approach closer to real-world quantum hardware implementation.

2 Quantum machine learning fundamentals

In quantum regression problems, some initial tasks such as feature engineering or data cleaning and the final task of evaluating the performance are carried out in the same manner as in classical ML. However, the key distinction lies in the fact that the prediction algorithm used is not a conventional ML algorithm but rather a VQA.

In general, VQA proceeds as follows: Classical data is introduced into the quantum circuit, where it is encoded and processed. The circuit’s output is then measured, and these measurements are used to compute the cost function, which quantifies the performance of the circuit. A classical optimization algorithm subsequently adjusts the circuit’s parameters based on the cost function values. This iterative process continues until convergence is achieved or a specified stopping criterion is met.

Finally, the model’s performance is evaluated using specific hyperparameters, including the choice of observable for the expectation value, the batch size (number of samples used in each training iteration), and the number of layers in the quantum circuit. The performance is measured by calculating error metrics such as MSE or \(R^2\) score, similar to classical ML regression problems.

-

Variational Quantum Circuit (VQC): It consists of two main phases: an encoding phase, where classical data is mapped to quantum initial states, and an ansatz, which is the unitary transformation applied in the circuit for processing the information encoded and it generates a collection of parametrized states that the VQA explores.

Both phases are composed of quantum logic gates, which are unitary operators capable of altering the state of one or more qubits. These gates can depend on adjustable parameters, such as rotation gates, or be fixed gates like CNOT that do not rely on parameters [5, 18].

Let \(\theta = (\theta _0, \theta _1, \ldots , \theta _{n-1})\) be the set of n parameters and let \(\hat{\textbf{U}}(\theta )\) denote. When applied to the initial state \(|\psi _0\rangle\), the circuit generates the state \(|\psi (\theta )\rangle\).

$$\begin{aligned} |\psi (\theta )\rangle = \hat{\textbf{U}}(\theta ) |\psi _0\rangle \end{aligned}$$(1)The set of parameters \(\theta\) is iteratively adjusted to minimize the cost function, enabling optimization of the circuit’s performance [19, 20].

-

Quantum Measurement: After applying the VQC to the qubits, the resulting quantum state represents a superposition of multiple basis states. Quantum measurement is then conducted to extract information from this given output quantum state. When a quantum state is measured under a specific observable with a set of parameters \(\theta\), the qubit collapses to a quantum state on a computational basis, obtaining classical results. This collapse is key to understanding the probabilistic nature of quantum predictions [21].

There are several types of quantum measurements, each serving different purposes and providing different types of information about the quantum state. Projective measurements are a common type of quantum measurement where the quantum state is projected onto one of the eigenstates of an observable [22, 23]. The results of these measurements correspond to the eigenvalues of the observable.

Projective measurements can be performed along the axes defined by the Pauli operators. For a single qubit, this includes measurements along the X, Y, and Z axes:

-

Pauli-X (X): Measures in the basis \(\{|+\rangle , |-\rangle \}\), where the matrix is

$$\begin{aligned}\sigma _{x} = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} \end{aligned}$$ -

Pauli-Y (Y): Measures in the basis \(\{|+i\rangle , |-i\rangle \}\), where the matrix is

$$\begin{aligned}\sigma _{y} = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix} \end{aligned}$$ -

Pauli-Z (Z): Measures in the standard computational basis \(\{|0\rangle , |1\rangle \}\), where the matrix is

$$\begin{aligned}\sigma _{z} = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} \end{aligned}$$

Measurements are generally considered irreversible operations that replace quantum information with classical information [24] and are essential for evaluating the cost function, which subsequently guides the optimization process to identify the optimal parameter configuration.

-

-

Cost Function: The parameters are generally optimized with respect to some cost function \(C(\theta )\), analogue to loss function used in ML, and which assesses the quality of the proposed solution based on the parameter set \(\theta\) [5].

The cost functions that can be used in ML approaches with VQAs are those that are used for classical methods [25]. In the case of regression, two of the most commonly used are MSE and Huber losses.

Let \(y_i\) be the actual values and \(\hat{y}_i\) are predicted values, the MSE is formulated as:

$$\begin{aligned} \text {MSE}(y, \hat{y}) = \frac{1}{n} \sum _{i=1}^{n} (y_i - \hat{y}_i)^2 \end{aligned}$$(2)On the other hand, Huber loss \(L_{\delta }(y, \hat{y})\) is a robust cost function that behaves quadratically for small errors and linearly for larger errors [26].

$$\begin{aligned} L_{\delta }(y, \hat{y}) = \frac{1}{n} \sum _{i=1}^{n} L_i(y_i, \hat{y}_i, \delta ) \end{aligned}$$(3)Where \(L_i(y_i, \hat{y}_i, \delta )\) is the Huber loss for each pair \((y_i, \hat{y}_i)\), given by:

$$\begin{aligned} L_i(y_i, \hat{y}_i, \delta ) = {\left\{ \begin{array}{ll} \frac{1}{2}(y_i - \hat{y}_i)^2 & \text {if } |y_i - \hat{y}_i| \le \delta \\ \delta \cdot (|y_i - \hat{y}_i| - \frac{1}{2} \delta ) & \text {if } |y_i - \hat{y}_i| > \delta \end{array}\right. } \end{aligned}$$(4) -

Classical Optimizer: This component adjusts the parameters \(\theta\) to minimize the cost function \(C(\theta )\) [3] [27]. The measurement results are used to compute the cost function and the VQA employs a classical optimizer to find the optimal parameters \(\theta\) that minimize this function. Therefore, VQA’s success relies on the reliability of the chosen optimization method [4].

The most common optimization methods are gradient-based ones. They consist of computing the gradient, \(\nabla _{\theta } C(\theta )\), of the cost function, \(C(\theta )\), with respect to its parameter \(\theta\) [4]. The gradient indicates the direction at point \(\theta\) in parameter space where the cost function increases most rapidly. To minimize the cost function, parameters are adjusted slightly in the opposite direction of the gradient.

$$\begin{aligned} \theta ^{(t+1)} = \theta ^{(t)} - \eta \nabla _{\theta } C(\theta )\bigg |_{\theta ^{(t)}}, \quad t = 1, \ldots , T \end{aligned}$$(5)where \(\nabla _{\theta } C(\theta ) = \begin{pmatrix} \frac{\partial C(\theta )}{\partial \theta _1}, \ldots , \frac{\partial C(\theta )}{\partial \theta _{n_{\theta }}} \end{pmatrix}\) and \(\eta\) are the step size, often referred to as the learning rate. Here, T is the total number of iterations [5] [27].

3 Phase field problem

3.1 Industrial context

The solidification of metals plays a crucial role in some of the most important manufacturing processes in today’s industry, such as casting, welding, and additive manufacturing, which normally constitute the first step in a complex process chain. The complexity of solidification processes in materials science arises from the intricate interplay of thermal, mechanical, and chemical phenomena at different scales, from the atomic to the macroscopic.

Phase field methods have become a very powerful tool in the modelling and simulating of microstructure evolution, including solidification processes, due to their ability to tackle arbitrarily complex interface shapes using continuous fields [28]. Phase field methods introduce an Order Parameter, OP, \(\varphi \in\) [−1, 1] or [0, 1], depending on the author’s choice, where the central point of the interval represents the interface between different phases or states of matter within the system.

These physics-based numerical solvers deliver accurate insights into targeted phenomena. Nonetheless, the computational complexity associated with solving such equations is typically significant and can become overwhelming when exploring a wide parametric space necessitates numerous simulations [17].

3.2 Physics-based phase field model

In this use case, a simplified, two-dimensional phase field model of dendritic solidification is considered [29], which disregards effects associated with fluid dynamics, expansion, and shrinking brought about by phase transitions and the possible presence of thermal noise. Besides the non-conserved OP \(\varphi (r, t)\), a temperature field T(r, t) is also evolved.

The time-dependent Allen–Cahn equation is assumed for the evolution of the system,

where \(\tau\) is a characteristic time scale and the term on the right-hand side denotes the functional derivative of Ginzburg–Landau free energy,

The local free energy density \(f(\varphi , m)\) is chosen as a double-well potential, in such a way that it has two stable states or local minima, located at \(\varphi =0\) and \(\varphi =1\),

Anisotropy is introduced by assuming that \(\varepsilon\), a small parameter related to the thickness of the interface, depends on the direction of the outward-pointing normal vector of the interface. In particular,

with \(\bar{\varepsilon }\) the mean value of \(\varepsilon\) and the anisotropy \(\sigma (\theta )\) expressed as

The factor j is related to the degree of symmetry of the crystal structure. For example, n = 4 for cubic symmetry in a (100) plane and n = 6 for hexagonal symmetry in a (0001) plane. \(\delta\) is a parameter controlling the strength of the anisotropy, \(\theta\) is the angle measured from a reference direction, and \(\theta _0\) is the initial angle offset [30].

The parameter m gives the thermodynamic driving force so that as the melt becomes under-cooled, the well will tilt towards the solid phase.

where \(\alpha\) is a positive constant satisfying \(\alpha < 1\) [31] and \(T_{eq}\) is the equilibrium temperature. Considering the explicit form of the local energy density, it is possible to obtain the evolution of the order parameter,

Finally, the time evolution of the normalized temperature is given by:

So that the equilibrium temperature is 1. The diffusion constant \(\kappa\) is assumed to be identical for both the liquid and solid phases. The domain is discretized using a 100x100 grid with a \(dx = dy = 0.03\) spacing. A finite difference algorithm, utilizing a five-point stencil in two-dimensional space, is used to approximate the Laplace operator and the directional derivatives, while a simple explicit Euler time marching scheme is employed for the time integration. The code provided in [29] was employed within a Python wrapper, to effectively control the parametric simulations conducted in MATLAB.

4 Methodology

All methods used in this study are described in detail below. The code used to train and validate the surrogate models and data supporting this work are available on GitHub at https://github.com/eidergarate/phase_field_qml, to allow reproducibility.

4.1 Data and simulations

Seven experiments were conducted, varying the parameter \(\theta _0 = \{0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4\}\), respectively, labelled as experiments 1 through 7. The remaining three parameters were held constant, with k fixed at 1.8, j fixed at 4, and \(\alpha\) fixed at 0.9.

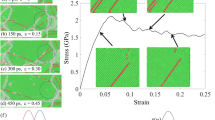

For each experiment, as shown in Fig. 1, a sequence of spatiotemporal data is obtained. This data can be visualized in two dimensions to understand the evolution of the Order Parameter (OP) that ranges between 0 and 1 in this case. These visualizations provide insights into the dynamic changes and patterns that occur over time, allowing for a detailed analysis of the solidification process under varying conditions of theta.

Certain time instances from the start \(t=0\) until \(t = tp\) are used to train a surrogate prediction model capable of estimating the value of the OP at the final instant \(t = T\). The choice of tp is crucial, as the observations temporally closer to the output state are more similar to it. However, the number of iterations used as predictors defines the computational dependence of the surrogate model, as those iterations of the phase field used as inputs are required each time a new solidification pattern needs to be predicted.

In this case, the duration of the simulations is fixed at 4000 steps, so \(T=4000\). By discretizing the time, values of Temperature and OP are obtained for each time step from \(t=0\) to \(t=4000\).

4.2 Feature extraction

Regarding the problem under study, the numerical resolution of the phase field represents the spatiotemporal evolution of solidification in metals, presenting a problem of this nature. The computational advantage brought by the use of surrogate models, particularly ML models, lies in the ability to predict the final solidification state of the metal without the need to solve the equations iteration by iteration.

In deciding at which point in time the features should be extracted, a challenge is presented. On the one hand, the closer tp is to the final instant \(t = 4000\), the greater the relevance of the inputs to the output will be, and therefore, higher accuracy is to be expected in the predictive model. On the other hand, the utilization of more temporally proximate partial resolutions from the numerical solver demands increased computational resources when predictions are made by the surrogate model. Thus, for the representation of the predictor variable space of the model, two temporal instances are set. The first one is placed after \(t=1000\) iterations, where a visible solidification of the material begins to be appreciated. The second instance is positioned on the midpoint of the process, that is, at \(t=2000\), which is the halfway point between the start at \(t=0\) and the end at \(t=4000\).

For the spatial representation of the system, a strategy was selected that allows the extraction of the spatial characteristics of solidification. The nature of the problem studied necessitated a pixel-by-pixel prediction, with the issue beginning and ending in spaces of the same dimensions. Consequently, the spatial characteristics of the problem were extracted on a pixel-by-pixel basis.

Each of the pixels of the problem is defined by the space discretization made by the phase field’s numerical solver using a 100x100 grid.

Each pixel in the image is defined by its position (i, j), where \(i = 1,...,100\) and \(j = 1,..., 100\). For each pixel at position (i, j), 25 features for Temperature and 25 features for the Order Parameter (OP) were extracted. These values correspond to the 25 pixels formed by the current pixel and its surrounding pixels up to a distance of 2, as shown in Fig. 2.

In summary, seven datasets are available, one for each experiment. Each dataset contains 10.000 observations, as the space has been discretized with a 100x100 grid, so there are 10.000 pixels. For each pixel, 150 features have been extracted, corresponding to the Temperature and the Order Parameter (OP) of the current pixel and its surrounding pixels performed for the time instants \(t=1000\), \(t=2000\), and \(t=4000\).

4.3 Surrogate modelling approaches

The information used as inputs for the different predictive models were the features extracted at instants \(t=1000\) and \(t=2000\) and the output variable of the regression model is the state of solidification at the final instant of the process, OP at \(t=4000\).

Furthermore, only one quadrant of the image was used to simplify computational resources. As can be observed in the previous images, the solidification in the final state is expected to achieve a high degree of symmetry. More precisely, the complete image can be achieved by \(90^{\circ }\) rotations through each of the quadrants using only one of them as shown in Fig. 3. Therefore, accurately predicting the state of the lower-left quadrant is deemed sufficient to generalize the solidification state for the entire experiment. In this manner, by filtering \(0< x \le 50\) and \(0 < y \le 50\), the 7 datasets used in the modelling task contain 2.500 observations each instead of 10.000.

4.3.1 Classical machine learning

Following a comprehensive comparative review, the extreme gradient boosting (XGBoost) algorithm was chosen for the regression task due to its superior performance and flexibility compared to other evaluated ML algorithms. XGBoost, recognized for its efficiency in managing large and complex datasets, has demonstrated notable capabilities in enhancing model accuracy through its gradient boosting framework.

XGBoost is a powerful and adaptable ML algorithm, renowned for its performance in structured or tabular data scenarios. Developed to improve upon traditional gradient boosting techniques, XGBoost uses an ensemble of decision trees to iteratively refine and enhance predictive accuracy [32].

All experiments will be conducted in Python, with the scikit-learn [33] and XGBoost [32] libraries utilized for implementation and analysis.

For the validation of the model, several hyperparameters were tested. For the number of estimators values of \(\{50, 100, 150, 200\}\) were used; for the maximum depth on the trees, 3, 5, and 7 were used; for the learning rate \(\{0.01, 0.1, 0.2\}\); for the subsample ratio in the training instances two values were tested, 0.8 and 1; and finally, for the variable subsample in each level of the tree two values were tested, 0.8 and 1.

4.3.2 Quantum machine learning

This section describes the implementation in Python using libraries such as PennyLane [34]: the data preprocessing, the components selected in the VQA, and the optimization loop to adjust the parameters of the circuit.

-

Data preprocessing.

-

Feature scaling is a crucial preprocessing step that involves transforming numerical features to a standardized scale. Using StandardScaler independent variables are standardized to have a variance of 1, while the dependent variable is scaled to range between −1 and 1 with the MinMaxScaler as the expected value of Pauli-X observable is ranged in \([-1, 1]\).

-

Data is converted into tensors to take advantage of the simulators provided by PennyLane, which offer an organized and efficient method for storing and manipulating data [35].

-

-

Quantum Circuit Structure depicted in Fig. 4.

-

For the encoding of classical data, angle encoding is employed using RY rotation gates. This method uses a parameter to rotate the original value to a qubit state, performing a rotation around the Y-axis of the Bloch sphere [36]. The rotation angle is determined by the classical data to be encoded, translating classical information into a quantum state for further processing. For an \(n\)-dimensional sample, \(n\) qubits are needed to generate the corresponding quantum state. Since the dataset consists of 352 samples with 7 features each, angle encoding requires 7 qubits to represent each sample.

-

The circuit employs a predefined high-level ansatz provided by PennyLane called StronglyEntanglingLayers. This template is designed to create multiple layers within the quantum circuit. Each layer consists of rotational quantum logic gates with variational parameters and CNOT gates. The rotations involve, by default, three parameters per qubit, which are applied across all qubits in the circuit. This is complemented by cyclic CNOT gates that ensure the entanglement of qubits. This design ensures connectivity between qubits, enhancing the circuit’s ability to explore a wider range of quantum states.

-

The circuit is structured in 7 layers of StronglyEntanglingLayers, with each layer’s sequence of gates being repeated a specified number of times. This arrangement allows the circuit to process a wide range of quantum states effectively.

-

Classical data is repeatedly reintroduced into the quantum circuit at each new StronglyEntanglingLayers, a process known as data re-uploading, which addresses the no-cloning theorem [37] which states that it is not possible to copy quantum data.

-

For quantum measurement, after comparing the accuracy obtained in some preliminary tests using the Pauli-X, Pauli-Y, and Pauli-Z operators, it was found that Pauli-X yielded more precise results. Therefore, Pauli-X is selected as the observable in this case.

-

-

Optimization Process.

-

Huber loss was chosen for this problem due to its effectiveness in handling the distribution of output values, which are real numbers between 0 and 1, with a higher frequency of values closer to 0.

-

The Adam optimizer was employed, which is a successful algorithm for optimizing ML models and it is the default optimizer of scikit-learn and PyTorch [5].

-

The optimization of the model’s parameters is done iteratively. Firstly, the dataset is divided into batches; smaller random subsets of data points from the training set for each iteration. Each of these batches is used to compute the gradient and update the model parameters. Secondly, the optimizer calculates gradients based on the cost function evaluated using the current state of the quantum circuit. Finally, the optimizer updates the parameters based on the computed gradients, minimizing the chosen cost function.

-

4.4 Validation strategies

Two validation approaches were implemented: the two-set cross-validation (2SCV), using a limited training set of two datasets, and the leave-one-group-out cross-validation (LOGOCV), where the model is trained on six datasets to predict the remaining dataset. This dual approach aims to assess whether a reliable estimation can be achieved with a limited training set, or if including information from all datasets significantly improves the model’s performance.

In the 2SCV, there are \(\left( {\begin{array}{c}7\\ 2\end{array}}\right)\) pairs of numbers with no repetitions that can be used to train the model and each of them can be used to predict in the remainder 5 sets. In total, there are 105 combinations. Since these tests would entail an excessively high computational cost for the objective of this study, the number of cross-validations performed was fixed by 20, where the training and test sets were defined following a uniform distribution.

Finally, the evaluation of the predicted values focussed on the coefficient of determination (\(R^2\)), which measures the proportion of variance in the dependent variable explained by the independent variables, providing insight into the model’s fit. An \(R^2\) value of 1 signifies that the independent variables fully explain the variance in the dependent variable, whereas a value of 0 indicates that the independent variables do not account for any of the variability.

where \(y_i\) is the actual value of the dependent variable, \(\hat{y}_i\) is the predicted value of the dependent variable, and \(\bar{y}\) is the mean of the actual values.

5 Results and discussion

This section presents the results of the classical ML approach using the XGBoost algorithm and the quantum approach via the quantum variational circuit. Both models were evaluated using the two proposed strategies, 2SCV and LOGOCV. A detailed discussion is provided to assess which strategy is more appropriate for the given context.

The accuracy of each prediction is assessed by first calculating the \(R^2\) score across the entire grid and subsequently evaluating the MSE (mean squared error) separately within the regions corresponding to the solid, liquid, and interface phases as defined in the actual data. Additionally, visual representations of the final solidification state estimated by each method are provided to facilitate an understanding of the result’s reliability. Furthermore, the actual images, which represent the target to be accurately estimated by the models, are shown in Fig. 5 to allow for a visual comparison of the effectiveness of the proposed predictive approaches.

5.1 Classical machine learning

5.1.1 2SCV Strategy results

The results obtained from the XGBoost model in the entire grid using the 2SCV strategy are gathered in Table 1. As it can be appreciated, the difference between the train and test metrics in some cases is significant. More specifically, the mean absolute difference between them is about 0.138, having a maximum difference of 0.39.

This huge difference implies that the model does not generalize accurately and that the results depend on the specific experimental sets used in the training and testing tasks. Moreover, the mean \(R^2\) across all the training sets is 0.993 with a minimal and even negligible standard deviation of 0.009; while the testing mean \(R^2\) value is 0.856 with a quite low standard deviation of 0.095. Thus, in some cases, the algorithm has tended to overfit the training set, as near-perfect prediction is achieved in these cases, but not in the test set.

Furthermore, the predicted dendritic patterns are shown in Fig. 6. As previously noted, 20 tests were conducted across the various experiments. The image presents one from each of the 7 experiments, specifically, the test that exhibited the most stable performance, with the ratio of \(R^2\) in the training set to \(R^2\) in the testing set remaining closest to 1. These tests are highlighted in bold in Table 1.

As can be observed, the dendritic shape is captured satisfactorily. However, the boundary region between the solid and liquid phases appears slightly diffuse. Table 2 presents the MSE score calculated for each of the three areas (liquid, solid, and interface) based on the reference solidification state provided by the real data. The results indicate that the MSE generally increases in the test set across all three geometry zones. In the solid zones, the error remains low, even in the test set. In contrast, the liquid zones exhibit a noticeably higher error during testing. However, the most significant challenge lies in the boundary between the solid and liquid phases. This region shows the highest errors, with a mean absolute error exceeding \(10^{-1}\) in most cases. Given the target range of \([-1,1]\), this represents a substantial deviation, underscoring the difficulty of accurately predicting phase boundaries and capturing the complex dynamics in this transitional zone.

5.1.2 LOGOCV strategy results

Regarding the LOGOCV strategy, the results obtained in the entire grid are shown in Table 3. In this case, the mean absolute difference between the train and test \(R^2\) values is 0.064, and the maximum difference is 0.097, both values indicating the consistency of this approach. Furthermore, comparing the mean \(R^2\) values for training and testing, 0.993 and 0.929, respectively, highlights the model’s ability to generalize and predict effectively when additional experiments are included in the training phase. This is further supported by the low value of the \(R^2\)’s standard deviations in the training and the testing, 0.004 and 0.022, respectively.

In addition, the results obtained with the LOGOCV approach are depicted in Fig. 7. The microstructure shape is predicted satisfactorily, although the boundaries between solid and liquid exhibit slightly blurred values.

Finally, Table 4 presents the MSE scores obtained using the LOGOCV strategy, applied to the three solidification phases. As it can be observed, the errors in the solid and liquid zones remain consistent from training to testing. However, the error increases in the boundary region, though this increase is minor compared to 2SCV. Specifically, in the LOGOCV strategy, the error exceeds \(10^{-1}\) in only one instance, while it is considerably lower in the remaining cases.

5.1.3 Discussion

In the LOGOCV strategy, both the training and testing results exhibit strong performance, showing significant improvement over those obtained with the 2SCV strategy. Additionally, this approach demonstrates a superior ability to generalize the predicted dendrite solidification patterns in this study, maintaining a more stable ratio between the \(R^2\) train and \(R^2\) test scores compared to the 2SCV strategy.

Based on visual inspection, the predictions provided by both strategies appear to be quite similar. Therefore, to allow for a more precise comparison, pixel-by-pixel absolute errors between the predicted and actual images are calculated.

In most cases, the region with the greatest prediction error is the boundary between the solid and liquid phases. However, due to differences in the final microstructure shapes across the experiments, the conclusions drawn differ between experiments 1 to 3 and experiments 4 to 7. In the first group, a greater error is observed at the tips of the protrusions of the blades when training is performed with 2SCV, as opposed to the LOGOCV. This is illustrated by the prediction example from experiment 2 in Fig. 8. In contrast, as shown in the example from experiment 6 in Fig. 9, no discernible areas exhibit greater error in one validation strategy compared to the other, although the overall error remains higher when 2SCV is used.

5.2 Variational quantum algorithm

5.2.1 2SCV strategy results

The train and test \(R^2\) scores for the entire grid, obtained using the 2SCV strategy (with two experiments for training and one unseen experiment for testing), are presented in Table 5. As can be appreciated, the values between training and testing are very close in most cases, with a mean absolute error of 0.079, meaning that overfitting is avoided. Even though, some of the validation sets get larger differences between them, getting a maximum difference of 0.279. The mean scores in the training and testing were 0.629 and 0.628, respectively, which are considerably low but stable from training to testing. In addition, the variation across different iterations is minimal, with a standard deviation of 0.039 in the train and 0.085 in the test.

The predicted results are drawn in Fig. 10. Only 7 of the 20 experiments conducted are presented in the figure, one for each testing set. The selected experiments are those that demonstrated the greatest stability in the \(R^2\) score, specifically, those with a train \(R^2\) to test \(R^2\) ratio closest to 1. These tests are highlighted in bold in Table 5.

In this case, the shape is also captured reasonably well, with a noticeable distinction between the first three figures and the last four. Furthermore, the boundary between the liquid and solid states does not achieve complete prediction accuracy and appears diffuse. For this reason, the performance of the algorithm is calculated by the MSE score in the three different solidification zones and presented in Table 6. As can be seen in the results, the errors remain stable from training to testing across all regions. While the errors for the liquid and solid phases are generally higher compared to the XGBoost model, they are still acceptable in most cases. However, in the case of the boundary between the liquid and solid phases, errors exceeding 1 are observed, indicating an absolute error of over 50% in most instances, given the output range of [−1, 1]. This suggests that the model struggles to predict this boundary accurately. Interestingly, the stability of errors from training to testing in these boundary cases may point to a convergence issue in the model, which seems unable to predict these regions with high precision.

5.2.2 LOGOCV strategy results

Similarly to the approach used for the classical model, the experimentation is repeated using the LOGOCV method. The results are presented in Table 7. In this case, the differences between the training and testing phases are also visible, but the mean absolute error between the two performances is 0.080, with a maximum difference of 0.113. The mean \(R^2\) values are again close to each other, with training and testing mean values of 0.623 and 0.615, respectively. Focussing on the standard deviation across the different testing sets, the values obtained are 0.020 for training and 0.077 for testing.

Regarding the visual validation of the results, the predicted dendritic patterns for the LOGOCV strategy are shown in Fig. 11, where the experiment identifiers are also printed on the top left edge.

In general, the shape is well captured, with the most challenging areas across all images being those at the boundary between solid and liquid phases, as well as the four liquid spots at the centre of the dendritic structures. This is evident from the diffuse areas observed at the boundaries between the different phases. Additionally, a noticeable error appears along a perimeter near the centre of the images in both validation strategies. To differentiate the performance across zones, the MSE scores are calculated for the three solidification phases, and the results are presented in Table 8. As can be observed, the errors remain stable from training to testing across all regions. In this case, the predictions at the liquid–solid boundary are particularly inaccurate again, with errors exceeding 1 and deviations often surpassing 50%. Expanding the train set under the LOGOCV strategy does not lead to improvements compared to the 2SCV strategy, as the results remain almost unchanged. This consistency suggests a persistent limitation in the model’s convergence.

5.2.3 Discussion

The difference between the \(R^2\) scores for the train and test sets in both strategies across the entire grid is almost identical, with a mean of 0.08. Furthermore, the standard deviation of the train–test difference in LOGOCV is nearly half that observed in 2SCV, indicating slightly more consistency in the results with this strategy.

However, it can be observed that the \(R^2\) values are slightly lower in the results obtained with the LOGOCV strategy, particularly for testing. This indicates that using all other experiments as the training set does not provide better generalizability than using only two experiments in the case of the proposed VQA. Therefore, given the trade-off between model performance and computational efficiency, it would be more beneficial to choose the strategy of training with two sets instead of using all six experiments. This approach not only reduces the computational cost but also avoids the additional memory usage required by the larger training set.

Moving on to the visual results, these are virtually identical in both strategies, with the prediction error being almost the same and similarly distributed. However, to learn more about the prediction errors made by the VQA, the absolute pixel-to-pixel errors between the actual and predicted experiments were calculated. To enable a comparison with the errors obtained using XGBoost, the results for experiments 2 and 6 are presented. It is important to note that the results from the 2SCV strategy correspond to the training configuration with the most stable \(R^2\) performance.

Figure 12 illustrates the results for experiment 2 obtained with the VQA model trained using both validation strategies, where a high degree of similarity can be observed. A similar observation applies to experiment 6, as shown in Fig. 13.

6 Conclusions

Both classical and quantum ML models have demonstrated effectiveness in accurately modelling the phase field problem. A comparison of the validation strategies reveals that LOGOCV enhances the generalizability of the classical approach, while both strategies yield similar results for the proposed VQA. In both cases, it has been observed that predicting the boundary between the solid and liquid phases is particularly challenging. Additionally, when the protrusions of the dendritic blades are large, both models tend to exhibit significant errors. Finally, since the LOGOCV strategy does not improve the results of the quantum model, it is concluded that the selected circuit architecture is adequately validated using the 2SCV strategy, which provides a computational advantage over the LOGOCV strategy.

Regarding the innovative proposal of applying a quantum methodology to address a real-world regression task, the findings indicate that the variational quantum algorithm (VQA) attains a satisfactory degree of accuracy in forecasting the terminal state of the phase field problem. This assertion is substantiated by the congruence observed between the predicted and actual geometries. However, the convergence of the model may have been affected by the use of an 8-qubit system, which could limit its ability to fully capture intricate features, such as the boundaries between solid and liquid phases and the dendritic protrusions. This limitation may be attributed to several factors discussed in the literature, including barren plateaus (BPs), the high expressibility of the circuit, and the presence of non-convex optimization landscapes with suboptimal local minima. These challenges highlight the complexity of scaling VQAs and underline the need for improved circuit architectures and training strategies to achieve better convergence and predictive performance.

Nonetheless, this work shows that quantum algorithms are not only of theoretical value but are also moving closer to solving real-world problems in industrial sectors. This represents significant progress towards the practical use of quantum computing, particularly in optimizing complex industrial processes.

VQA approaches are designed to leverage the advantages of quantum computing in addressing complex problems. The successful application to an industrial case such as the phase field solidification problem demonstrates that VQAs can also be effective in other industrial problems where classical methods could encounter limitations. This adds VQAs as another possible method for solving ML regression problems and demonstrates that they can be considered an efficient option as a surrogate model.

In this context, the use of surrogate modelling proves crucial in scenarios where physical models are highly complex, offering significant computational efficiency while providing valuable insights into the phenomena under study. In the case of the phase field model, its ability to yield information about the mechanical properties of metals makes it particularly relevant across various industries, including additive manufacturing. Implementing a VQA approach shows promise, as it could provide a computational advantage over traditional models in these contexts. The results of this work demonstrate that VQAs can serve as effective surrogate models for tackling such complex systems.

While applicability has been demonstrated, challenges still need to be addressed to improve the efficiency and robustness of these methods on noisy or limited quantum hardware. The widespread practical use of quantum computing will depend on advancements in quantum error correction and the scalability of algorithms. While this work demonstrates the feasibility of achieving relevant results with existing NISQ hardware, it is crucial to acknowledge that current technological constraints, including limited qubit counts, noise, and hardware instability, present significant barriers to immediate deployment in industrial contexts.

A key challenge identified in this study is the impact of high-dimensional data on the convergence of variational quantum algorithms (VQAs), particularly due to issues like barren plateaus and non-convex optimization landscapes. As future work, we aim to explore advanced optimization techniques and strategies to mitigate these issues. By improving the optimization process and circuit design, we hope to enhance the convergence of VQAs and unlock their potential for tackling high-dimensional problems more effectively.

Data Availability

Not applicable.

Code Availability

References

Yang Z, Zolanvari M, Jain R (2023) A survey of important issues in quantum computing and communications. IEEE Communications Surveys & Tutorials 25(2):1059–1094. https://doi.org/10.1109/COMST.2023.3254481

Cerezo M, Arrasmith A, Babbush R, Benjamin SC, Endo S, Fujii K, McClean JR, Mitarai K, Yuan X, Cincio L et al (2021) Variational quantum algorithms. Nature Rev Phys 3(9):625–644. https://doi.org/10.1038/s42254-021-00348-9

Adebayo P, Basaky F, Osaghae E (2023) Variational quantum-classical algorithms: A review of theory, applications, and opportunities. UMYU Sci 2(4):65–75. https://doi.org/10.56919/usci.2324.008

Lockwood O (2022) An empirical review of optimization techniques for quantum variational circuits. https://doi.org/10.48550/arXiv.2202.01389. arXiv preprint arXiv:2202.01389

Wold K (2021) Parameterized quantum circuits for machine learning. Master’s thesis, University of Oslo, Oslo, Norway (September). Thesis for the degree of Master of Science in the Department of Physics, Faculty of Mathematics and Natural Sciences

Bauckhage C, Bye R, Iftikhar A, Knopf C, Mustafic M, Piatkowski N, Sifa R, Stahl R, Sultanow E (2022) Quantum machine learning-state of the art and future directions. Federal Office for Information Security, 122

Arute F, Arya K, Babbush R, Bacon D, Bardin JC, Barends R, Biswas R, Boixo S, Brandao FG, Buell DA et al (2019) Quantum supremacy using a programmable superconducting processor. Nature 574(7779):505–510. https://doi.org/10.1038/s41586-019-1666-5

Preskill J (2018) Quantum computing in the nisq era and beyond. Quantum 2:79. https://doi.org/10.22331/q-2018-08-06-79

Larocca M, Thanasilp S, Wang S, Sharma K, Biamonte J, Coles PJ, Cincio L, McClean JR, Holmes Z, Cerezo M (2024) A Review of Barren Plateaus in Variational Quantum Computing. arXiv. https://doi.org/10.48550/arXiv.2405.00781

Zhang Kaining (2023) Training Theory of Variational Quantum Machine Learning. PhD thesis, The University of Sydney

Garcia RJ, Zhao C, Bu K, Jaffe A (2023) Barren plateaus from learning scramblers with local cost functions. J High Energy Phys 2023(1):90. https://doi.org/10.1007/JHEP01(2023)090

Choi JY, Xue T, Liao S, Cao J (2024) Accelerating phase-field simulation of three-dimensional microstructure evolution in laser powder bed fusion with composable machine learning predictions. Addit Manuf 79:103938. https://doi.org/10.1016/j.addma.2023.103938

Li W, Fang R, Jiao J, Vassilakis GN, Zhu J (2024) Tutorials: Physics-informed machine learning methods of computing 1D phase-field models. APL Mach Learn 2(3):031101. https://doi.org/10.1063/5.0205159

Palar PS, Liem RP, Zuhal LR, Shimoyama K (2019) On the use of surrogate models in engineering design optimization and exploration: the key issues. In: Proceedings of the Genetic and Evolutionary Computation Conference Companion, pp. 1592–1602. ACM, Prague Czech Republic. https://doi.org/10.1145/3319619.3326813

Montes De Oca Zapiain D, Stewart JA, Dingreville R (2021) Accelerating phase-field-based microstructure evolution predictions via surrogate models trained by machine learning methods. npj Computational Materials 7(1), 3 https://doi.org/10.1038/s41524-020-00471-8

Tian J, Hou M, Bian H, Li J (2023) Variable surrogate model-based particle swarm optimization for high-dimensional expensive problems. Complex Intell Syst 9(4):3887–3935. https://doi.org/10.1007/s40747-022-00910-7

Alizadeh R, Allen JK, Mistree F (2020) Managing computational complexity using surrogate models: a critical review. Res Eng Design 31(3):275–298. https://doi.org/10.1007/s00163-020-00336-7

Winker T, Çalikyilmaz U, Gruenwald L, Groppe S (2023) Quantum machine learning for join order optimization using variational quantum circuits. In: Proceedings of the International Workshop on Big Data in Emergent Distributed Environments, pp. 1–7. https://doi.org/10.1145/3579142.3594299

Guo X, Muta T, Zhao J (2024) Quantum circuit ansatz: Patterns of abstraction and reuse of quantum algorithm design. https://doi.org/10.48550/arXiv.2405.05021. arXiv preprint arXiv:2405.05021

Choquette A, Di Paolo A, Barkoutsos PK, Sénéchal D, Tavernelli I, Blais A (2021) Quantum-optimal-control-inspired ansatz for variational quantum algorithms. Phys Rev Res 3(2):023092. https://doi.org/10.1103/PhysRevResearch.3.023092

Gil Fuster E, Latorre JI (2019) Variational quantum classifier. Master’s thesis, Universitat de Barcelona, Facultat de Física

Flammia ST, Liu Y-K (2011) Direct Fidelity Estimation from Few Pauli Measurements. Phys Rev Lett 106(23):230501. https://doi.org/10.1103/PhysRevLett.106.230501

Busch P, Lahti P, Pellonpää J-P, Ylinen K (2016) Quantum Measurement. Theoretical and Mathematical Physics. Springer, Cham. https://doi.org/10.1007/978-3-319-43389-9

Nielsen MA, Chuang IL (2001) Quantum Computation and Quantum Information vol. 2. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511976667

Wang Q, Ma Y, Zhao K, Tian Y (2022) A Comprehensive Survey of Loss Functions in Machine Learning. Annals Data Sci 9(2):187–212. https://doi.org/10.1007/s40745-020-00253-5

Huber PJ (1964) Robust Estimation of a Location Parameter. Ann Math Stat 35(1):73–101. https://doi.org/10.1214/aoms/1177703732

Qi H, Xiao S, Liu Z, Gong C, Gani A (2024) Variational quantum algorithms: fundamental concepts, applications and challenges. Quantum Inf Process 23(6):224. https://doi.org/10.1007/s11128-024-04438-2

Tourret D, Liu H, LLorca J (2022) Phase-field modeling of microstructure evolution: Recent applications, perspectives and challenges. Prog Mater Sci 123:100810. https://doi.org/10.1016/j.pmatsci.2021.100810

Biner SB (2017) Programming Phase-Field Modeling. Springer, Cham. https://doi.org/10.1007/978-3-319-41196-5

Dantzig JA, Rappaz M (2009) Solidification. Engineering sciences. EPFL press CRC press, Lausanne (Suisse) Boca Raton (Fla.)

Kobayashi R (1993) Modeling and numerical simulations of dendritic crystal growth. Physica D 63(3–4):410–423. https://doi.org/10.1016/0167-2789(93)90120-P

Chen T, Guestrin C (2016) XGBoost: A scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’16, pp. 785–794. ACM, New York, NY, USA. https://doi.org/10.1145/2939672.2939785

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B et al (2011) Scikit-learn: Machine learning in python. J Mach Learn Res 12:2825–2830

Bergholm V, Izaac J, Schuld M, Gogolin C, Ahmed S et al (2022) PennyLane: Automatic differentiation of hybrid quantum-classical computations. arXiv. https://doi.org/10.48550/arXiv.1811.04968

Xanadu (2024) Interfaces – PennyLane Documentation. https://docs.pennylane.ai/en/stable/introduction/interfaces.html

Telahun M (2020) Exploring information for quantum machine learning models. Master’s thesis, University of Louisville, Computer Engineering and Computer Science. https://doi.org/10.18297/etd/3433

Wootters WK, Zurek WH (2009) The no-cloning theorem. Phys Today 62(2):76–77. https://doi.org/10.1063/1.3086114

Acknowledgements

This work has received funding from the Basque Government through the ELKARTEK programme, project "KUBIT - Kuantikaren Berrikuntzarako Ikasketa Teknologikoa" (KK-2024/00105).

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This work has received funding from the Basque Government through the ELKARTEK programme, project "KUBIT - Kuantikaren Berrikuntzarako Ikasketa Teknologikoa" (KK-2024/00105).

Author information

Authors and Affiliations

Contributions

All authors contributed to the investigation, data curation, formal analysis, software developing, and writing of the original draft. Methodology and validation were performed by Eider Garate, Naroa Martin, and Meritxell Gómez. Conceptualization, supervision, and writing—review and editing were done by Meritxell Gómez and Eider Garate.

Corresponding author

Ethics declarations

Conflict of interest

There are no conflict of interest.

Ethics approval and consent to participate.

The research meets all ethical guidelines, including adherence to the legal requirements of the study country.

Consent for publication.

All authors have approved the manuscript and consent to its submission for publication.

Materials availability

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eider, GP., Meritxell, GO., Jon, L. et al. Quantum surrogate modelling to solve phase field simulation problems in metal solidification. J Supercomput 81, 656 (2025). https://doi.org/10.1007/s11227-025-07032-0

Accepted:

Published:

DOI: https://doi.org/10.1007/s11227-025-07032-0