Abstract

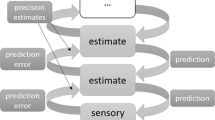

The so-called “dark room problem” makes vivid the challenges that purely predictive models face in accounting for motivation. I argue that the problem is a serious one. Proposals for solving the dark room problem via predictive coding architectures are either empirically inadequate or computationally intractable. The Free Energy principle might avoid the problem, but only at the cost of setting itself up as a highly idealized model, which is then literally false to the world. I draw at least one optimistic conclusion, however. Real-world, real-time systems may embody motivational states in a variety of ways consistent with idealized principles like FEP, including ways that are intuitively embodied and extended. This may allow predictive coding theorists to reconcile their account with embodied principles, even if it ultimately undermines loftier ambitions.

Notes

Note that ‘equilibrium’ can mean two things: thermodynamic equilibrium and local equilibrium with the environment. All organisms attempt to maintain local equilibrium with their environment in order that they may avoid pure thermodynamic equilibrium. Following Friston’s usage in this quote, I will use ‘equilibrium’ to mean local equilibrium with the environment.

The original formulation was suggested by Mumford (1992) (though as an observation, not an objection): “In some sense, this is the state that the cortex is striving to achieve: perfect prediction of the world, like the oriental Nirvana...when nothing surprises you and new stimuli cause the merest ripple in your consciousness” (p. 247; fn. 5).

Compare the old proverb: “If you give a man a fire, you warm him for a day. If you set a man on fire, you warm him for the rest of his life.”

I will assume that the organism must ultimately model adaptive states, though on most stories this gets cashed out in moment-to-moment predictions of sensory input (both exteroceptive and interoceptive). I will assume, as most authors do, that the point of predicting sensory states is to support models of the state of the world. Further, trying to spell out the adaptive states directly in terms of the sensory states themselves only adds a layer of complication to a story that I will argue is already unreasonably complicated. I don’t want to stack the deck too much.

That would solve another problem for prediction in terms of states. On most versions of PC, “...perceptual content is the predictions of the currently best hypothesis about the world” (Hohwy 2013, p. 48). As Wolfgang Schwartz points out (personal communication), if we took this literally it would mean that we act because we hallucinate the goal state and thereby move towards it. That is absurd. An action-based view, by contrast, lets the organism veridically model both the problem and progress towards the solution.

Compare: universal Turing machines can also be made very simple in the sense of having relatively few states and symbols. Further, such simple machines are completely universal in the sense that the same process does whatever can be done. But that comes at the cost of considerable complexity in coding the inputs (Minsky 1967, §14.8). As such, the simplicity and universality of minimal Turing machines do not constitute an argument in their favor as plausible architectures of mind. (There are, of course, many other considerations against TM architectures.)

An anonymous reviewer suggested that Friston simply uses ‘existence’ and ‘being well-adapted’ as synonyms. Perhaps so, but they are not synonyms, precisely because there must be variation in fitness to drive natural selection. Further, a change in environment can make an existing organism poorly adapted (by, for example, rendering it sterile) without changing whether or not it continues to exist. Finally, evolution and adaptation require organisms to do more than exist: they must also reproduce. Replication is fundamental to Darwinian theory (Godfrey-Smith 2009). Yet FEP says very little, as far as I know, about that more specific requirement.

General principles about optimal function may also offer guidance on the boundaries and constraints on such organisms. These constraints are likely to be very broad, and so very easy to satisfy. Friston, for example, notes that “the free-energy bound on surprise tells us that adaptive agents must perform some sort of recognition or perceptual inference” (Friston et al. 2012b, p. 6). Undoubtedly true, but not particularly controversial. Leibniz was probably the last major philosopher to deny that adaptive action required causal contact with the external world. Few were convinced.

References

Anderson, M. L., & Chemero, T. (2013). The problem with brain GUTs: Conflation of different senses of ‘prediction’ threatens metaphysical disaster. Behavioral and Brain Sciences, 36(3), 204–205.

Anscombe, G. E. M. (1957). Intention. Cambridge: Harvard University Press.

Ashby, W. R. (1956). An introduction to cybernetics. London: Chapman & Hall Ltd.

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences, 36(3), 181–253.

Clark, A. (2015). Surfing uncertainty: Prediction, action, and the embodied mind. New York: Oxford University Press.

Conant, R. C., & Ashby, W. R. (1970). Every good regulator of a system must be a model of that system. International Journal of Systems Science, 1(2), 89–97.

Craver, C. (2007). Explaining the brain. New York: Oxford University Press.

Dahlberg, M. D. (1979). A review of survival rates of fish eggs and larvae in relation to impact assessments. Marine Fisheries Review, 41(3), 1–12.

Fabiani, A., Galimberti, F., Sanvito, S., & Hoelzel, A. R. (2004). Extreme polygyny among southern elephant seals on Sea Lion Island, Falkland Islands. Behavioral Ecology, 15(6), 961–969.

Feldman, J. (2013). Tuning your priors to the world. Topics in Cognitive Science, 5(1), 13–34.

Friston, K. (2010). The free-energy principle: A unified brain theory? Nature Reviews Neuroscience, 11(2), 127–138.

Friston, K. (2013). Active inference and free energy. Behavioral and Brain Sciences, 36(3), 212–213.

Friston, K., Samothrakis, S., & Montague, R. (2012a). Active inference and agency: optimal control without cost functions. Biological Cybernetics, 106(8–9), 523–541.

Friston, K., Thornton, C., & Clark, A. (2012b). Free-energy minimization and the dark-room problem. Frontiers in Psychology, 3, 1–7.

Gawande, A. (2010). The checklist manifesto: How to get things right. New York: Henry Holt and Company.

Godfrey-Smith, P. (2009). Darwinian populations and natural selection. New York: Oxford University Press.

Griffiths, P. E., & Gray, R. D. (1994). Developmental systems and evolutionary explanation. The Journal of Philosophy, 91(6), 277–304.

Hohwy, J. (2013). The predictive mind. New York: Oxford University Press.

Huang, Y., & Rao, R. P. (2011). Predictive coding. Wiley Interdisciplinary Reviews: Cognitive Science, 2(5), 580–593.

Huebner, B. (2012). Surprisal and valuation in the predictive brain. Frontiers in Psychology, 3(415), 1–2.

Klein, C. (2008). An ideal solution to disputes about multiply realized kinds. Philosophical Studies, 140(2), 161–177.

Klein, C. (2015). What the body commands: The imperative theory of pain. Cambridge, MA: MIT Press.

Larson, E. (2004). The Devil in the White City. New York: Vintage Books.

McMullin, E. (1985). Galilean idealization. Studies in the History and Philosophy of Science, 16(3), 247–273.

Melzack, R. (1999). From the gate to the neuromatrix. Pain, 82, S121–S126.

Melzack, R., & Wall, P. (1965). Pain mechanisms: A new theory. Science, 150(3699), 971–979.

Minsky, M. L. (1967). Computation: Finite and infinite machines. Englewood Cliffs, NJ: Prentice-Hall Inc.

Mumford, D. (1992). On the computational architecture of the neocortex. Biological Cybernetics, 66(3), 241–251.

Putnam, H. (1967/1991). The nature of mental states. In D. M. Rosenthal (Ed.), The nature of mind (pp. 197–210). New York: Oxford University Press.

Rao, R. P., & Ballard, D. H. (1999). Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience, 2(1), 79–87.

Seth, A. K. (2014a). The cybernetic Bayesian brain: From interoceptive inference to sensorimotor contingencies. In T. K. Metzinger & J. M. Windt (Eds.), Open mind. Frankfurt am Main: MIND Group.

Seth, A. K. (2014b). Response to Gu and FitzGerald: Interoceptive inference: From decision-making to organism integrity. Trends in Cognitive Sciences, 18(6), 270–271.

Simon, H. A. (1996). The sciences of the artificial (3rd ed.). Cambridge: MIT Press.

Sterelny, K., & Griffiths, P. E. (1999). Sex and death. Chicago: University of Chicago Press.

Woodward, J. (2003). Making things happen. New York: Oxford University Press.

Acknowledgments

Research on this work was funded by Australian Research Council Grant FT140100422. For helpful discussions, thanks to Esther Klein, Julia Staffel, Wolfgang Schwartz, the ANU 2013 reading group on predictive coding, and participants at the 2015 CAVE “Predictive Coding, Delusions, and Agency” workshop at Macquarie University. For feedback on earlier drafts of this work, additional thanks to Peter Clutton, Jakob Hohwy, Max Coltheart, Michael Kirchhoff, Bryce Huebner, Luke Roelofs, Daniel Stoljar, two anonymous referees, the ANU Philosophy of Mind work in progress group, and an audience at the “Predictive Brains and Embodied, Enactive Cognition” workshop at the University of Wollongong.

Cite this article

Klein, C. What do predictive coders want? Synthese 195, 2541–2557 (2018). https://doi.org/10.1007/s11229-016-1250-6

DOI: https://doi.org/10.1007/s11229-016-1250-6