Abstract

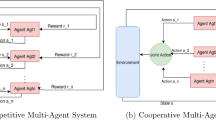

Packet scheduling in routers plays an important role in achieving QoS differentiation and in optimizing queuing delay, particularly when the optimization is carried out on every router along the path between source and destination. In a dynamically changing environment, a good scheduling discipline should also adapt to new traffic conditions. We model this problem as a multi-agent system in which each agent learns through continual interaction with the environment in order to optimize its own behaviour. We therefore adopt the framework of Markov decision processes applied to multi-agent systems and present a pheromone-Q learning approach, which combines the Q-multi-learning technique with a synthetic pheromone that acts as a communication medium and speeds up the learning process of cooperating agents.
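
To make the idea of combining Q-learning with a synthetic pheromone more concrete, the following is a minimal Python sketch and not the article's implementation. It assumes a tabular Q-learner whose bootstrap target is biased by a pheromone table shared among cooperating agents. The class name `PheromoneQAgent`, the helper `evaporate`, the weight `xi`, the state-only belief factor, and all parameter values are hypothetical choices made for illustration.

```python
from collections import defaultdict


class PheromoneQAgent:
    """Tabular Q-learner whose update target is biased by a shared pheromone table.

    Illustrative assumption: the belief factor depends on the next state only
    and is normalised by the strongest pheromone level currently recorded.
    """

    def __init__(self, actions, pheromone, alpha=0.1, gamma=0.9, xi=0.5):
        self.actions = list(actions)
        self.pheromone = pheromone   # shared dict: state -> pheromone level
        self.alpha = alpha           # learning rate
        self.gamma = gamma           # discount factor
        self.xi = xi                 # weight of the pheromone term (assumed)
        self.q = defaultdict(float)  # (state, action) -> estimated value

    def belief(self, state):
        """Pheromone at `state` relative to the strongest trail, in [0, 1]."""
        peak = max(self.pheromone.values(), default=0.0)
        return self.pheromone.get(state, 0.0) / peak if peak > 0 else 0.0

    def update(self, state, action, reward, next_state):
        """One Q-learning step with a pheromone bonus added to the target."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        bonus = self.xi * self.belief(next_state)
        target = reward + self.gamma * (best_next + bonus)
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])

    def deposit(self, state, amount=1.0):
        """Mark `state` so that cooperating agents can see it was useful."""
        self.pheromone[state] = self.pheromone.get(state, 0.0) + amount


def evaporate(pheromone, rate=0.1):
    """Decay every pheromone level so that stale information fades."""
    for state in pheromone:
        pheromone[state] *= 1.0 - rate
```

In a scheduling setting, one might let a state encode the queue occupancy at a router and an action select the queue to serve next; that mapping is likewise an assumption of this sketch. Because the pheromone table is shared, a deposit made by one agent changes the learning target of the others, which is the communication effect the abstract describes.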


Cite this article

Bourenane, M., Mellouk, A. & Benhamamouch, D. State-dependent packet scheduling for QoS routing in a dynamically changing environment. Telecommun Syst 42, 249 (2009). https://doi.org/10.1007/s11235-009-9184-7
