Abstract

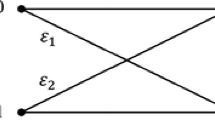

Information-theoretic analysis of the communication systems performances is of practical and theoretical importance. In this paper, (i) Specifically, we generalize the Cover–Chiang work on point to point channel with two-sided state information to two-receiver less noisy broadcast channel (2R-LN-BC) with different side information non-causally known at the encoder as well as the decoders, and obtain the capacity region including the previous works as its special cases. Then, (ii) the obtained result is expanded to the continuous alphabet Gaussian version. In this Gaussian version, the side information available at the receivers are supposed to be correlated on the channel noises and the impact of these receivers cognition of the noises on the capacity region is analyzed.

Similar content being viewed by others

References

Cover, T. (1972). Broadcast channels. IEEE Transactions on Information Theory, 18(1), 2–14.

Marton, K. (1979). A coding theorem for the discrete memoryless broadcast channel. IEEE Transactions on Information Theory, 25(3), 306–311.

Gamal, A. E. (1979). The capacity of a class of broadcast channels. IEEE Transactions on Information Theory, 25(2), 166–169.

Bergmans, P. (1973). Random coding theorem for broadcast channels with degraded components. IEEE Transactions on Information Theory, 19(2), 197–207.

Gallager, R. G. (1974). Capacity and coding for degraded broadcast channels. Problemy Peredachi Informatsii, 10(3), 3–14.

Ahlswede, R., & Körner, J. (1975). Source encoding with side information and a converse for degraded broadcast channels. IEEE Transactions on Information Theory, 21(6), 629–637.

Korner, J., & Marton, K. (1977). General broadcast channels with degraded message sets. IEEE Transactions on Information Theory, 23(1), 60–64.

Csiszár, I., & Korner, J. (1978). Broadcast channels with confidential messages. IEEE Transactions on Information Theory, 24(3), 339–348.

Shannon, C. E. (1958). Channels with side information at the transmitter. IBM journal of Research and Development, 2(4), 289–293.

Gel’fand, S. I., & Pinsker, M. S. (1980). Coding for Channels with Random Parameters. Problems of Control and Information Theory, 9(1), 19–31.

Cover, T. M., & Chiang, M. (2002). Duality between channel capacity and rate distortion with two-sided state information. IEEE Transactions on Information Theory, 48(6), 1629–1638.

Costa, M. (1983). Writing on dirty paper (corresp.). IEEE Transactions on Information Theory, 29(3), 439–441.

Anzabi-Nezhad, N. S., Hodtani, G. A., & Kakhki, M. M. (2012). Information theoretic exemplification of the receiver re-cognition and a more general version for the costa theorem. IEEE Communications Letters, 17(1), 107–110.

Anzabi-Nezhad, N. S., Hodtani, G. A. & Kakhki, M. M. (2015). A new and more general capacity theorem for the gaussian channel with two-sided input-noise dependent state information. arXiv preprint arXiv:1507.04924.

Kim, Y.-H., Sutivong, A. & Sigurjonsson, S. (2004) Multiple user writing on dirty paper. In International symposium on information theory, 2004. ISIT 2004. Proceedings., p. 534. IEEE.

Steinberg, Y. (2005). Coding for the degraded broadcast channel with random parameters, with causal and noncausal side information. IEEE Transactions on Information Theory, 51(8), 2867–2877.

Steinberg, Y., & Shamai, S. (2005). Achievable rates for the broadcast channel with states known at the transmitter. In Information theory, 2005. Proceedings international symposium on ISIT 2005, pp. 2184–2188. IEEE.

Khosravi-Farsani, R., & Marvasti, F. (2011). Capacity bounds for multiuser channels with non-causal channel state information at the transmitters. In Information theory workshop (ITW), 2011 IEEE (pp. 195–199). IEEE.

Ghabeli, L. (2021) The inner and outer bounds on the capacity of the 3‐user GBC‐SKT with unequal state variances. IET Communications, 1–11.

Hajizadeh, S., & Hodtani, G. A. (2012). Three-receiver broadcast channels with side information. In 2012 IEEE International Symposium on Information Theory Proceedings (pp. 393–397). IEEE.

Bahrami, S., & Hodtani, G. A. (2015). On capacity region of certain classes of three-receiver broadcast channels with side information. IET Communications, 9(6), 795–807.

del Aguila, F. R., Imran, M. A. & Tafazolli, R. (2013). On the three-receiver multilevel broadcast channel with random parameters. In SCC 2013; 9th international ITG conference on systems, communication and coding, pp. 1–6. VDE.

Mao, Y., & Clerckx, B. (2020). Beyond dirty paper coding for multi-antenna broadcast channel with partial CSIT: A rate-splitting approach. IEEE Transactions on Communications, 68(11), 6775–6791.

Pakravan, S. & Hodtani, G. A. (2020). Capacity region for wireless more capable broadcast channel with channel state available at the receivers. In 2020 28th Iranian conference on electrical engineering (ICEE) (pp. 1–5), IEEE.

de Kerret, P., Gesbert, D., Zhang, J., & Elia, P. (2020). Optimal DoF of the K-user broadcast channel with delayed and imperfect current CSIT. IEEE Transactions on Information Theory, 66(11), 7056–7066.

Pakravan, S., & Hodtani, G. A. (2022). Analysis of side information impact on the coverage region of wireless wiretap channel. Wireless Personal Communications, 126(4), 3253–3268.

Lin, S.-C., Wang, I.-H., & Vahid, A. (2021). Capacity of broadcast packet erasure channels with single-user delayed CSI. IEEE Transactions on Information Theory, 67(10), 6283–6295.

Pakravan, S., & Hodtani, G. A. (2022). Analysis of side information impact on the physical layer security performances in wireless wiretap channel. Transactions on Emerging Telecommunications Technologies, 33(1), e4401.

Yao, Y., & Jafar, S. A. (2022). Capacity of 3-user linear computation broadcast over F q with 1D demand and side-information. In 2022 IEEE International Symposium on Information Theory (ISIT), (pp. 1151–1156), IEEE.

Pakravan, S., & Hodtani, G. A. (2020). Semi-deterministic broadcast channel with side information: A secrecy capacity outer bound. In 2020 10th international conference on computer and knowledge engineering (ICCKE) (pp. 245–249), IEEE.

El-Gamal, A., & Kim, Y.-H. (2011). Network information theory. Cambridge University Press.

Hodtani, G. A. (2015). The effect of transceiver recognition on the Gaussian channel capacity. In 2015 Iran workshop on communication and information theory (IWCIT), (pp. 1–3). IEEE.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Proof of Theorem 1

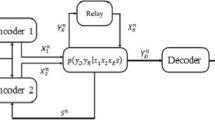

Achievability: The coding scheme used to achieve this capacity region is combines of superposition and GP coding. Fix \(n\) and a joint distribution on the set of all RVs \(\left( {U, V, X, S_{1} , S_{2} , Y_{1} , Y_{2} } \right)\) with finite alphabets so that \(p\left( {u, v, x, s_{1} , s_{2} , y_{1} , y_{2} } \right) = p\left( {s_{1} , s_{2} } \right)p\left( {u\left| {s_{1} } \right.} \right)p(v\left| {u, s_{1} )} \right.p(x\left| u \right.,v,s_{1} )p(y_{1} , y_{2} \left| {x,s_{1} , s_{2} )} \right.a\). Assume SI \((S_{1i} , S_{2i} )\) are distributed i.i.d.\(\sim p\left( {s_{1} , s_{2} } \right), i = 1, 2, \ldots\).

Codebook generation: For each message \(m_{2}\), \(m_{2} \in \left\{ {1, \ldots ,2^{{nR_{2} }} } \right\}\), we generate a bin including of \(2^{{nR_{2}^{\prime} }}\) sequences \(u^{n} \left( {m_{2} , m_{2}^{\prime} } \right)\), \(m_{2}^{\prime} \in \left\{ {1, \ldots ,2^{{nR_{2}^{\prime} }} } \right\}\), that each one are i.i.d. presume to \(\prod\nolimits_{i = 1}^{n} {p_{U} } \left( {u_{i} } \right)\). Then for each \(u^{n} \left( {m_{2} , m_{2}^{\prime} } \right)\), generate randomly and independently \(2^{{n\left( {R_{2} + R_{2}^{\prime} } \right)}}\) sequences \(v^{n} \left( {m_{2} , m_{2}^{\prime} , m_{1} , m_{1}^{\prime} } \right)\), \(m_{1} \in \left\{ {1, \ldots ,2^{{nR_{1} }} } \right\}\), \(m_{1}^{\prime} \in \left\{ {1, \ldots ,2^{{nR_{1}^{\prime} }} } \right\}\), each one i.i.d. presume to \(\prod\nolimits_{i = 1}^{n} {p_{V\left| U \right.} } \left( {v_{i} \left| {u_{i} \left( {m_{2} , m_{2}^{\prime} } \right)} \right.} \right)\). Bins and their codewords have been assumed for the transmitter and all of the receivers.

Encoding: The messages are bin indices, and we have the message pair \((m_{1} , m_{2} )\) and the SI \(s_{1}^{n}\) in encoder and \(s_{2}^{n}\) in all of receivers. Now in the bin \(m_{2}\) of \(u^{n}\) sequences search a \(m_{2}^{\prime}\) so that the sequence \(u^{n} \left( {m_{2} , m_{2}^{\prime} } \right)\) be jointly typical with the given \(s_{1}^{n}\). Then in the bin \(m_{1}\) of \(v^{n}\) sequences search a \(m_{1}^{\prime}\) so that \(\left( {u^{n} \left( {m_{2} , m_{2}^{\prime} } \right), v^{n} \left( {m_{2} , m_{2}^{\prime} , m_{1} , m_{1}^{\prime} } \right)} \right)\) be jointly typical with the given \(s_{1}^{n}\). At the end, we transmit \(x^{n} \left( {u^{n} , v^{n} , s_{1}^{n} } \right)\) which is generated according to \(\prod\nolimits_{i = 1}^{n} {p_{{X\left| {UVS_{1} } \right.}} } \left( {x_{i} \left| {u_{i} } \right., v_{i} , s_{1i} } \right)\). According to covering lemma in [8], encoding with small error probability will be successful if we have the following inequalities

Decoding: The valid indices are found via the encoding method, that is, \(m_{1}^{\prime} = 1\) and \(m_{2}^{\prime} = 1\). The messages are uniformly distributed, so without losing the whole, suppose that \((m_{1} , m_{2} ) = \left( {1, 1} \right)\) is forward. For the second receiver \(Y_{2}\) having \(y_{2}^{n}\) and \(s_{2}^{n}\), exists the following significant error

Moreover, for receiver \(Y_{1}\), exists the following significant error

and

So, according to packing lemma, decoding is successful, and we don't have decoding error if we have

Now by using Fourier-Motzkin procedure, achievability part for theorem can be concluded.

Converse: To prove the converse section, we need a generalization of Lemma 1 in [7] suitable for the BC with SI.

Lemma 1

Let the channel from \(X\) to \(Y_{1}\) be less noisy than the channel from \(X\) to \(Y_{2}\) in existence of SI. Presume \(\left( {M,S^{n} } \right)\) to be any RV so that.

form a Markov chain, then we have

where \(1 \le i \le n\).

Proof

The proof of this lemma is like the proof of Lemma 1 in [7] with negligible change and is relinquished.

We begin to prove the converse section. Consider \(M_{1}\) and \(M_{2}\) be RVs related to our messages. Now, we have

where since RV \(M_{2}\) is independent of \(S_{1}^{n}\) and \(S_{2}^{n}\), equality (26) is clear. The inequality (28) elicitations from the Fano’s inequality. Equation (33) Elicitations from Lemma 1 and (37) elicitations from Csiszar Korner identity. According to Csiszar–Korner identity, the 5th and 8th ensembles on the (36) are equal. We use Lemma 2 which shows in below (a version of Csiszar–Korner identity modified for our scenarios) to justify this result.

Lemma 2

We have the following identity.

Proof

The proof of this lemma is like the proof of lemma 7 in [8]. Since \(I\left( {K,S_{1,i + 1}^{n} ;S_{2i} \left| {T,S_{2}^{i - 1} } \right.} \right) = \sum\nolimits_{j = i + !}^{n} {I\left( {K,S_{1j} ;S_{2i} \left| {T,S_{2}^{i - 1} } \right.,S_{1}^{j + 1} } \right)} ,\) and \(I\left( {K,S_{2}^{i - 1} ;S_{1i} \left| {T,S_{1,i + 1}^{n} } \right.} \right) = \sum\nolimits_{j = 1}^{i - 1} {I\left( {K,S_{2j} ;S_{1i} \left| {T,S_{1,i + 1}^{n} } \right.,S_{2}^{j - 1} } \right)}\), we see that the left-hand side and the right-hand side of Eq. (36) split into terms of the form \(I\left( {K,S_{2i} ;S_{1j} \left| {T,S_{1}^{j + 1} } \right.,S_{2}^{i - 1} } \right)\) which \(i < j\).

Finally, last equality follows from the definition of the auxiliary random variable \(U_{i} \triangleq \left( {M_{2} ,Y_{1}^{i - 1} ,S_{2}^{i - 1} ,S_{2, i + 1}^{n} ,S_{1,i + 1}^{n} } \right)\). In the following, \(R_{1}\) can be bounded as follows:

where the inequality (43) follows from the Fano’s inequality. Since \(S_{1}^{n}\) is independent of random variables \(M_{1}\) and \(M_{2}\), (44) is clear. The equality (49) follows from Csiszar Korner identity and last equalization elicitation s from the explanation of the auxiliary RV \(V_{i} \triangleq \left( {M_{1} ,M_{2} ,Y_{1}^{i - 1} ,S_{2}^{i - 1} ,S_{2,i + 1}^{n} ,S_{1,i + 1}^{n} } \right)\).

In relations (39) and (51),\(\epsilon_{in}\) for \(\left\{ {i = 1, 2} \right\}\) tends to zero as \(n \to \infty\). Now, via the standard time-sharing outline for these relations capacity region in Theorem 1 is obtained.□

Appendix B

Proof of Theorem 2

To derive the capacity region for this Gaussian channel, we use the capacity region gained in Theorem 1. Split the channel input to 2-independent sections, i.e. \(X_{1} \sim {\mathcal{N}}\left( {0, \beta P} \right)\) and \(X_{2} \sim {\mathcal{N}}\left( {0, \left( {1 - \beta } \right)P} \right)\) so that \(X = X_{1} + X_{2}\), with \(\beta \in \left[ {0,1} \right]\). Now, for various receivers we have

We see, input to channel with smaller noise is themselves considered as noisier channel and input to channel with more noise is considered as interference for channel with smaller noise due to cancellation decoding. However, first, we define appropriate signaling according to the distribution in Theorem 1\(p\left( {u, v, x, s_{1} , s_{2} , y_{1} , y_{2} } \right) = p\left( {s_{1} , s_{2} } \right)p(u\left| {s_{1} )} \right.p\left( {v\left| {u,} \right.s_{1} } \right)p(x\left| u \right.,v,s_{1} )p(y_{1} , y_{2} \left| {x,s_{1} , s_{2} )} \right.\) and considering Gaussian inputs, the optimized auxiliary RVs in each discrete channel can be defined as follows:

where \(S_{i} \sim {\mathcal{N}}\left( {0, Q_{i} } \right)\) and \(Z_{i} \sim {\mathcal{N}}\left( {0, N_{i} } \right)\). These RVs are used to derive the capacity region in Theorem 1 utilizing standard procedures. Also, we consider \(E\left[ {S_{2} Z_{1} } \right] = \rho_{{S_{2} Z_{1} }} \sqrt {Q_{2} N_{1} }\) and \(E\left[ {S_{2} Z_{2} } \right] = \rho_{{S_{2} Z_{2} }} \sqrt {Q_{2} N_{2} }\). Therefore, with these definitions, the amount of \(I\left( {U;Y_{2} ,S_{2} } \right)\), \(I\left( {U;S_{1} } \right)\), \(I(V;Y_{1} ,S_{2} \left| {U)} \right.\), and \(I(V;S_{1} \left| {U)} \right.\) can be easily evaluated as follows:

and

Now, we demonstrate an achievable rate region for the channel. Afterwards, we obtain an upper bound for the capacity region of the channel that meets the obtained lower bound. Hence, it can be concluded the capacity region for the mentioned channel has been gained.

Now, via the deployment of Cover–Chiang capacity theorem determined in (1) for RVs with continuous alphabets that has been established in the similar way as the GP theorem with discrete time and discrete alphabets expands to discrete time and continuous alphabets in Costa theorem [12], [32, p. 184], and results achieved in theorem 1 (that maximum is over all \(\left( {u, v, x, s_{1} , s_{2} , y_{1} , y_{2} } \right)\)’s in \({\mathcal{P}}_{c}\)), since \({\mathcal{P}}_{c}^{\prime} \subseteq {\mathcal{P}}_{c}\), we define

and

Thus, we have

and

that \(R_{2} \left( {\alpha_{2}^{*} } \right)\) and \(R_{1} \left( {\alpha_{1}^{*} } \right)\) are lower bounds for the capacity region. The expressions in (62) and (63) are calculated for distributions \(p^{\prime}\left( {u, v, x, s_{1} , s_{2} , y_{1} , y_{2} } \right)\) in \({\mathcal{P}}_{c} ^{\prime}\) that has been described in above. By substituting (56)–(59) in (60) and (61), we obtain \(R_{2} \left( {\alpha_{2} } \right)\) and \(R_{1} \left( {\alpha_{1} } \right)\) as follows:

and

The optimum value of \(\alpha_{2}\) and \(\alpha_{1}\) corresponding to maximum of.

\(R_{2} \left( {\alpha_{2} } \right)\) and \(R_{1} \left( {\alpha_{1} } \right)\) are easily obtained as:

and

Substituting from (66) and (67) into (64) and (65) and using the Eqs. (62) and (63), finally, it can be concluded that lower bound of capacity region equals to (51). The calculation details have been removed for contraction.

Converse: For all distributions \(p\left( {u, v, x, s_{1} , s_{2} , y_{1} , y_{2} } \right)\) defined in the previous section, we have:

and

where (69) and (73) elicitations from this reality that conditioning lessens entropy and (70) and (74) follows from Markov chain \(S_{2} \to S_{1} \to UX\). So, (71) and (75) are upper bounds for the capacity region of the channel. These inequalities are satisfied for any distributions defined in the previous sections. To compute (71) we write:

The maximum value of (71) is achieved when \(\left( {X,S_{1} ,S_{2} } \right)\) are jointly Gaussian and \(X\) has its maximum variance \(P\). Hence, after the calculation, we will have

and

Thus, by substituting (80)–(83) in (79), we have:

Similarly, to compute (75) we write:

The maximum value of (75) is achieved when \(\left( {X,S_{1} ,S_{2} } \right)\) are jointly Gaussian and \(X\) has its maximum variance \(P\). Similarly, after the calculation, we will have

and

Thus, by substituting (83), (88), (89) and (90) in (87), we have:

Since there exists an adaptation between the upper and the lower bounds gained of the capacity region of the channel, the proof of this theorem is completed.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pakravan, S., Hodtani, G.A. An extension of Cover–Chiang work on point to point channel to less noisy broadcast channel and analysis of the receivers cognition impacts. Telecommun Syst 84, 507–519 (2023). https://doi.org/10.1007/s11235-023-01056-8

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11235-023-01056-8