Abstract

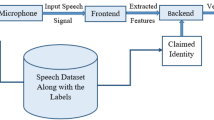

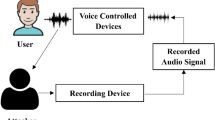

Like other biometric systems, Automatic Speaker Verification (ASV) systems are vulnerable to spoofing attacks, so it is important to develop countermeasures against them. Two main types of spoofing attack are considered: logical access attacks and presentation attacks. Over the last few decades, researchers have proposed several systems for handling these attacks. However, the noise handling capability of ASV systems remains a major concern, because noise can cause an ASV system to misclassify genuine human speech as spoofed audio. The main objective of this paper is therefore to review and analyze the noise robust ASV systems proposed in recent years. The paper discusses the front-end and back-end approaches used to develop these systems, with emphasis on noise handling techniques. Various kinds of noise, such as babble, white, background, pop, and channel noise, affect the development of an ASV system. The survey begins by discussing the components of an ASV system. It then classifies and discusses enhanced front-end feature extraction techniques, including phase-based, magnitude-based, and deep learning-based techniques, that have proven robust to noise. Next, it highlights the deep learning and other baseline models used in the back end to classify audio correctly. Finally, it highlights the challenges and open issues in noise handling and detection that remain when developing noise robust ASV systems. The survey indicates that the noise robustness of ASV systems is still a challenging issue, and researchers should therefore consider robustness against noise alongside robustness against spoofing attacks.

Data availability

Data sharing and code availability are not applicable to this article, as no datasets were generated during the current study. This study did not use any individual's data or images.

Abbreviations

- MFCC: Mel frequency cepstral coefficients
- LPCC: Linear predictive cepstral coefficients
- PLP: Perceptual linear prediction
- GMM: Gaussian mixture models
- UBM: Universal background model
- SVM: Support vector machine
- PLDA: Probabilistic linear discriminant analysis
- MCEP: Mel-cepstral coefficients
- EER: Equal error rate
- FAR: False acceptance rate
- FRR: False rejection rate
- FFT: Fast Fourier transform
- CNPCC: Cosine normalized phase-based cepstral coefficients
- LPRC: LP residual cepstral coefficients
- DNN: Deep neural network
- VAD: Voice activity detection
- STFT: Short-time Fourier transform
- LMS: Log magnitude spectrum
- RLMS: Residual log magnitude spectrum
- IF: Instantaneous frequency derivative
- BPD: Baseband phase difference
- GD: Group delay
- MGD: Modified group delay
- MLP: Multi-layer perceptron
- SCMC: Subband spectral centroid magnitude
- MHEC: Mean Hilbert envelope coefficient
- RPS: Relative phase shift
- DBN: Deep belief network
- LFCC: Linear frequency cepstral coefficients
- IIR-CQT: Infinite impulse response constant-Q transform
- CQCC: Constant-Q cepstral coefficients
- IMFCC: Inverse Mel frequency cepstral coefficients
- CNN: Convolutional neural network
- RNN: Recurrent neural network
- LSTM: Long short-term memory
- HMM: Hidden Markov model
- STCC: Short-term cepstral coefficients
- CMVN: Cepstral mean variance normalization
- MSRCC: Magnitude-based spectral root cepstral coefficients
- PSRCC: Phase-based spectral root cepstral coefficients
- CFCC-IF: Cochlear filter cepstral coefficient instantaneous frequency
- CLDNN: Convolutional LSTM neural network
- HFCC: High frequency cepstral coefficients
- LCNN: Light convolutional neural network
- GRCNN: Gated recurrent convolutional neural networks
- LDA: Linear discriminant analysis
- CGAN: Conditional generative adversarial networks
- TECC: Teager energy cepstral coefficients
- ResNet: Residual network
- TDNN: Time-delayed neural network
- DCF: Detection cost function
- IMF: Intrinsic mode functions
- HTER: Half total equal error rate
- SDER: Spoofing detection error rate
- EMD: Empirical mode decomposition
- FBCC: Filter-based cepstral coefficient


Funding

This study did not receive funding from any source.

Author information

Contributions

Both authors contributed equally to preparing the manuscript. This study is the authors' own original work and has not been published elsewhere. Both authors implemented the proposed idea. Sanil Joshi wrote the manuscript, including the tables and figures, and Dr Mohit Dua reviewed it.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest regarding this manuscript.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Joshi, S., Dua, M. Noise robust automatic speaker verification systems: review and analysis. Telecommun Syst 87, 845–886 (2024). https://doi.org/10.1007/s11235-024-01212-8

DOI: https://doi.org/10.1007/s11235-024-01212-8