Abstract

We propose a Bayesian choice model, the Dirichlet–Luce model, for recommender systems that interact with users in a feedback loop. The model is built on a generalization of the Dirichlet distribution and on the assumption that the users of a recommender system choose from a subset of all items, a subset that is systematically presented based on their previous choices. The model allows efficient inference of user preferences. Its Bayesian construction leads to a bandit algorithm, based on Thompson sampling, for online learning to recommend, which achieves low regret measured in terms of the inherent attractiveness of the options included in the recommendations. The combined setup also eliminates some biases that recommender systems are prone to, where popular, promoted, or initially preferred items are overestimated due to overexposure, or items underrepresented in the recommendations are underestimated. Our model has the potential to be reused as a fundamental building block for recommender systems.

References

Abdollahpouri, H.: Popularity bias in ranking and recommendation. In: The 2019 AAAI/ACM Conference on AI, Ethics, and Society, pp. 27–28 (2019). https://doi.org/10.1145/3306618.3314309

Agarwal, A., Wang, X., Li, C., Bendersky, M., Najork, M.: Addressing trust bias for unbiased learning-to-rank. In: The World Wide Web Conference, pp. 4–14 (2019)

Ailon, N., Karnin, Z., Joachims, T.: Reducing dueling bandits to cardinal bandits. In: International Conference on Machine Learning, pp. 856–864 (2014)

Baeza-Yates, R.: Bias on the web. Commun. ACM 61(6), 54–61 (2018)

Baeza-Yates, R.: Bias in search and recommender systems. In: Fourteenth ACM Conference on Recommender Systems, RecSys ’20, p. 2 (2020). https://doi.org/10.1145/3383313.3418435

Balog, M., Tripuraneni, N., Ghahramani, Z., Weller, A.: Lost relatives of the Gumbel trick. In: Proceedings of the 34th International Conference on Machine Learning, ICML’17, vol. 70, pp. 371–379 (2017)

Basilico, J.: Recent trends in personalization: a Netflix perspective. In: ICML 2019 Workshop on Adaptive and Multitask Learning. ICML (2019)

Blei, D.M., Ng, A.Y., Jordan, M.I.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3, 993–1022 (2003)

Bradley, R.A., Terry, M.E.: Rank analysis of incomplete block designs: I the method of paired comparisons. Biometrika 39(3/4), 324–345 (1952)

Busa-Fekete, R., Szorenyi, B., Cheng, W., Weng, P., Hüllermeier, E.: Top-k selection based on adaptive sampling of noisy preferences. In: International Conference on Machine Learning, pp. 1094–1102 (2013)

Busa-Fekete, R., Hüllermeier, E., Szörényi, B.: Preference-based rank elicitation using statistical models: the case of Mallows. In: Proceedings of the 31st International Conference on Machine Learning, vol. 32 (2014)

Cantador, I., Brusilovsky, P., Kuflik, T.: 2nd Workshop on information heterogeneity and fusion in recommender systems (HetRec 2011). In: Proceedings of the 5th ACM Conference on Recommender Systems, RecSys 2011. ACM, New York, NY, USA (2011)

Carlson, B.C.: Appell functions and multiple averages. SIAM J. Math. Anal. 2(3), 420–430 (1971). https://doi.org/10.1137/0502040

Caron, F., Doucet, A.: Efficient Bayesian inference for generalized Bradley–Terry models. J. Comput. Graph. Stat. 21(1), 174–196 (2012)

Carpenter, B., Gelman, A., Hoffman, M.D., Lee, D., Goodrich, B., Betancourt, M., Brubaker, M., Guo, J., Li, P., Riddell, A.: Stan: A probabilistic programming language. J. Stat. Softw. 76(1) (2017)

Çapan, G., Bozal, Ö., Gündoğdu, İ., Cemgil, A.T.: Towards fair personalization by avoiding feedback loops. In: NeurIPS 2019 Workshop on Human-Centric Machine Learning (2019)

Chakrabarti, D., Kumar, R., Radlinski, F., Upfal, E.: Mortal multi-armed bandits. In: Koller, D., Schuurmans, D., Bengio, Y., Bottou, L. (eds) Advances in Neural Information Processing Systems, vol. 21 (2009)

Chaney, A.J.B., Stewart, B.M., Engelhardt, B.E.: How algorithmic confounding in recommendation systems increases homogeneity and decreases utility. In: Proceedings of the 12th ACM Conference on Recommender Systems, pp. 224–232 (2018). https://doi.org/10.1145/3240323.3240370

Chen, J., Dong, H., Wang, X., Feng, F., Wang, M., He, X.: Bias and debias in recommender system: a survey and future directions. arXiv:2010.03240 (2020)

Chopin, N.: A sequential particle filter method for static models. Biometrika 89(3), 539–552 (2002)

Chuklin, A., Markov, I., de Rijke, M.: Click models for web search. Synth. Lect. Inf. Concepts Retr. Serv. 7(3), 1–115 (2015). https://doi.org/10.2200/S00654ED1V01Y201507ICR043

Covington, P., Adams, J., Sargin, E.: Deep neural networks for YouTube recommendations. In: Proceedings of the 10th ACM Conference on Recommender Systems, New York, NY, USA (2016). https://doi.org/10.1145/2959100.2959190

Craswell, N., Zoeter, O., Taylor, M., Ramsey, B.: An experimental comparison of click position-bias models. In: Proceedings of the 2008 International Conference on Web Search and Data Mining, pp. 87–94. ACM (2008)

Davidson, R.R., Solomon, D.L.: A Bayesian approach to paired comparison experimentation. Biometrika 60(3), 477–487 (1973)

Dickey, J.M.: Multiple hypergeometric functions: probabilistic interpretations and statistical uses. J. Am. Stat. Assoc. 78(383), 628–637 (1983). https://doi.org/10.2307/2288131

Dickey, J.M., Jiang, J., Kadane, J.B.: Bayesian methods for censored categorical data. J. Am. Stat. Assoc. 82(399), 773–781 (1987). https://doi.org/10.2307/2288786

Doucet, A., Johansen, A.: A tutorial on particle filtering and smoothing: fifteen years later (2008)

Duane, S., Kennedy, A.D., Pendleton, B.J., Duncan, R.: Hybrid Monte Carlo. Phys. Lett. B 195(2), 216–222 (1987). https://doi.org/10.1016/0370-2693(87)91197-X

Ermis, B., Ernst, P., Stein, Y., Zappella, G.: Learning to rank in the position based model with bandit feedback. In: Proceedings of the 29th ACM International Conference on Information and Knowledge Management, pp. 2405–2412 (2020)

Falahatgar, M., Orlitsky, A., Pichapati, V., Suresh, A.T.: Maximum selection and ranking under noisy comparisons. In: International Conference on Machine Learning, pp. 1088–1096 (2017)

Gentile, C., Li, S., Zappella, G.: Online clustering of bandits. In: Proceedings of the 31st International Conference on Machine Learning, vol. 32, pp. II–757 (2014)

Gilks, W.R., Best, N.G., Tan, K.K.C.: Adaptive rejection Metropolis sampling within Gibbs sampling. J. R. Stat. Soc. Ser. C Appl. Stat. 44(4), 455–472 (1995)

Gopalan, A., Mannor, S., Mansour, Y.: Thompson sampling for complex online problems. In: Proceedings of the 31st International Conference on Machine Learning, pp. 100–108 (2014)

Guiver, J., Snelson, E.: Bayesian inference for Plackett–Luce ranking models. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 377–384. ACM (2009)

Gumbel, E.J.: Statistical theory of extreme values and some practical applications: a series of lectures. Technical Report (1954)

Gündoğdu, İ: Sequential Monte Carlo approach to inference in Bayesian choice models. Master’s Thesis, Bogazici University, (2019). https://github.com/ilkerg/preference-sampler/raw/master/thesis.pdf

Hankin, R.K.S.: A generalization of the Dirichlet distribution. J. Stat. Softw. 33(11), 1–18 (2010). https://doi.org/10.18637/jss.v033.i11

Herlocker, J.L., Konstan, J.A., Terveen, L.G., Riedl, J.T.: Evaluating collaborative filtering recommender systems. ACM Trans. Inf. Syst. 22(1), 5–53 (2004). https://doi.org/10.1145/963770.963772

Hu, Y., Koren, Y., Volinsky, C.: Collaborative filtering for implicit feedback datasets. In: 2008 Eighth IEEE International Conference on Data Mining, pp. 263–272 (2008). https://doi.org/10.1109/ICDM.2008.22

Hunter, D.R.: MM Algorithms for generalized Bradley–Terry models. Ann. Stat. 32(1), 384–406 (2004). https://doi.org/10.1214/aos/1079120141

Jamieson, K.G., Nowak, R.: Active ranking using pairwise comparisons. In: Advances in Neural Information Processing Systems, pp. 2240–2248 (2011)

Jiang, R., Chiappa, S., Lattimore, T., György, A., Kohli, P.: Degenerate feedback loops in recommender systems. In: Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society (2019). https://doi.org/10.1145/3306618.3314288

Jiang, T.J., Kadane, J.B., Dickey, J.M.: Computation of Carlson’s multiple hypergeometric function R for Bayesian applications. J. Comput. Graph. Stat. 1(3), 231–251 (1992). https://doi.org/10.1080/10618600.1992.10474583

Joachims, T., Raimond, Y., Koch, O., Dimakopoulou, M., Vasile, F., Swaminathan, A.: Reveal 2020: Bandit and reinforcement learning from user interactions. In: Fourteenth ACM Conference on Recommender Systems, RecSys ’20, pp. 628–629 (2020). https://doi.org/10.1145/3383313.3411536

Kawale, J., Bui, H.H., Kveton, B., Tran-Thanh, L., Chawla, S.: Efficient Thompson sampling for online matrix-factorization recommendation. In: Advances in Neural Information Processing Systems, pp. 1297–1305 (2015)

Komiyama, J., Qin, T.: Time-decaying bandits for non-stationary systems. In: Liu, T.-Y., Qi, Q., Ye, Y. (eds.) Web and Internet Economics, pp. 460–466. Springer International Publishing, Cham (2014)

Komiyama, J., Honda, J., Kashima, H., Nakagawa, H.: Regret lower bound and optimal algorithm in dueling bandit problem. In: Conference on Learning Theory, pp. 1141–1154 (2015)

Komiyama, J., Honda, J., Nakagawa, H.: Copeland dueling bandit problem: regret lower bound, optimal algorithm, and computationally efficient algorithm. In: International Conference on Machine Learning, pp. 1235–1244 (2016)

Komiyama, J., Honda, J., Takeda, A.: Position-based multiple-play bandit problem with unknown position bias. Adv. Neural Inf. Process. Syst. 30, 4998–5008 (2017)

Kveton, B., Szepesvári, C., Wen, Z., Ashkan, A.: Cascading bandits: learning to rank in the cascade model. In: Proceedings of the 32nd International Conference on Machine Learning, vol. 37, pp. 767–776 (2015a)

Kveton, B., Wen, Z., Ashkan, A., Szepesvári, C.: Combinatorial cascading bandits. In: Proceedings of the 28th International Conference on Neural Information Processing Systems, vol. 1, pp. 1450–1458 (2015b)

Lattimore, T., Szepesvári, C.: Bandit Algorithms. Cambridge University Press (2020). https://doi.org/10.1017/9781108571401

Lattimore, T., Kveton, B., Li, S., Szepesvári, C.: Toprank: A practical algorithm for online stochastic ranking. In: Advances in Neural Information Processing Systems, pp. 3949–3958 (2018)

Leonard, T.: An alternative Bayesian approach to the Bradley–Terry model for paired comparisons. Biometrics 33, 121–132 (1977). https://doi.org/10.2307/2529308

Levine, N., Crammer, K., Mannor, S.: Rotting bandits. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 3077–3086 (2017)

Li, S., Karatzoglou, A., Gentile, C.: Collaborative filtering bandits. In: Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 539–548 (2016)

Liang, D., Charlin, L., Blei, D.M.: Causal inference for recommendation. In: UAI Workshop on Causation: Foundation to Application (2016a)

Liang, D., Charlin, L., McInerney, J., Blei, D.M.: Modeling user exposure in recommendation. In: Proceedings of the 25th International Conference on World Wide Web, WWW ’16, pp. 951–961 (2016b). https://doi.org/10.1145/2872427.2883090

Liang, D., Krishnan, R.G., Hoffman, M.D., Jebara, T.: Variational autoencoders for collaborative filtering. In: Proceedings of the 2018 World Wide Web Conference, WWW ’18. International World Wide Web Conferences Steering Committee, pp. 689–698 (2018). https://doi.org/10.1145/3178876.3186150

Liu, T.-Y.: Learning to rank for information retrieval. Found. Trends® Inf. Retr. 3(3), 225–331 (2009)

Liu, Y., Li, L.: A map of bandits for e-commerce. In: KDD 2021 Workshop on Multi-Armed Bandits and Reinforcement Learning: Advancing Decision Making in E-Commerce and Beyond (2021)

Luce, R.D.: Individual Choice Behavior. Wiley, Hoboken (1959)

Mehrotra, R., McInerney, J., Bouchard, H., Lalmas, M., Diaz, F.: Towards a fair marketplace: counterfactual evaluation of the trade-off between relevance, fairness and satisfaction in recommendation systems. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pp. 2243–2251. ACM (2018). https://doi.org/10.1145/3269206.3272027

Mohajer, S., Suh, C., Elmahdy, A.: Active learning for top-\(k\) rank aggregation from noisy comparisons. In: International Conference on Machine Learning, pp. 2488–2497 (2017)

Neal, R.M.: Slice sampling. Ann. Stat. 31(3), 705–767 (2003)

Nie, X., Tian, X., Taylor, J., Zou, J.: Why adaptively collected data have negative bias and how to correct for it. In: International Conference on Artificial Intelligence and Statistics, pp. 1261–1269 (2018)

Pariser, E.: The filter bubble: What the internet is hiding from you. Penguin, UK (2011)

Plackett, R.L.: The analysis of permutations. Appl. Stat. 24(2), 193–202 (1975)

Pu, P., Chen, L., Hu, R.: Evaluating recommender systems from the user’s perspective: survey of the state of the art. User Model. User-Adapt. Interact. 22(4–5), 317–355 (2012). https://doi.org/10.1007/s11257-011-9115-7

Regenwetter, M., Dana, J., Davis-Stober, C.P.: Transitivity of preferences. Psychol. Rev. 118(1), 42 (2011). https://doi.org/10.1037/a0021150

Russo, D.J., Van Roy, B., Kazerouni, A., Osband, I., Wen, Z.: A tutorial on Thompson sampling. Found. Trends® Mach. Learn. 11(1), 1–96 (2018)

Saha, A., Gopalan, A.: Battle of bandits. In: Proceedings of the Thirty-Fourth Conference on Uncertainty in Artificial Intelligence, UAI, pp. 06–10 (2018)

Saha, A., Gopalan, A.: Combinatorial bandits with relative feedback. In: Advances in Neural Information Processing Systems, pp. 983–993 (2019)

Schmit, S., Riquelme, C.: Human interaction with recommendation systems. In: Proceedings of the 21st International Conference on Artificial Intelligence and Statistics (2018)

Schnabel, T., Swaminathan, A., Singh, A., Chandak, N., Joachims, T.: Recommendations as treatments: debiasing learning and evaluation. In: International Conference on Machine Learning, pp. 1670–1679 (2016)

Sinha, A., Gleich, D.F., Ramani, K.: Deconvolving feedback loops in recommender systems. In: Advances in Neural Information Processing Systems, pp. 3243–3251 (2016)

Sopher, B.: Intransitive cycles: rational choice or random error? An answer based on estimation of error rates with experimental data. Theor. Decis. 35(3), 311–336 (1993). https://doi.org/10.1007/BF01075203

Sui, Y., Zhuang, V., Burdick, J.W., Yue, Y.: Multi-dueling bandits with dependent arms. In: Proceedings of the Thirty-Third Conference on Uncertainty in Artificial Intelligence, UAI (2017)

Sui, Y., Zoghi, M., Hofmann, K., Yue, Y.: Advancements in dueling bandits. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, pp. 5502–5510 (2018). https://doi.org/10.24963/ijcai.2018/776

Sun, W., Khenissi, S., Nasraoui, O., Shafto, P.: Debiasing the human-recommender system feedback loop in collaborative filtering. In: Companion Proceedings of the 2019 World Wide Web Conference, WWW ’19, pp. 645–651. Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3308560.3317303

Szörényi, B., Busa-Fekete, R., Paul, A., Hüllermeier, E.: Online rank elicitation for Plackett–Luce: a dueling bandits approach. In: Advances in Neural Information Processing Systems, pp. 604–612 (2015)

Thompson, W.R.: On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika 25(3/4), 285–294 (1933)

Urvoy, T., Clerot, F., Féraud, R., Naamane, S.: Generic exploration and k-armed voting bandits. In: International Conference on Machine Learning, pp. 91–99 (2013)

Wang, Y., Liang, D., Charlin, L., Blei, D.M.: Causal inference for recommender systems. In: Fourteenth ACM Conference on Recommender Systems, RecSys ’20, pp. 426–431 (2020). https://doi.org/10.1145/3383313.3412225

Wu, H., Liu, X.: Double Thompson sampling for dueling bandits. In: Advances in Neural Information Processing Systems, pp. 649–657 (2016)

Yang, S.-H., Long, B., Smola, A.J., Zha, H., Zheng, Z.: Collaborative competitive filtering: learning recommender using context of user choice. In: Proceedings of the 34th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 295–304. ACM (2011). https://doi.org/10.1145/2009916.2009959

Yellott, J.I., Jr.: The relationship between Luce’s choice axiom, Thurstone’s theory of comparative judgment, and the double exponential distribution. J. Math. Psychol. 15(2), 109–144 (1977)

Yue, Y., Joachims, T.: Beat the mean bandit. In: International Conference on Machine Learning, pp. 241–248 (2011)

Yue, Y., Broder, J., Kleinberg, R.: The k-armed dueling bandits problem. J. Comput. Syst. Sci. 78(5), 1538–1556 (2012). https://doi.org/10.1016/j.jcss.2011.12.028

Zhao, X., Zhang, W., Wang, J.: Interactive collaborative filtering. In: Proceedings of the 22nd ACM International Conference on Information and Knowledge Management, pp. 1411–1420 (2013)

Zipf, G.K.: Human Behavior and the Principle of Least Effort. Addison Wesley Press Inc, Pearson (1949)

Zoghi, M., Whiteson, S., Munos, R., De Rijke, M.: Relative upper confidence bound for the k-armed dueling bandit problem. In: International Conference on Machine Learning, pp. 10–18 (2014a)

Zoghi, M., Whiteson, S.A., De Rijke, M., Munos, R.: Relative confidence sampling for efficient on-line ranker evaluation. In: ACM International Conference on Web Search and Data Mining, pp. 73–82 (2014b). https://doi.org/10.1145/2556195.2556256

Zoghi, M., Karnin, Z.S., Whiteson, S., De Rijke, M.: Copeland dueling bandits. In: Advances in Neural Information Processing Systems, pp. 307–315 (2015)

Zoghi, M., Tunys, T., Ghavamzadeh, M., Kveton, B., Szepesvari, C., Wen, Z.: Online learning to rank in stochastic click models. In: Proceedings of the 34th International Conference on Machine Learning, vol. 70, pp. 4199–4208 (2017)

Zong, S., Ni, H., Sung, K., Ke, N.R., Wen, Z., Kveton, B.: Cascading bandits for large-scale recommendation problems. In: UAI (2016)

Acknowledgements

The authors would like to thank Çağrı Sofuoğlu and Özge Bozal for fruitful discussions on conceptualizing the modeling ideas and design of some experiments, respectively.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Ali Caner Türkmen: work done prior to joining Amazon.

Appendices

1.1 Posterior predictive inference

We can write \(p(k_{1:T}\mid C_{1:T},\alpha , \beta )\), the probability of a sequence of choices conditioned on a sequence of presentations and the hyperparameters, as ratios of \({\mathcal {R}}\) functions

where the notation \((x)_{(n)} = \frac{\Gamma (x+n)}{\Gamma (x)}\) denotes the rising factorial and \({\mathbf {y}}\) is the vector \((y_1, y_2, \ldots , y_K)\)

This gives the predictive preference of option k (over all [K]) as

where \({\mathbf {k}}\) is the indicator vector of size K where all but the k-th element are 0.

1.2 Learning from preferences

Although the assumptions implied by Luce’s choice axiom come with the trade-off of failing to capture multimodal preferences, they allow fast inference from a small fraction of possible presentations. Motivated by the psychology literature, for the multimodal case a mixture extension is conjectured in the main text.

Dirichlet–Luce is not the only Bayesian choice model we can write that assumes the restricted multinomial likelihood, but it is a natural one considering an interactive system. Let us review an alternative model, due to Caron and Doucet (2012), and then highlight our main motivation.

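Caron and Doucet Model: Luce’s choice model corresponds to a random utility model with Gumbel noise (Yellott Jr 1977). That is to say, the multinomial choice can be modeled with the so-called Gumbel-max procedure (Gumbel 1954). Assuming positive mean utilities \(u_\kappa \), \(\forall \kappa \in [K]\), the following procedure:

$$\begin{aligned} v_\kappa \sim {{\mathcal {G}}}{{\mathcal {U}}}(\log u_\kappa , 1), \quad \kappa \in C, \qquad k = \mathop {\mathrm {arg\,max}}\limits _{\kappa \in C} v_\kappa , \end{aligned}$$

where \(v_\kappa \) is the random utility of option \(\kappa \) and \({{\mathcal {G}}}{{\mathcal {U}}}(l, 1)\) denotes the Gumbel distribution with location l and scale 1, gives the restricted multinomial (Luce 1959):

$$\begin{aligned} p(k \mid C, u) = \frac{u_k}{\sum _{\kappa \in C} u_\kappa }, \quad k \in C. \end{aligned}$$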

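A Bayesian approach would treat an unnormalized choice probability \(u_k \sim {\mathcal {U}}_k\) as a random variable. A notable method is due to Caron and Doucet (2012). There, the multinomial choice restricted to a presentation is cast as an exponential race with the following generative model:

$$\begin{aligned} u_\kappa \sim {\mathcal {G}}(a, b), \quad \kappa \in [K], \qquad z_{t, \kappa } \sim {\mathcal {E}}(u_\kappa ), \quad \kappa \in C_t, \qquad k_t = \mathop {\mathrm {arg\,min}}\limits _{\kappa \in C_t} z_{t, \kappa }. \end{aligned}$$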

Here, \({\mathcal {G}}(a, b)\) denotes the gamma distribution with shape a and inverse scale b (i.e., \({\mathbb {E}}U_\kappa = a/b\)), and \({\mathcal {E}}(u)\) denotes the exponential distribution with rate u. We refer to Balog et al. (2017) for the relation between the exponential race and the Gumbel-max trick. In the Caron and Doucet model, restricted to pairwise choices, we define \(x_{t, i,j} = \min (z_{t, i}, z_{t, j})\). Then \(x_{t, i, j}\sim {\mathcal {E}}(u_{i}+u_{j})\), and \(\sum _t{x_{t, i, j}} = X_{i,j} \sim {\mathcal {G}}(\mu (\{i,j\}), u_{i}+u_{j})\). The complete conditionals, obtained as

$$\begin{aligned} X_{i,j} \mid u \sim {\mathcal {G}}(\mu (\{i,j\}), u_{i}+u_{j}), \qquad u_\kappa \mid X, y \sim {\mathcal {G}}\Big (a + y_\kappa , \; b + \sum _{j:\, \mu (\{\kappa , j\}) > 0} X_{\kappa , j}\Big ), \end{aligned}$$

can be utilized to implement a Gibbs sampler. The statistics y and \(\mu \) are identical to the Dirichlet–Luce case. Both models operate with the same statistics, converge to similar preferences (when normalized versions of the posterior \(u_\kappa \)’s are used in the Caron and Doucet model), and require sampling for inference.
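The correspondence between the Gumbel-max procedure, the exponential race, and the restricted multinomial can be checked numerically. The following is a minimal sketch, assuming the Gumbel location is \(\log u_\kappa \) (the standard Gumbel-max construction); the function names, the utilities `u`, and the presentation `C` are hypothetical and chosen only for illustration.

```python
import math
import random


def luce_probs(u, C):
    """Restricted multinomial (Luce) probabilities over the presentation C."""
    total = sum(u[k] for k in C)
    return {k: u[k] / total for k in C}


def gumbel_max_choice(u, C, rng):
    """Pick the option in C with the largest Gumbel(log u_k, 1) utility."""
    def gumbel(loc):
        # If E ~ Exp(1), then loc - log(E) is Gumbel(loc, 1).
        return loc - math.log(rng.expovariate(1.0))
    return max(C, key=lambda k: gumbel(math.log(u[k])))


def exponential_race_choice(u, C, rng):
    """Pick the option in C whose Exp(rate=u_k) arrival time is smallest."""
    return min(C, key=lambda k: rng.expovariate(u[k]))


def empirical_freqs(chooser, u, C, n, rng):
    """Relative choice frequencies over n simulated choices from C."""
    counts = {k: 0 for k in C}
    for _ in range(n):
        counts[chooser(u, C, rng)] += 1
    return {k: counts[k] / n for k in C}


rng = random.Random(0)
u = [2.0, 1.0, 0.5, 4.0, 0.25]   # hypothetical unnormalized preferences
C = (0, 2, 3)                    # hypothetical presentation
exact = luce_probs(u, C)
gumbel_freqs = empirical_freqs(gumbel_max_choice, u, C, 20000, rng)
race_freqs = empirical_freqs(exponential_race_choice, u, C, 20000, rng)
for k in C:
    print(k, round(exact[k], 3), round(gumbel_freqs[k], 3), round(race_freqs[k], 3))
```

Both simulated procedures should give choice frequencies close to \(u_k / \sum _{\kappa \in C} u_\kappa \), up to Monte Carlo error.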

In the main text, for the case where the observations comply with the Luce choice axiom, the following scenario was described as an example where \(K-1\) distinct pairwise preferences would be sufficient to recover the preferences. There, we first pick an arbitrary pivot option, and then observe preferences from pairs of options formed by the pivot and one of the other \(K-1\) options. We highlight that, despite the seemingly combinatorial dimensionality of the statistics, both the Dirichlet–Luce model and the Caron and Doucet model converge to a reasonable estimate of the overall preferences, i.e., \(p(k\mid [K])\) (Fig. 9). There, the Gibbs sampler of Caron and Doucet (2012) and, for the Dirichlet–Luce model, a Hamiltonian Monte Carlo (Duane et al. 1987) sampler implemented in the Stan probabilistic programming language (Carpenter et al. 2017) are used to obtain posterior samples.

Fig. 9 Estimated (from 500 samples) posterior mean of \(\theta \) (over 20 runs) conditioned on many (2000) choices from pairwise presentations constructed with a randomly selected pivot option and the others. \(\theta ^*\) is ordered, and \({\mathbb {E}}[\theta ]\) estimates are conformably permuted for visualization. Shaded regions denote the standard deviation.
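The pivot scenario can be simulated in a few lines. The sketch below is not the authors' implementation: it assumes the complete conditionals take the gamma form \(X_{i,j}\mid u \sim {\mathcal {G}}(\mu (\{i,j\}), u_i+u_j)\) and \(u_\kappa \mid X, y \sim {\mathcal {G}}(a + y_\kappa , b + \sum _j X_{\kappa , j})\), where \(y_\kappa \) counts how often \(\kappa \) was chosen. The hyperparameters \(a = b = 1\), the ground truth `theta_star`, and all function names are hypothetical.

```python
import random


def simulate_pivot_choices(theta, pivot, T, rng):
    """Simulate T Luce choices from pairs {pivot, j}; return counts y and mu."""
    K = len(theta)
    y = [0] * K          # y[k]: number of times option k was chosen
    mu = {}              # mu[(i, j)]: number of times pair (i, j) was presented
    others = [j for j in range(K) if j != pivot]
    for _ in range(T):
        j = rng.choice(others)
        pair = (min(pivot, j), max(pivot, j))
        p_pivot = theta[pivot] / (theta[pivot] + theta[j])
        winner = pivot if rng.random() < p_pivot else j
        y[winner] += 1
        mu[pair] = mu.get(pair, 0) + 1
    return y, mu


def gibbs_posterior_mean(y, mu, a=1.0, b=1.0, n_iter=600, burn_in=100, rng=None):
    """Data-augmentation Gibbs sampler; returns the mean of the normalized u."""
    rng = rng or random.Random()
    K = len(y)
    u = [1.0] * K
    mean = [0.0] * K
    kept = 0
    for it in range(n_iter):
        # X_ij | u ~ Gamma(shape=mu_ij, rate=u_i + u_j).
        # random.gammavariate takes (shape, scale), so scale = 1 / rate.
        rate = [b] * K
        for (i, j), m in mu.items():
            x = rng.gammavariate(m, 1.0 / (u[i] + u[j]))
            rate[i] += x
            rate[j] += x
        # u_k | X, y ~ Gamma(shape=a + y_k, rate=b + sum of X over pairs with k).
        u = [rng.gammavariate(a + y[k], 1.0 / rate[k]) for k in range(K)]
        if it >= burn_in:
            total = sum(u)
            for k in range(K):
                mean[k] += u[k] / total
            kept += 1
    return [m / kept for m in mean]


rng = random.Random(1)
theta_star = [0.4, 0.3, 0.15, 0.1, 0.05]   # hypothetical ground truth
y, mu = simulate_pivot_choices(theta_star, pivot=0, T=2000, rng=rng)
theta_hat = gibbs_posterior_mean(y, mu, rng=rng)
print(len(mu), [round(t, 3) for t in theta_hat])
```

Only \(K-1\) distinct presentations enter the statistic \(\mu \) here, yet the normalized posterior mean should approximate the full preference vector.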

If the task of the hypothesized interactive system were only to infer preferences from a batch of choice observations, other choice models, such as that of Caron and Doucet (2012) for pairwise preferences and that of Guiver and Snelson (2009) for L-wise preferences, would serve our purposes. In fact, they converge to similar preferences conditioned on a batch of choice observations. But the system’s task is twofold. In addition to the inference task, the learning system is responsible for the discovery task: because K is so large in practice, it must find all good items without overlooking any alternative (Herlocker et al. 2004).

We need a sequential decision-making procedure, that is, an online presentation mechanism. The fundamental motivation for devising Dirichlet–Luce is that it gives us a conjugate density that can be used directly in the interactive learning scenario. The resulting sequential decision-making procedure is straightforward and can be implemented with a sequential sampler. Finally, although the posterior is updated at each interaction round, the sequence of choices \(k_{1:T}\mid C_{1:T}, \alpha , \beta \), with the choice probabilities integrated out, is exchangeable (as can be seen from Eq. 5).

The preference learning illustration in the main text is not the only example we can devise to demonstrate the efficiency of the inference procedure. We now give two other scenarios, which again exploit the underlying model assumptions. The first checks consistency, and the second is by analogy to stochastic ranking algorithms:

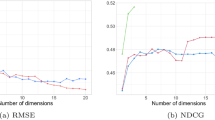

Preference elicitation from feedback to overlapping presentations: In this scenario, we assume we observe preferences from the presentations \(C_1 = \{1, 2\}, C_2 = \{2, 3\}, \ldots , C_{K-1} = \{K-1, K\}\). Note that here \(\nu _{k, C}\) (the underlying contingency table in the main text) is ambiguous, since the statistics that the model uses include only the margins of \(\nu \), and an option is presented together with multiple other options. The dimensionality of \(\mu \) is again \(K - 1\). In this scenario, too, the posterior predictive probabilities of both our model and the Caron and Doucet model converge to the latent preferences (Fig. 10a).

Active ranking: As before, assuming a single preference vector underlying the choice probabilities leads to the implicit assumption that preferences are (stochastically) transitive: options admit a total ordering in their probability of being chosen against all others. By analogy, a sorting algorithm that relies on transitivity would find the ordering of K options with \(O(K\log K)\) pairwise comparisons. Similarly, in the field of active learning, stochastic ranking from pairwise preferences has been widely explored (Falahatgar et al. 2017; Szörényi et al. 2015; Jamieson and Nowak 2011; Busa-Fekete et al. 2014, 2013; Mohajer et al. 2017). Here too, the objective is to attain a probably approximately correct ranking of preferences with low sample complexity. Analogously to our case, the algorithm receives stochastic comparison (random preference) feedback instead of a deterministic comparison result.

In this light, we explore whether the Dirichlet–Luce and Caron and Doucet constructions are able to recover a good representation of preferences, given a number of samples on the same order as a stochastic ranking algorithm. We take the Merge-Rank algorithm (Falahatgar et al. 2017), a stochastic variant of the mergesort algorithm, for active subset selection, that is, for selecting the pairs of options on which to ask the environment for preference feedback. We fix a preference vector \(\theta ^*\) and run the Merge-Rank algorithm, generating a set of pairwise comparisons \(C_{1:T}\) (corresponding to our presentations) and stochastic feedback \(k_{1:T}\) (corresponding to choices). In Fig. 10b, we find that \(\theta ^*\) can be recovered accurately based on the same number of samples required by a stochastic ranker. As in mergesort, the number of unique presentations is \(O(K\log K)\).
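To illustrate why the number of unique presentations stays at \(O(K\log K)\), the sketch below runs a plain mergesort whose comparator is a majority vote over repeated noisy Luce choices. This is a simplified stand-in and not the Merge-Rank algorithm of Falahatgar et al. (2017); the number of repetitions, the random `theta_star`, and the function names are assumptions made for illustration.

```python
import math
import random


def noisy_prefers(i, j, theta, rng, repeats, log):
    """Majority vote over repeated Luce choices from the presentation {i, j}."""
    wins_i = 0
    for _ in range(repeats):
        chosen = i if rng.random() < theta[i] / (theta[i] + theta[j]) else j
        log.append(((min(i, j), max(i, j)), chosen))  # (presentation, choice)
        wins_i += chosen == i
    return 2 * wins_i > repeats


def noisy_merge_sort(items, theta, rng, repeats, log):
    """Sort items from most to least preferred using noisy comparisons."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = noisy_merge_sort(items[:mid], theta, rng, repeats, log)
    right = noisy_merge_sort(items[mid:], theta, rng, repeats, log)
    merged = []
    a = b = 0
    while a < len(left) and b < len(right):
        if noisy_prefers(left[a], right[b], theta, rng, repeats, log):
            merged.append(left[a])
            a += 1
        else:
            merged.append(right[b])
            b += 1
    return merged + left[a:] + right[b:]


rng = random.Random(2)
K = 16
weights = [rng.random() + 0.05 for _ in range(K)]
theta_star = [w / sum(weights) for w in weights]   # hypothetical ground truth
log = []                                           # collects (C_t, k_t) pairs
ranking = noisy_merge_sort(list(range(K)), theta_star, rng, repeats=25, log=log)
unique_presentations = {C for C, _ in log}
print(len(unique_presentations), "unique pairs; K*log2(K) =", K * math.log2(K))
```

The recorded `log` plays the role of \((C_{1:T}, k_{1:T})\) and could be passed to any of the inference routines discussed in this appendix.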

1.2.1 Maximum a posteriori estimation

The Dirichlet–Luce posterior log potential \(\phi (\theta )\) is defined as

Here, the index C runs over L-way combinations of the set of choices [K], and k indexes the choices themselves. As in the main text, introducing the indicator matrix \(Z \in \{0, 1\}^{K \times |{\mathcal {C}}|}\), defined as \(z_{k, \sigma (C)} = [k \in C]\), \(\phi (\theta )\) is written as

The gradient of the log-posterior potential (7) can be computed in \(O(K+LD)\) time, as the sparsity of Z can be invoked. In large data scenarios, the MAP estimate can be used as a point estimate of preferences as well as for approximate inference as described in the previous section. However, the concern for the number of samples required for an accurate estimate should be raised for MAP estimates as well.

In Fig. 11, we continue the synthetic experiment setup in Fig. 10b. Namely, we generate pairwise comparisons from Merge-Rank for random dense \(\theta ^*\). We then increase the number of random samples T taken from these data and study the accuracy of MAP preference estimates attained by our model. We report the error in estimated \(\theta \) in terms of total absolute deviation and Kullback–Leibler divergence. We find that the MAP routine yields an accurate preference estimate in a reasonable number of observations (Fig. 11).
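A small version of this experiment can be sketched as follows. The code assumes the log potential has the form \(\phi (\theta ) = \sum _k (\alpha _k + y_k - 1)\log \theta _k - \sum _C (\beta _C + \mu (C)) \log \sum _{k \in C}\theta _k\), which matches the restricted multinomial likelihood but should be checked against the definition in the main text. It uses plain gradient ascent in a softmax parameterization and uniformly random pairs instead of Merge-Rank; the step size, \(\alpha = 2\), \(\beta = 0\), and `theta_star` are hypothetical choices.

```python
import math
import random
from itertools import combinations


def softmax(w):
    m = max(w)
    e = [math.exp(x - m) for x in w]
    s = sum(e)
    return [x / s for x in e]


def simulate(theta, presentations, T, rng):
    """Draw T Luce choices; return choice counts y and presentation counts mu."""
    y = [0] * len(theta)
    mu = {C: 0 for C in presentations}
    for _ in range(T):
        C = rng.choice(presentations)
        k = rng.choices(C, weights=[theta[i] for i in C])[0]
        y[k] += 1
        mu[C] += 1
    return y, mu


def map_estimate(y, mu, alpha, beta, steps=5000):
    """Gradient ascent on phi(softmax(w)); beta maps presentations to beta_C."""
    K = len(y)
    a = [alpha[k] + y[k] - 1.0 for k in range(K)]          # exponents of theta_k
    b = {C: beta.get(C, 0.0) + m for C, m in mu.items()}   # exponents of 1 / sum over C
    rest = sum(a) - sum(b.values())
    lr = 1.0 / (sum(a) + sum(b.values()))                  # conservative step size
    w = [0.0] * K
    for _ in range(steps):
        theta = softmax(w)
        grad = list(a)
        for C, bC in b.items():          # sparsity: only options inside C are touched
            sC = sum(theta[k] for k in C)
            for k in C:
                grad[k] -= bC * theta[k] / sC
        w = [w[k] + lr * (grad[k] - rest * theta[k]) for k in range(K)]
    return softmax(w)


rng = random.Random(3)
theta_star = [0.4, 0.3, 0.15, 0.1, 0.05]          # hypothetical ground truth
pairs = list(combinations(range(5), 2))
y, mu = simulate(theta_star, pairs, T=4000, rng=rng)
theta_map = map_estimate(y, mu, alpha=[2.0] * 5, beta={})
tad = sum(abs(p - q) for p, q in zip(theta_map, theta_star))          # total absolute deviation
kl = sum(q * math.log(q / p) for p, q in zip(theta_map, theta_star))  # KL(theta* || MAP)
print(round(tad, 4), round(kl, 5))
```

The softmax parameterization keeps the iterate on the simplex without an explicit projection; the direction of the Kullback–Leibler divergence used here is one possible convention.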

1.3 Independence of unexplored options

In Sect. 3.3 of the main text, we stated that the posterior leads to fair preference estimates, since the choice probabilities of unexplored options (those for which \(k \in C\) implies \(\mu (C)=0\)) are independent of the other choices. Here, we demonstrate this result by deriving the marginal distribution of the posterior choice probabilities for such options.

Lemma 3

(Independence of unexplored options) Assume \(\mu (C) = 0, \forall C \ni \ell \). It then follows, \(p(\theta _\ell \mid \alpha , \beta _0, k_{1:T}, C_{1:T}) = p(\theta _\ell \mid \alpha , \beta _0)\).

Proof

Assume, without loss of generality, that option 1 was never presented. Since, with \(\beta = \beta _0\), the prior on \(\theta \) is Dirichlet with parameter \(\alpha \), the prior marginal \(\theta _1|\alpha , \beta _0\) is beta-distributed with parameters \((\alpha _1, \sum _{j=2}^K \alpha _j)\). The posterior marginal is

where \(T_1 = \{(\theta _2, \ldots , \theta _K)\mid \sum _{j=2}^K\theta _j=1-\theta _1, \theta _j>0\}\).

With a change of variables \(u_j = \frac{\theta _j}{1-\theta _1}\) for \(j \in \{2, \ldots , K\}\), we obtain:

Then the posterior marginal \(\theta _1\mid \alpha , k_{1:T}, C_{1:T}\) is also beta-distributed with parameters \((\alpha _1, \sum _{j=2}^K \alpha _j)\), identical to the prior \(p(\theta _1|\alpha , \beta _0)\). \(\square \)
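The lemma can be checked numerically with self-normalized importance sampling: draw \(\theta \) from the Dirichlet prior, weight each draw by the likelihood of choices made from presentations that never contain the unexplored option, and compare the weighted mean of that option's probability with its prior mean. The sketch below is only an illustration under \(\beta = \beta _0\) (a plain Dirichlet prior); the parameters `alpha`, the observations, and the zero-based index of the unexplored option are hypothetical.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw from Dirichlet(alpha) by normalizing independent gamma variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    s = sum(g)
    return [x / s for x in g]


def luce_likelihood(theta, observations):
    """Product of theta_k / sum_{j in C} theta_j over (C, k) observations."""
    lik = 1.0
    for C, k in observations:
        lik *= theta[k] / sum(theta[j] for j in C)
    return lik


rng = random.Random(4)
alpha = [1.0, 2.0, 1.0, 3.0]            # hypothetical Dirichlet parameters
# Option 0 is never presented; every presentation is a subset of {1, 2, 3}.
observations = [((1, 2), 1), ((1, 3), 3), ((2, 3), 3),
                ((1, 2), 1), ((1, 2, 3), 3), ((1, 3), 1)]

num = den = 0.0
for _ in range(50000):
    theta = sample_dirichlet(alpha, rng)
    w = luce_likelihood(theta, observations)   # importance weight
    num += w * theta[0]
    den += w
posterior_mean = num / den
prior_mean = alpha[0] / sum(alpha)             # mean of Beta(alpha_0, sum of the rest)
print(round(prior_mean, 4), round(posterior_mean, 4))
```

The weights depend on \(\theta \) only through the ratios among the presented options, which is why they carry no information about the unexplored option and the two means should agree up to Monte Carlo error.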

1.4 Sequential sampling procedure

The complete online learning to recommend algorithm along with the sequential sampling routine is listed in Algorithm 2. Detailed treatment of the Gibbs-based move kernel and computational aspects of full-conditional density evaluations can be found in Gündoğdu (2019).

1.5 Simulation details

All dueling bandit simulations were run based on a sparse and then a dense \(\theta ^*\). Figure 13a shows these 50-dimensional vectors. One hyperparameter for the MM algorithm is set based on a held-out simulation assuming the same \(\theta ^*\)s. The Last.FM simulation is performed using a \(\theta ^*\) that is inferred by fitting LDA to artist listening data. The top 50 artists in terms of probability of being listened to according to this \(\theta ^*\) are shown in Fig. 14.

1.6 L-wise preference simulations

Our bandit algorithm outperforms the baselines in both pairwise (\(L=2\)) and subset-wise (\(L=5\)) selection tasks. In Fig. 12a, fixing \(N=2\), we explore how learning speed improves as the system is allowed to make larger presentations. As expected, growing presentation sizes lead to lower cumulative regret, i.e., the algorithm learns to present the top 2 options sooner. We also report the dimensionality of the statistic \(\mu \), i.e., the number of unique presentations explored before converging to a preference estimate. Despite the potentially high complexity, the algorithm maintains manageably low-dimensional statistics (Fig. 12b). For these simulations, we used a 100-dimensional sparse \(\theta ^*\), as shown in Fig. 13b.

For each of the online learning to rank experiments, the TopRank hyperparameter is selected based on a held-out simulation assuming the same \(\theta ^*\). Finally, with presentation size \(L=5\), we used the \(\theta ^*\) shown in Fig. 13c.

About this article

Cite this article

Çapan, G., Gündoğdu, İ., Türkmen, A.C. et al. Dirichlet–Luce choice model for learning from interactions. User Model User-Adap Inter 32, 611–648 (2022). https://doi.org/10.1007/s11257-022-09331-0

DOI: https://doi.org/10.1007/s11257-022-09331-0