Abstract

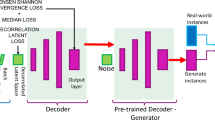

Density estimation is a challenging unsupervised learning problem. Current maximum likelihood approaches for density estimation are either restrictive or incapable of producing high-quality samples. On the other hand, likelihood-free models such as generative adversarial networks produce sharp samples without a density model. The lack of a density estimate, however, limits the applications to which the sampled data can be put. We propose a generative adversarial density estimator (GADE), a density estimation approach that bridges the gap between the two. Allowing for a prior on the parameters of the model, we extend our density estimator to a Bayesian model in which the predictive variance can be used to measure our confidence in the likelihood. Our experiments on challenging applications such as visual dialog and autonomous driving, where the density and the confidence in predictions are crucial, show the effectiveness of our approach.

Notes

This is merely for theoretical analysis. In practice, we don’t need to explicitly define an invertible function.

A randomized version of this lemma is proposed in Boutsidis et al. (2017).

We observed in practice that if the generator function \(g_{z}\) shares weights with \(\phi \) (i.e. the second layer of \(g_{z}\) with the last layer of \(\phi \), and so forth), the approach does not perform well. In addition, even if we constrain each layer of the \(g_{z}\) network to match in distribution the layers of \(\phi \), the quality of the generated samples deteriorates.

References

Arjovsky, M., Chintala, S., & Bottou, L. (2017). Wasserstein GAN. arXiv:1701.07875.

Ben-Israel, A. (1999). The change-of-variables formula using matrix volume. SIAM Journal on Matrix Analysis and Applications, 21(1), 300–312. https://doi.org/10.1137/S0895479895296896.

Boutsidis, C., Drineas, P., Kambadur, P., Kontopoulou, E. M., & Zouzias, A. (2017). A randomized algorithm for approximating the log determinant of a symmetric positive definite matrix. Linear Algebra and its Applications, 533, 95–117.

Burda, Y., Grosse, R., & Salakhutdinov, R. (2015). Importance weighted autoencoders.

Chen, X., Duan, Y., Houthooft, R., Schulman, J., Sutskever, I., & Abbeel, P. (2016). InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. CoRR, abs/1606.03657.

Das, A., Kottur, S., Gupta, K., Singh, A., Yadav, D., Moura, J.M., Parikh, D., & Batra, D. (2017). Visual Dialog. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR).

Diggle, P. J., & Gratton, R. J. (1984). Monte Carlo methods of inference for implicit statistical models. Journal of the Royal Statistical Society, 46, 193–227.

Dinh, L., Krueger, D., & Bengio, Y. (2014). NICE: Non-linear independent components estimation. CoRR, abs/1410.8516.

Dinh, L., Sohl-Dickstein, J., & Bengio, S. (2016). Density estimation using Real NVP.

Dumoulin, V., Belghazi, I., Poole, B., Mastropietro, O., Lamb, A., Arjovsky, M., & Courville, A. (2017). Adversarially learned inference. In International conference on learning representations.

Englert, P., & Toussaint, M. (2015). Inverse kkt–learning cost functions of manipulation tasks from demonstrations. In Proceedings of the international symposium of robotics research.

Espié, E., Wymann, B., Dimitrakakis, C., Guionneau, C., Coulom, R., & Sumner, A. (2000). Torcs, the open racing car simulator. http://torcs.sourceforge.net.

Finn, C., Christiano, P.F., Abbeel, P., & Levine, S. (2016). A connection between generative adversarial networks, inverse reinforcement learning, and energy-based models. CoRR, arXiv:1611.03852

Goodfellow, I.J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative adversarial nets. In International conference on neural information processing systems.

Gregor, K., Danihelka, I., Mnih, A., Blundell, C., & Wierstra, D. (2014). Deep autoregressive networks. In The international conference on machine learning (icml).

Grover, A., Dhar, M., & Ermon, S. (2018). Flow-gan: Combining maximum likelihood and adversarial learning in generative models. In Proceedings of the thirty-second AAAI conference on artificial intelligence, (AAAI-18), the 30th innovative applications of artificial intelligence (IAAI-18), and the 8th AAAI symposium on educational advances in artificial intelligence (EAAI-18), New Orleans, Louisiana, USA, February 2–7, 2018 (pp. 3069–3076).

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., & Courville, A. C. (2017). Improved training of Wasserstein GANs. CoRR, abs/1704.00028.

Gutmann, M. U., Dutta, R., Kaski, S., & Corander, J. (2018). Likelihood-free inference via classification. Statistics and Computing, 28, 411–425.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition.

Ho, J., & Ermon, S. (2016). Generative adversarial imitation learning. In Advances in neural information processing systems.

Jang, E., Gu, S., & Poole, B. (2016). Categorical reparameterization with gumbel-softmax. CoRR.

Karras, T., Aila, T., Laine, S., & Lehtinen, J. (2017). Progressive growing of gans for improved quality, stability, and variation. In The international conference on learning representations (ICLR).

Kingma, D. P., & Welling, M. (2014). Auto-encoding variational Bayes. In The international conference on learning representations (ICLR).

Kolesnikov, A., & Lampert, C. H. (2017). PixelCNN models with auxiliary variables for natural image modeling. In Precup D., & Teh Y.W. (eds) Proceedings of the 34th international conference on machine learning, PMLR, international convention centre, Sydney, Australia, Proceedings of Machine Learning Research (Vol. 70, pp. 1905–1914).

Konda, V. R., & Tsitsiklis, J. N. (2004). Convergence rate of linear two-time-scale stochastic approximation. The Annals of Applied Probability, 14(2), 796–819.

Krizhevsky, A., & Hinton, G. (2009). Learning multiple layers of features from tiny images.

Levine, S., & Koltun, V. (2013). Guided policy search. In International conference on machine learning.

Li, Y., Song, J., & Ermon, S. (2017). Infogail: interpretable imitation learning from visual demonstrations. arXiv preprint arXiv:1703.08840.

Lin, T. Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., & Zitnick, C. L. (2014). Microsoft COCO: Common objects in context. In Computer vision–ECCV 2014, Berlin: Springer International Publishing.

Liu, Z., Luo, P., Wang, X., & Tang, X. (2015). Deep learning face attributes in the wild. In Proceedings of international conference on computer vision (ICCV).

Lu, J., Yang, J., Batra, D., & Parikh, D. (2016). Hierarchical question-image co-attention for visual question answering. In Proceedings of the 30th international conference on neural information processing systems, Curran Associates Inc., USA, NIPS’16 (pp. 289–297). http://dl.acm.org/citation.cfm?id=3157096.3157129.

Lu, J., Kannan, A., Yang, J., Parikh, D., & Batra, D. (2017). Best of both worlds: Transferring knowledge from discriminative learning to a generative visual dialog model. In NIPS.

Maaløe, L., Sønderby, C. K., Sønderby, S. K., & Winther, O. (2016). Auxiliary deep generative models. In Proceedings of the 33rd international conference on machine learning, ICML’16 (pp. 1445–1454).

Maddison, C. J., Mnih, A., & Teh, Y. W. (2016). The concrete distribution: A continuous relaxation of discrete random variables. CoRR.

Martens, J. (2010). Deep learning via Hessian-free optimization. In Proceedings of the 27th international conference on machine learning, Omnipress, Madison, WI, USA, ICML’10 (pp. 735–742).

Metz, L., Poole, B., Pfau, D., & Sohl-Dickstein, J. (2016). Unrolled generative adversarial networks. In International conference on learning representations.

Nguyen, A., Clune, J., Bengio, Y., Dosovitskiy, A., & Yosinski, J. (2017). Plug & play generative networks: Conditional iterative generation of images in latent space. In Proceedings of the IEEE conference on computer vision and pattern recognition, IEEE.

Nowozin, S., Cseke, B., & Tomioka, R. (2016). f-GAN: Training generative neural samplers using variational divergence minimization. arXiv:1606.00709.

Oord, A., Kalchbrenner, N., Vinyals, O., Espeholt, L., Graves, A., & Kavukcuoglu, K. (2016). Conditional image generation with PixelCNN decoders. In Proceedings of the 30th international conference on neural information processing systems, NIPS’16 (pp. 4797–4805).

Pfau, D., & Vinyals, O. (2016). Connecting generative adversarial networks and actor-critic methods. CoRR, abs/1610.01945.

Price, B., & Boutilier, C. (2003). A bayesian approach to imitation in reinforcement learning. In Proceedings of the 18th international joint conference on artificial intelligence IJCAI’03 (pp. 712–717).

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. CoRR.

Ramachandran, D., & Amir, E. (2007). Bayesian inverse reinforcement learning. In Proceedings of the 20th international joint conference on artificial intelligence, IJCAI’07 (pp. 2586–2591).

Rezende, D. J., & Mohamed, S. (2015). Variational inference with normalizing flows. In Proceedings of the 32nd international conference on machine learning, JMLR.org, ICML’15 (pp. 1530–1538).

Saatchi, Y., & Wilson, A. G. (2017). Bayesian GAN. In Advances in neural information processing systems.

Salakhutdinov, R., & Hinton, G.E. (2009). Deep boltzmann machines. In International conference on artificial intelligence and statistics, AISTATS’09.

Salimans, T., Karpathy, A., Chen, X., & Kingma, D.P. (2017). Pixelcnn++: A pixelcnn implementation with discretized logistic mixture likelihood and other modifications. In The international conference on learning representations (ICLR).

Schulman, J., Levine, S., Moritz, P., Jordan, M., & Abbeel, P. (2015). Trust region policy optimization. In Proceedings of the 32nd international conference on machine learning (Vol. 37, pp. 1889–1897).

Serban, I. V., Sordoni, A., Bengio, Y., Courville, A., & Pineau, J. (2016). Building end-to-end dialogue systems using generative hierarchical neural network models. In Proceedings AAAI (pp. 3776–3783).

Shetty, R., Rohrbach, M., Hendricks, L. A., Fritz, M., & Schiele, B. (2017). Speaking the same language: Matching machine to human captions by adversarial training. CoRR, abs/1703.10476.

Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv.

Stadie, B., Abbeel, P., & Sutskever, I. (2017). Third person imitation learning. In International conference on learning representations.

Theis, L., van den Oord, A., & Bethge, M. (2015). A note on the evaluation of generative models. arXiv:1511.01844.

Wang, T. C., Liu, M. Y., Zhu, J. Y., Tao, A., Kautz, J., & Catanzaro, B. (2017). High-resolution image synthesis and semantic manipulation with conditional gans.

Welling, M., & Teh, Y. W. (2011). Bayesian learning via stochastic gradient Langevin dynamics. In Proceedings of the 28th international conference on machine learning (pp. 681–688).

Zhang, H., Xu, T., Li, H., Zhang, S., Wang, X., Huang, X., & Metaxas, D. (2017). StackGAN: Text to photo-realistic image synthesis with stacked generative adversarial networks. In ICCV.

Zheng, G., Yang, Y., & Carbonell, J. (2017). Likelihood almost free inference networks.

Zheng, G., Yang, Y., & Carbonell, J. (2018). Convolutional normalizing flows. In ICML workshop on theoretical foundations and applications of deep learning.

Ziebart, B. D., Maas, A., Bagnell, J. A., & Dey, A. K. (2008). Maximum entropy inverse reinforcement learning. In Proceedings of the 23rd national conference on artificial intelligence (Vol. 3, AAAI’08).

Additional information

Communicated by Jun-Yan Zhu, Hongsheng Li, Eli Shechtman, Ming-Yu Liu, Jan Kautz, Antonio Torralba.

Proof of Lemma 2

Proof

Since B is a positive definite matrix with \(\Vert B\Vert _{2}<1\), it follows that,

where \(\lambda _{i}(B)\) is the ith eigenvalue of the matrix B. We know for the second line that

Then we have,

For the second equality we use the Taylor expansion of the \(\log \), which applies since all the eigenvalues of \(\mathbf {I}_{d}-B\) are in (0, 1), and the last equality follows by the linearity of the trace operator. \(\square \)
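As a numerical sanity check of the argument above, the following sketch assumes that the lemma is the standard series identity \(\log \det (B) = -\sum _{k=1}^{\infty } \mathrm {tr}\left( (\mathbf {I}_{d}-B)^{k}\right) /k\) for a positive definite B with \(\Vert B\Vert _{2}<1\), which is what the eigenvalue, Taylor-expansion, and trace steps suggest. The function names, the test matrix, and the truncation length are illustrative choices and are not taken from the paper.

```python
import numpy as np


def logdet_series(B, num_terms=200):
    """Truncated series -sum_{k=1}^{K} tr((I - B)^k) / k (assumed form of the lemma)."""
    d = B.shape[0]
    C = np.eye(d) - B      # eigenvalues lie in (0, 1) when B is PD with ||B||_2 < 1
    power = np.eye(d)
    total = 0.0
    for k in range(1, num_terms + 1):
        power = power @ C  # power now holds C^k
        total -= np.trace(power) / k
    return total


def make_test_matrix(d=5, seed=0):
    """Hypothetical test case: symmetric PD matrix with eigenvalues in [0.2, 0.9]."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((d, d)))  # random orthogonal basis
    eigvals = np.linspace(0.2, 0.9, d)
    return Q @ np.diag(eigvals) @ Q.T


B = make_test_matrix()
exact = np.linalg.slogdet(B)[1]  # log|det(B)| computed directly
approx = logdet_series(B)        # truncated series approximation
print(exact, approx)
```

The truncation error is governed by the largest eigenvalue of \(\mathbf {I}_{d}-B\): the closer the smallest eigenvalue of B is to zero, the more terms the series needs.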

Cite this article

Abbasnejad, M.E., Shi, J., van den Hengel, A. et al. GADE: A Generative Adversarial Approach to Density Estimation and its Applications. Int J Comput Vis 128, 2731–2743 (2020). https://doi.org/10.1007/s11263-020-01360-9
