Abstract

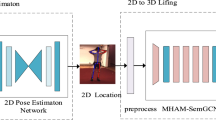

Methods for 3D human pose estimation based on graph convolutional networks (GCNs) usually aggregate only the immediate features of single-hop nodes. They are therefore unaware of the correlation among multi-hop nodes and neglect the long-range dependencies needed to predict complex poses. In addition, they typically operate on either single-scale or sequentially down-sampled multi-scale graph representations, resulting in the loss of contextual information or spatial details. To address these problems, this paper proposes a parallel hop-aware graph attention network (PHGANet) for 3D human pose estimation, which learns enriched hop-aware correlation of the skeleton joints while maintaining spatially precise representations of the human graph. Specifically, we propose a hop-aware skeletal graph attention (HSGAT) module to capture the semantic correlation of multi-hop nodes; it first calculates skeleton-based 1-hop attention and then disseminates it to arbitrary hops via graph connectivity. To alleviate the redundant noise introduced by interactions with distant nodes, HSGAT uses an attenuation strategy that separates the attention from distinct hops and adaptively assigns each a learnable attenuation weight according to its distance. Building on HSGAT, we construct PHGANet from multiple parallel branches of stacked HSGAT modules to learn the enriched hop-aware correlation of human skeletal structures at different scales. In addition, a joint centrality encoding scheme is proposed to introduce node importance as a bias in the learned graph representation, which makes the core joints (e.g., neck and pelvis) more influential during node aggregation. Experimental results indicate that PHGANet performs favorably against state-of-the-art methods on the Human3.6M and MPI-INF-3DHP benchmarks. Models and code are available at https://github.com/ChenyangWang95/PHGANet/.
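The hop-aware attention idea described above can be illustrated with a small NumPy sketch. This is not the released PHGANet code. The function names (`masked_softmax`, `hop_distance`, `hop_aware_attention`), the dot-product scoring, the toy 5-joint chain skeleton, and the softmax parameterization of the attenuation weights `theta` are all assumptions made for illustration. The sketch computes 1-hop attention restricted to skeleton edges, spreads it to further hops by repeated multiplication, keeps each hop's attention only at node pairs exactly that many hops apart, and mixes the hops with per-hop weights.

```python
import numpy as np

def masked_softmax(scores, mask):
    """Row-wise softmax over the entries where mask is True."""
    masked = np.where(mask, scores, -np.inf)
    masked = masked - masked.max(axis=1, keepdims=True)
    exp = np.exp(masked)
    return exp / exp.sum(axis=1, keepdims=True)

def hop_distance(adj, max_hop):
    """Shortest-path hop count between nodes; -1 if farther than max_hop."""
    n = adj.shape[0]
    dist = np.full((n, n), -1, dtype=int)
    np.fill_diagonal(dist, 0)
    reached = np.eye(n, dtype=bool)
    frontier = np.eye(n, dtype=bool)
    for k in range(1, max_hop + 1):
        # Nodes adjacent to the previous frontier that were not reached before.
        frontier = ((frontier.astype(int) @ adj) > 0) & ~reached
        dist[frontier] = k
        reached |= frontier
    return dist

def hop_aware_attention(x, adj, theta):
    """Combine 1..K-hop attention, K = len(theta) (hypothetical formulation)."""
    max_hop = len(theta)
    n = adj.shape[0]
    dist = hop_distance(adj, max_hop)
    # Assumed scoring: scaled dot product between joint features.
    scores = x @ x.T / np.sqrt(x.shape[1])
    one_hop = adj.astype(bool) | np.eye(n, dtype=bool)
    a1 = masked_softmax(scores, one_hop)  # skeleton-based 1-hop attention
    # Assumed attenuation: softmax over theta (learnable in a real model).
    w = np.exp(theta - np.max(theta))
    w = w / w.sum()
    combined = w[0] * a1
    a_k = a1
    for k in range(2, max_hop + 1):
        a_k = a_k @ a1  # disseminate attention one hop further
        # Keep only pairs that are exactly k hops apart, then attenuate.
        combined = combined + w[k - 1] * np.where(dist == k, a_k, 0.0)
    return combined / combined.sum(axis=1, keepdims=True)

# Toy skeleton: a chain of 5 joints (0-1-2-3-4), 8 features per joint.
adj = np.zeros((5, 5), dtype=int)
for i in range(4):
    adj[i, i + 1] = adj[i + 1, i] = 1
rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))
att = hop_aware_attention(x, adj, theta=np.array([0.0, -1.0]))
out = att @ x  # aggregated joint features
```

With `theta` of length 2, joints up to two hops apart exchange information, while pairs three or more hops apart receive zero attention. In a trained model the entries of `theta` would be learned rather than fixed.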

Data Availability

All datasets generated or analysed during the current study are included in the published articles (Ionescu et al. 2014; Mehta et al. 2017a). These datasets can be derived from the following public domain resources: https://github.com/jfzhang95/PoseAug/blob/main/DATASETS.md.

Change history

30 March 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11263-023-01786-x

References

Abu-El-Haija, S., Perozzi, B., Kapoor, A., Alipourfard, N., Lerman, K., Harutyunyan, H., Steeg, G. V., & Galstyan, A. (2019). Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In ICML.

Agarwal, A., & Triggs, B. (2006). Recovering 3D human pose from monocular images. TPAMI, 28(1), 44–58.

Bogo, F., Kanazawa, A., Lassner, C., Gehler, P. V., Romero, J., & Black, M. J. (2016). Keep it SMPL: Automatic estimation of 3D human pose and shape from a single image. In ECCV.

Cai, Y., Ge, L., Liu, J., Cai, J., Cham, T., Yuan, J., & Magnenat-Thalmann, N. (2019). Exploiting spatial-temporal relationships for 3D pose estimation via graph convolutional networks. In ICCV.

Chen, C., & Ramanan, D. (2017). 3D human pose estimation = 2D pose estimation + matching. In CVPR.

Chen, C., Tyagi, A., Agrawal, A., Drover, D., MV, R., Stojanov, S., & Rehg, J. M. (2019a). Unsupervised 3D pose estimation with geometric self-supervision. In CVPR.

Chen, T., Fang, C., Shen, X., Zhu, Y., Chen, Z., & Luo, J. (2021). Anatomy-aware 3D human pose estimation in videos. IEEE Transactions on Circuits and Systems for Video Technology, 32(1), 198–209.

Chen, Y., Huang, S., Yuan, T., Zhu, Y., Qi, S., & Zhu, S. (2019b). Holistic++ scene understanding: Single-view 3D holistic scene parsing and human pose estimation with human-object interaction and physical commonsense. In ICCV.

Chen, Y., Wang, Z., Peng, Y., Zhang, Z., Yu, G., & Sun, J. (2018). Cascaded pyramid network for multi-person pose estimation. In CVPR.

Chen, Z., Huang, Y., Yu, H., Xue, B., Han, K., Guo, Y., & Wang, L. (2020). Towards part-aware monocular 3D human pose estimation: An architecture search approach. In ECCV.

Ci, H., Wang, C., Ma, X., & Wang, Y. (2019). Optimizing network structure for 3D human pose estimation. In ICCV.

Defferrard, M., Bresson, X., & Vandergheynst, P. (2016). Convolutional neural networks on graphs with fast localized spectral filtering. In NIPS.

Doosti, B., Naha, S., Mirbagheri, M., & Crandall, D. J. (2020). Hope-net: A graph-based model for hand-object pose estimation. In CVPR.

Duvenaud, D., Maclaurin, D., Aguilera-Iparraguirre, J., Gómez-Bombarelli, R., Hirzel, T., Aspuru-Guzik, A., & Adams, R. P. (2015). Convolutional networks on graphs for learning molecular fingerprints. In NIPS.

Fang, H., Xu, Y., Wang, W., Liu, X., & Zhu, S. (2018). Learning pose grammar to encode human body configuration for 3D pose estimation. In AAAI.

Fang, Q., Shuai, Q., Dong, J., Bao, H., & Zhou, X. (2021). Reconstructing 3D human pose by watching humans in the mirror. In CVPR.

Garcia-Hernando, G., Yuan, S., Baek, S., & Kim, T. (2018). First-person hand action benchmark with RGB-D videos and 3D hand pose annotations. In CVPR.

Hamilton, W. L., Ying, Z., & Leskovec, J. (2017). Inductive representation learning on large graphs. In NIPS.

Hasson, Y., Varol, G., Tzionas, D., Kalevatykh, I., Black, M. J., Laptev, I., & Schmid, C. (2019). Learning joint reconstruction of hands and manipulated objects. In CVPR.

Henaff, M., Bruna, J., & LeCun, Y. (2015). Deep convolutional networks on graph-structured data. arXiv:1506.05163

Hossain, M. R. I., & Little, J. J. (2018). Exploiting temporal information for 3D human pose estimation. In ECCV.

Hu, J., Shen, L., & Sun, G. (2018). Squeeze-and-excitation networks. In CVPR.

Hu, W., Zhang, C., Zhan, F., Zhang, L., & Wong, T. (2021). Conditional directed graph convolution for 3D human pose estimation. In ACM MM.

Ionescu, C., Papava, D., Olaru, V., & Sminchisescu, C. (2014). Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. TPAMI, 36(7), 1325–1339.

Kingma, D. P., & Ba, J. (2015). Adam: A method for stochastic optimization. In ICLR.

Kipf, T. N., & Welling, M. (2017). Semi-supervised classification with graph convolutional networks. In ICLR.

Lee, K., Lee, I., & Lee, S. (2018). Propagating LSTM: 3D pose estimation based on joint interdependency. In ECCV.

Li, G., Müller, M., Thabet, A. K., & Ghanem, B. (2019). Deepgcns: Can GCNs go as deep as CNNs? In ICCV.

Li, H., Shi, B., Dai, W., Chen, Y., Wang, B., Sun, Y., Guo, M., Li, C., Zou, J., & Xiong, H. (2021). Hierarchical graph networks for 3D human pose estimation. In BMVC.

Li, S., & Chan, A. B. (2014). 3D human pose estimation from monocular images with deep convolutional neural network. In ACCV.

Li, S., Zhang, W., & Chan, A. B. (2017). Maximum-margin structured learning with deep networks for 3D human pose estimation. IJCV.

Li, S., Ke, L., Pratama, K., Tai, Y., Tang, C., & Cheng, K. (2020). Cascaded deep monocular 3D human pose estimation with evolutionary training data. In CVPR.

Lin, T., Dollár, P., Girshick, R. B., He, K., Hariharan, B., & Belongie, S. J. (2017). Feature pyramid networks for object detection. In CVPR.

Liu, K., Ding, R., Zou, Z., Wang, L., & Tang, W. (2020a). A comprehensive study of weight sharing in graph networks for 3D human pose estimation. In ECCV.

Liu, K., Zou, Z., & Tang, W. (2020b). Learning global pose features in graph convolutional networks for 3D human pose estimation. In ACCV.

Liu, M., Liu, H., & Chen, C. (2017). Enhanced skeleton visualization for view invariant human action recognition. Pattern Recognition, 68, 346–362.

Liu, M., & Yuan, J. (2018). Recognizing human actions as the evolution of pose estimation maps. In CVPR.

Liu, R., Shen, J., Wang, H., Chen, C., Cheung, S. S., & Asari, V. K. (2020c). Attention mechanism exploits temporal contexts: Real-time 3D human pose reconstruction. In CVPR.

Liu, R., Shen, J., Wang, H., Chen, C., Cheung, S. S., & Asari, V. K. (2021). Enhanced 3D human pose estimation from videos by using attention-based neural network with dilated convolutions. IJCV.

Luo, C., Chu, X., & Yuille, A. L. (2018). Orinet: A fully convolutional network for 3D human pose estimation. In BMVC.

Luvizon, D. C., Picard, D., & Tabia, H. (2022). Consensus-based optimization for 3D human pose estimation in camera coordinates. IJCV.

Martinez, J., Hossain, R., Romero, J., & Little, J. J. (2017). A simple yet effective baseline for 3D human pose estimation. In ICCV.

Mehta, D., Rhodin, H., Casas, D., Fua, P., Sotnychenko, O., Xu, W., & Theobalt, C. (2017a). Monocular 3D human pose estimation in the wild using improved CNN supervision. In 3DV.

Mehta, D., Sridhar, S., Sotnychenko, O., Rhodin, H., Shafiei, M., Seidel, H., Xu, W., Casas, D., & Theobalt, C. (2017b). Vnect: Real-time 3D human pose estimation with a single RGB camera. ACM Transactions on Graphics, 36(4), 44:1–44:14.

Moon, G., & Lee, K. M. (2020). I2l-meshnet: Image-to-lixel prediction network for accurate 3D human pose and mesh estimation from a single RGB image. In ECCV.

Mueller, F., Bernard, F., Sotnychenko, O., Mehta, D., Sridhar, S., Casas, D., & Theobalt, C. (2018). Ganerated hands for real-time 3D hand tracking from monocular RGB. In CVPR.

Newell, A., Yang, K., & Deng, J. (2016). Stacked hourglass networks for human pose estimation. In ECCV.

Pavlakos, G., Zhou, X., Derpanis, K. G., & Daniilidis, K. (2017). Coarse-to-fine volumetric prediction for single-image 3D human pose. In CVPR.

Pavllo, D., Feichtenhofer, C., Grangier, D., & Auli, M. (2019). 3D human pose estimation in video with temporal convolutions and semi-supervised training. In CVPR.

Pustejovsky, J., & Krishnaswamy, N. (2021). Embodied human computer interaction. Künstliche Intell, 35(3), 307–327.

Quan, J., & Hamza, A. B. (2021). Higher-order implicit fairing networks for 3D human pose estimation. In BMVC.

Sharma, S., Varigonda, P. T., Bindal, P., Sharma, A., & Jain, A. (2019). Monocular 3D human pose estimation by generation and ordinal ranking. In ICCV.

Srivastava, N., Hinton, G. E., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. (2014). Dropout: A simple way to prevent neural networks from overfitting. The Journal of Machine Learning Research, 15(1), 1929–1958.

Sun, X., Shang, J., Liang, S., & Wei, Y. (2017). Compositional human pose regression. In ICCV.

Takano, W., & Nakamura, Y. (2015). Action database for categorizing and inferring human poses from video sequences. Robotics and Autonomous Systems, 70, 116–125.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017). Attention is all you need. In NIPS.

Velickovic, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., & Bengio, Y. (2018). Graph attention networks. In ICLR.

Wandt, B., Ackermann, H., & Rosenhahn, B. (2018). A kinematic chain space for monocular motion capture. In ECCV.

Wang, G., Ying, R., Huang, J., & Leskovec, J. (2021a). Multi-hop attention graph neural networks. In IJCAI.

Wang, J., Sun, K., Cheng, T., Jiang, B., Deng, C., Zhao, Y., Liu, D., Mu, Y., Tan, M., Wang, X., Liu, W., & Xiao, B. (2021). Deep high-resolution representation learning for visual recognition. TPAMI, 43(10), 3349–3364.

Wang, J., Yan, S., Xiong, Y., & Lin, D. (2020). Motion guided 3D pose estimation from videos. In ECCV.

Wang, L., Chen, Y., Guo, Z., Qian, K., Lin, M., Li, H., & Ren, J. S. J. (2019). Generalizing monocular 3D human pose estimation in the wild. In ICCV.

Xie, K., Wang, T., Iqbal, U., Guo, Y., Fidler, S., & Shkurti, F. (2021). Physics-based human motion estimation and synthesis from videos. In ICCV.

Xiong, R., Yang, Y., He, D., Zheng, K., Zheng, S., Xing, C., Zhang, H., Lan, Y., Wang, L., & Liu, T. (2020). On layer normalization in the transformer architecture. In ICML.

Xu, K., Li, C., Tian, Y., Sonobe, T., Kawarabayashi, K., & Jegelka, S. (2018). Representation learning on graphs with jumping knowledge networks. In ICML.

Xu, T., & Takano, W. (2021). Graph stacked hourglass networks for 3D human pose estimation. In CVPR.

Yang, W., Ouyang, W., Wang, X., Ren, J. S. J., Li, H., & Wang, X. (2018). 3D human pose estimation in the wild by adversarial learning. In CVPR.

Zhao, L., Peng, X., Tian, Y., Kapadia, M., & Metaxas, D. N. (2019). Semantic graph convolutional networks for 3D human pose regression. In CVPR.

Zhao, W., Tian, Y., Ye, Q., Jiao, J., & Wang, W. (2022). Graformer: Graph convolution transformer for 3D pose estimation.

Zheng, C., Zhu, S., Mendieta, M., Yang, T., Chen, C., & Ding, Z. (2021). 3D human pose estimation with spatial and temporal transformers. In ICCV.

Zhou, K., Han, X., Jiang, N., Jia, K., & Lu, J. (2019). Hemlets pose: Learning part-centric heatmap triplets for accurate 3D human pose estimation. In ICCV.

Zhou, X., Huang, Q., Sun, X., Xue, X., & Wei, Y. (2017). Towards 3D human pose estimation in the wild: A weakly-supervised approach. In ICCV.

Zou, Z., & Tang, W. (2021). Modulated graph convolutional network for 3D human pose estimation. In ICCV.

Zou, Z., Liu, K., Wang, L., & Tang, W. (2020). High-order graph convolutional networks for 3D human pose estimation. In BMVC.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Nos. 62272134, 62236003 and 62072141), in part by the Taishan Scholars Program of Shandong Province (No. tsqn201812106), in part by the Shenzhen Colleges and Universities Stable Support Program (No. GXWD20220817144428005), in part by the National Key R&D Program of China (No. 2021ZD0110901), and in part by the CAAI-Huawei MindSpore Open Fund.

Additional information

Communicated by Wenjun Kevin Zeng.

About this article

Cite this article

Zhang, S., Wang, C., Nie, L. et al. Learning Enriched Hop-Aware Correlation for Robust 3D Human Pose Estimation. Int J Comput Vis 131, 1566–1583 (2023). https://doi.org/10.1007/s11263-023-01770-5

DOI: https://doi.org/10.1007/s11263-023-01770-5