Abstract

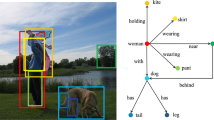

Scene graph aims to faithfully reveal humans’ perception of image content. When humans look at a scene, they usually focus on their interested parts in a special priority. This innate habit indicates a hierarchical preference about human perception. Therefore, we argue to generate the Scene Graph of Interest which should be hierarchically constructed, so that the important primary content is firstly presented while the secondary one is presented on demand. To achieve this goal, we propose the Tree–Guided Importance Ranking (TGIR) model. We represent the scene with a hierarchical structure by firstly detecting objects in the scene and organizing them into a Hierarchical Entity Tree (HET) according to their spatial scale, considering that larger objects are more likely to be noticed instantly. After that, the scene graph is generated guided by structural information of HET which is modeled by the elaborately designed Hierarchical Contextual Propagation (HCP) module. To further highlight the key relationship in the scene graph, all relationships are re-ranked through additionally estimating their importance by the Relationship Ranking Module (RRM). To train RRM, the most direct way is to collect the key relationship annotation, which is the so-called Direct Supervision scheme. As collecting annotation may be cumbersome, we further utilize two intuitive and effective cues, visual saliency and spatial scale, and treat them as Approximate Supervision, according to the findings that these cues are positively correlated with relationship importance. With these readily available cues, the RRM is still able to estimate the importance even without key relationship annotation. Experiments indicate that our method not only achieves state-of-the-art performances on scene graph generation, but also is expert in mining image-specific relationships which play a great role in serving subsequent tasks such as image captioning and cross-modal retrieval.

Similar content being viewed by others

Notes

For convenience, we use “anchor” to refer to the target object that we consider in following parts.

Source code and our collected dataset are available at https://github.com/Kenneth-Wong/TGIR.

There exist some differences between the performances of our conference version and this paper.

References

Antol, S., Agrawal, A., Lu, J., Mitchell, M., Batra, D., Lawrence Zitnick, C., & Parikh, D. (2015). Vqa: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 2425–2433).

Biederman, I. (2017). On the semantics of a glance at a scene. In: Perceptual Organization (pp. 213–253). Routledge.

Bordalo, P., Gennaioli, N., & Shleifer, A. (2012). Salience theory of choice under risk. The Quarterly Journal of Economics, 127(3), 1243–1285.

Chen, S., Jin, Q., Wang, P., & Wu, Q. (2020). Say as you wish: Fine-grained control of image caption generation with abstract scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 9962–9971).

Chen, T., Yu, W., Chen, R., & Lin, L. (2019). Knowledge-embedded routing network for scene graph generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 6163–6171).

Chiou, M.J., Ding, H., Yan, H., Wang, C., Zimmermann, R., & Feng, J. (2021). Recovering the unbiased scene graphs from the biased ones. In Proceedings of the ACM International Conference on Multimedia (ACM-MM) (pp. 1581–1590).

Chung, J., Gulcehre, C., Cho, K., & Bengio, Y. (2014). Empirical evaluation of gated recurrent neural networks on sequence modeling. In: Advances in Neural Information Processing Systems (NIPS) Workshop on Deep Learning.

Dai, B., Zhang, Y., & Lin, D. (2017). Detecting visual relationships with deep relational networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3298–3308).

Deng, Z., Hu, X., Zhu, L., Xu, X., Qin, J., Han, G., & Heng, P.A. (2018). R3net: Recurrent residual refinement network for saliency detection. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (AAAI) (pp. 684–690).

Dhamo, H., Farshad, A., Laina, I., Navab, N., Hager, G.D., Tombari, F., & Rupprecht, C. (2020). Semantic image manipulation using scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 5213–5222).

Gu, J., Joty, S., Cai, J., Zhao, H., Yang, X., & Wang, G. (2019). Unpaired image captioning via scene graph alignments. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 10,323–10,332).

Gu, J., Zhao, H., Lin, Z., Li, S., Cai, J., & Ling, M. (2019). Scene graph generation with external knowledge and image reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 1969–1978).

Guo, Y., Gao, L., Wang, X., Hu, Y., Xu, X., Lu, X., Shen, H.T., & Song, J. (2021). From general to specific: Informative scene graph generation via balance adjustment. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 16,383–16,392).

Han, F., & Zhu, S. C. (2008). Bottom-up/top-down image parsing with attribute grammar. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 31(1), 59–73.

He, S., Tavakoli, H.R., Borji, A., & Pugeault, N. (2019). Human attention in image captioning: Dataset and analysis. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 8529–8538).

Herzig, R., Bar, A., Xu, H., Chechik, G., Darrell, T., & Globerson, A. (2020). Learning canonical representations for scene graph to image generation. In Proceedings of European Conference on Computer Vision (ECCV), vol. 12371, (pp. 210–227). Springer.

Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735–1780.

Hou, Q., Cheng, M.M., Hu, X., Borji, A., Tu, Z., & Torr, P. (2017). Deeply supervised salient object detection with short connections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3203–3212).

Itti, L., Koch, C., & Niebur, E. (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 20(11), 1254–1259.

Johnson, J., Gupta, A., & Fei-Fei, L. (2018). Image generation from scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 1219–1228).

Johnson, J., Krishna, R., Stark, M., Li, L.J., Shamma, D., Bernstein, M., & Fei-Fei, L. (2015). Image retrieval using scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3668–3678).

Kahneman, D., Slovic, S.P., Slovic, P., & Tversky, A. (1982). Judgment under uncertainty: Heuristics and biases. Cambridge University Press.

Kim, D.J., Choi, J., Oh, T.H., & Kweon, I.S. (2019). Dense relational captioning: Triple-stream networks for relationship-based captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 6271–6280).

Klein, D.A., & Frintrop, S. (2011). Center-surround divergence of feature statistics for salient object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 2214–2219).

Koner, R., Sinhamahapatra, P., & Tresp, V. (2020). Relation transformer network. arXiv:2004.06193.

Krishna, R., Zhu, Y., Groth, O., Johnson, J., Hata, K., Kravitz, J., Chen, S., Kalantidis, Y., Li, L. J., Shamma, D. A., Bernstein, M. S., & Fei-Fei, L. (2017). Visual genome: Connecting language and vision using crowdsourced dense image annotations. International Journal of Computer Vision (IJCV), 123(1), 32–73.

Lee, K.H., Chen, X., Hua, G., Hu, H., & He, X. (2018). Stacked cross attention for image-text matching. In Proceedings of European Conference on Computer Vision (ECCV) vol. 11208, (pp. 201–216). Springer.

Li, G., & Yu, Y. (2015). Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 5455–5463).

Li, R., Zhang, S., Wan, B., & He, X. (2021). Bipartite graph network with adaptive message passing for unbiased scene graph generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 11,109–11,119).

Li, X., & Jiang, S. (2019). Know more say less: Image captioning based on scene graphs. IEEE Transactions on Multimedia (TMM), 21(8), 2117–2130.

Li, Y., Ouyang, W., Wang, X., & Tang, X. (2017). Vip-cnn: Visual phrase guided convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 7244–7253).

Li, Y., Ouyang, W., Zhou, B., Shi, J., Zhang, C., & Wang, X. (2018) Factorizable net: an efficient subgraph-based framework for scene graph generation. In Proceedings of European Conference on Computer Vision (ECCV) vol. 11205, (pp. 346–363). Springer.

Li, Y., Ouyang, W., Zhou, B., Wang, K., & Wang, X. (2017). Scene graph generation from objects, phrases and region captions. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 1261–1270).

Liang, X., Lee, L., Xing, E.P. (2017). Deep variation-structured reinforcement learning for visual relationship and attribute detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 4408–4417).

Liang, Y., Bai, Y., Zhang, W., Qian, X., Zhu, L., & Mei, T. (2019). Vrr-vg: Refocusing visually-relevant relationships. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 10,403–10,412).

Lin, L., Wang, G., Zhang, R., Zhang, R., Liang, X., & Zuo, W. (2016). Deep structured scene parsing by learning with image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 2276–2284).

Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., & Zitnick, C.L. (2014). Microsoft coco: Common objects in context. In Proceedings of European Conference on Computer Vision (ECCV) vol. 8693, (pp. 740–755). Springer.

Lin, X., Ding, C., Zeng, J., & Tao, D. (2020). Gps-net: Graph property sensing network for scene graph generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3746–3755).

Liu, N., Han, J., & Yang, M.H. (2018). Picanet: Learning pixel-wise contextual attention for saliency detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3089–3098).

Lu, C., Krishna, R., Bernstein, M., & Fei-Fei, L. (2016). Visual relationship detection with language priors. In: Proceedings of European Conference on Computer Vision (ECCV) vol. 9905, (pp. 852–869). Springer.

Lu, Y., Rai, H., Chang, J., Knyazev, B., Yu, G., Shekhar, S., Taylor, G.W., & Volkovs, M. (2021). Context-aware scene graph generation with seq2seq transformers. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 15,931–15,941).

Lv, J., Xiao, Q., & Zhong, J. (2020). Avr: Attention based salient visual relationship detection. arXiv:2003.07012.

Miller, G. A. (1992). Wordnet: A lexical database for English. Communication of the ACM, 38(11), 39–41.

Navon, D. (1977). Forest before trees: The precedence of global features in visual perception. Cognitive Psychology, 9(3), 353–383.

Nguyen, K., Tripathi, S., Du, B., Guha, T., & Nguyen, T.Q. (2021) In defense of scene graphs for image captioning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 1407–1416).

Pennington, J., Socher, R., & Manning, C. (2014). Glove: Global vectors for word representation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 1532–1543).

Peyre, J., Laptev, I., Schmid, C., & Sivic, J. (2017). Weakly-supervised learning of visual relations. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 5179–5188).

Pont-Tuset, J., Uijlings, J., Changpinyo, S., Soricut, R., & Ferrari, V. (2020). Connecting vision and language with localized narratives. In Proceedings of European Conference on Computer Vision (ECCV) vol. 12350, (pp. 647–664). Springer.

Qi, M., Li, W., Yang, Z., Wang, Y., & Luo, J. (2019). Attentive relational networks for mapping images to scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3957–3966).

Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems (NIPS) (pp. 91–99).

Schuster, S., Krishna, R., Chang, A., Fei-Fei, L., & Manning, C.D. (2015) Generating semantically precise scene graphs from textual descriptions for improved image retrieval. In Proceedings of the Fourth Workshop on Vision and Language (pp. 70–80).

Sharma, A., Tuzel, O., & Jacobs, D.W. (2015). Deep hierarchical parsing for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 530–538).

Shi, J., Zhang, H., & Li, J. (2019). Explainable and explicit visual reasoning over scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 8376–8384).

Socher, R., Lin, C.C., Manning, C., & Ng, A.Y. (2011). Parsing natural scenes and natural language with recursive neural networks. In Proceedings of the International Conference on Machine Learning (ICML) (pp. 129–136).

Suhail, M., Mittal, A., Siddiquie, B., Broaddus, C., Eledath, J., Medioni, G., Sigal, L. (2021). Energy-based learning for scene graph generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 13,936–13,945).

Tai, K.S., Socher, R., Manning, C.D. (2015). Improved semantic representations from tree-structured long short-term memory networks. In Proceedings of the Annual Meeting of the Association for Computational Linguistics (ACL) (pp. 1556–1566).

Tang, C., Xie, L., Zhang, X., Hu, X., Tian, Q. (2022). Visual recognition by request. arXiv:2207.14227.

Tang, K., Niu, Y., Huang, J., Shi, J., Zhang, H. (2020). Unbiased scene graph generation from biased training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3716–3725).

Tang, K., Zhang, H., Wu, B., Luo, W., Liu, W. (2019). Learning to compose dynamic tree structures for visual contexts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 6619–6628).

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (NIPS) (pp. 5998–6008).

Velickovic, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., & Bengio, Y. (2018). Graph attention networks. In International Conference on Learning Representations (ICLR).

Wang, L., Lu, H., Ruan, X., & Yang, M.H. (2015). Deep networks for saliency detection via local estimation and global search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3183–3192).

Wang, S., Wang, R., Yao, Z., Shan, S., & Chen, X. (2020). Cross-modal scene graph matching for relationship-aware image-text retrieval. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV) (pp. 1508–1517).

Wang, T., Borji, A., Zhang, L., Zhang, P., & Lu, H. (2017). A stagewise refinement model for detecting salient objects in images. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 4019–4028).

Wang, W., Wang, R., & Chen, X. (2021). Topic scene graph generation by attention distillation from caption. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 15,900–15,910).

Wang, W., Wang, R., Shan, S., & Chen, X. (2019). Exploring context and visual pattern of relationship for scene graph generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 8188–8197).

Wang, W., Wang, R., Shan, S., & Chen, X. (2020). Sketching image gist: Human-mimetic hierarchical scene graph generation. In Proceedings of European Conference on Computer Vision (ECCV) vol. 12358, (pp. 222–239). Springer.

Xie, S., Girshick, R., Dollár, P., Tu, Z., & He, K. (2017). Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 5987–5995).

Xie, Y., Lu, H., & Yang, M. H. (2012). Bayesian saliency via low and mid level cues. IEEE Transactions on Image Processing (TIP), 22(5), 1689–1698.

Xu, D., Zhu, Y., Choy, C.B., & Fei-Fei, L. (2017). Scene graph generation by iterative message passing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 5410–5419).

Xu, N., Liu, A. A., Liu, J., Nie, W., & Su, Y. (2019). Scene graph captioner: Image captioning based on structural visual representation. Journal of Visual Communication and Image Representation, 58, 477–485.

Yan, S., Shen, C., Jin, Z., Huang, J., Jiang, R., Chen, Y., & Hua, X.S. (2020). Pcpl: Predicate-correlation perception learning for unbiased scene graph generation. In Proceedings of the ACM International Conference on Multimedia (ACM-MM) (pp. 265–273).

Yang, J., Lu, J., Lee, S., Batra, D., Parikh, D. (2018). Graph r-cnn for scene graph generation. In Proceedings of European Conference on Computer Vision (ECCV) vol. 11205, (pp. 690–706). Springer.

Yang, X., Tang, K., Zhang, H., & Cai, J. (2019). Auto-encoding scene graphs for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 10,685–10,694).

Yao, T., Pan, Y., Li, Y., & Mei, T. (2018). Exploring visual relationship for image captioning. In Proceedings of European Conference on Computer Vision (ECCV) vol. 11218, (pp. 711–727). Springer.

Yao, T., Pan, Y., Li, Y., & Mei, T. (2019). Hierarchy parsing for image captioning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 2621–2629).

Yin, G., Sheng, L., Liu, B., Yu, N., Wang, X., Shao, J., & Loy, C.C. (2018). Zoom-net: Mining deep feature interactions for visual relationship recognition. In Proceedings of European Conference on Computer Vision (ECCV) vol. 11207, (pp. 330–347). Springer.

Yu, J., Chai, Y., Wang, Y., Hu, Y., & Wu, Q. (2021). Cogtree: Cognition tree loss for unbiased scene graph generation. In Proceedings of International Joint Conferences on Artificial Intelligence (IJCAI) (pp. 1274–1280).

Yu, R., Li, A., Morariu, V.I., & Davis, L.S. (2017). Visual relationship detection with internal and external linguistic knowledge distillation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 1974–1982).

Zareian, A., Karaman, S., & Chang, S.F. (2020a). Bridging knowledge graphs to generate scene graphs. In Proceedings of European Conference on Computer Vision (ECCV) vol. 12368, (pp. 606–623). Springer.

Zareian, A., Karaman, S., & Chang, S.F. (2020b). Weakly supervised visual semantic parsing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3736–3745).

Zareian, A., You, H., Wang, Z., & Chang, S.F. (2020c). Learning visual commonsense for robust scene graph generation. In Proceedings of European Conference on Computer Vision (ECCV) vol. 12368, (pp. 642–657). Springer.

Zellers, R., Yatskar, M., Thomson, S., & Choi, Y. (2018). Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 5831–5840).

Zhang, H., Kyaw, Z., Chang, S.F., & Chua, T.S. (2017a) Visual translation embedding network for visual relation detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) pp. 5532–5540).

Zhang, H., Kyaw, Z., Yu, J., & Chang, S.F. (2017b). Ppr-fcn: Weakly supervised visual relation detection via parallel pairwise r-fcn. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) (pp. 4233–4241).

Zhang, J., Kalantidis, Y., Rohrbach, M., Paluri, M., Elgammal, A., & Elhoseiny, M. (2019). Large-scale visual relationship understanding. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI) (pp. 9185–9194).

Zhang, J., Shih, K.J., Elgammal, A., Tao, A., & Catanzaro, B. (2019). Graphical contrastive losses for scene graph parsing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 11,535–11,543).

Zhang, L., Zhang, J., Lin, Z., Lu, H., & He, Y. (2019).Capsal: Leveraging captioning to boost semantics for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) pp. 6024–6033).

Zhong, Y., Wang, L., Chen, J., Yu, D., & Li, Y.(2020). Comprehensive image captioning via scene graph decomposition. In Proceedings of European Conference on Computer Vision (ECCV) vol. 12359, (pp. 211–229). Springer.

Zhu, L., Chen, Y., Lin, Y., Lin, C., & Yuille, A. (2011). Recursive segmentation and recognition templates for image parsing. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 34(2), 359–371.

Acknowledgements

This work is partially supported by Natural Science Foundation of China under contracts Nos. U21B2025, U19B2036, 61922080, and National Key R &D Program of China No. 2021ZD0111901.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, W., Wang, R., Shan, S. et al. Importance First: Generating Scene Graph of Human Interest. Int J Comput Vis 131, 2489–2515 (2023). https://doi.org/10.1007/s11263-023-01817-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11263-023-01817-7