Abstract

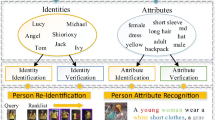

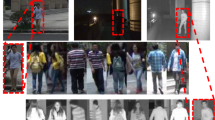

Attribute-image person re-identification (AIPR) is a cross-modal retrieval task that searches for person images matching a given list of attributes. Because of the large modal gap between attributes and images, current AIPR methods generally depend on cross-modal feature alignment, but they pay little attention to similarity metric jitter across modal configurations (i.e., attribute probe vs. image gallery, image probe vs. attribute gallery, image probe vs. image gallery, and attribute probe vs. attribute gallery). In this paper, we propose a modal-consistent metric learning (MCML) method that stably measures comprehensive similarities between attributes and images. MCML differs from previous methods in two significant ways. First, it provides a complete multi-modal triplet (CMMT) loss function that pulls the farthest positive pair as close together as possible while pushing the nearest negative pair as far apart as possible, independent of the modalities of the samples involved. Second, it develops a modal-consistent matching regularization (MCMR) that reduces the diversity of matching matrices and guides consistent matching behavior across modal configurations. By integrating the CMMT loss function and MCMR, MCML requires no complex cross-modal feature alignment. Theoretically, we derive a generalization bound that establishes the stability of the MCML model by applying on-average stability. Experimentally, extensive results on the PETA and Market-1501 datasets show that the proposed MCML is superior to state-of-the-art approaches.
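To make the two ingredients concrete, the following is a minimal NumPy sketch, not the formulation used in the paper. It assumes that image and attribute embeddings already live in a shared space and that row i of each array carries the same identity label. The function names, the margin value, and the variance-based consistency penalty are illustrative assumptions; the exact definitions of the CMMT loss and MCMR are not given in this section.

```python
import numpy as np

def pairwise_dist(x, y):
    """Euclidean distances between rows of x (n, d) and rows of y (m, d)."""
    sq = (x ** 2).sum(1)[:, None] + (y ** 2).sum(1)[None, :] - 2.0 * x @ y.T
    return np.sqrt(np.maximum(sq, 0.0))

def cmmt_style_loss(img_emb, attr_emb, labels, margin=0.3):
    """Hard-mined triplet loss over pooled image and attribute embeddings.

    Assumption: row i of img_emb and attr_emb both belong to labels[i].
    """
    emb = np.concatenate([img_emb, attr_emb], axis=0)  # pool both modalities
    lab = np.concatenate([labels, labels], axis=0)
    dist = pairwise_dist(emb, emb)
    same = lab[:, None] == lab[None, :]
    not_self = ~np.eye(len(lab), dtype=bool)
    # Farthest positive and nearest negative per anchor, whatever the modality.
    hardest_pos = np.where(same & not_self, dist, -np.inf).max(axis=1)
    hardest_neg = np.where(~same, dist, np.inf).min(axis=1)
    return np.maximum(hardest_pos - hardest_neg + margin, 0.0).mean()

def matching_consistency_penalty(img_emb, attr_emb):
    """Hypothetical stand-in for MCMR: element-wise variance of the distance
    matrices of the four modal configurations, averaged over all entries."""
    mats = np.stack([
        pairwise_dist(attr_emb, img_emb),   # attribute probe vs. image gallery
        pairwise_dist(img_emb, attr_emb),   # image probe vs. attribute gallery
        pairwise_dist(img_emb, img_emb),    # image probe vs. image gallery
        pairwise_dist(attr_emb, attr_emb),  # attribute probe vs. attribute gallery
    ])
    return mats.var(axis=0).mean()

# Toy batch: two identities, two samples each, attributes perfectly aligned.
labels = np.array([0, 0, 1, 1])
img = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])
attr = img.copy()
total = cmmt_style_loss(img, attr, labels) + matching_consistency_penalty(img, attr)
```

Because the hardest positive and negative are mined from the pooled batch, the same loss covers all four probe/gallery configurations without a separate alignment term; the penalty shrinks as the four distance matrices agree.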

Notes

We use \(i \in [m]\) to denote that \(i\) is an index taken from the set \([m] = \{1, 2, \ldots, m\}\). The same notation applies to \(l_i \in [c]\).

The single-modal HMT loss function means only images are applied to the HMT loss function, while the cross-modal HMT loss function means both images and attributes are applied to the HMT loss function.


Additional information

Communicated by Suha Kwak, Ph.D.

This work was supported in part by the National Key R&D Program of China under Grant 2021YFE0205400, in part by the National Natural Science Foundation of China under Grants 61976098 and 62002090, and in part by the Natural Science Foundation for Outstanding Young Scholars of Fujian Province under Grant 2022J06023.

About this article

Cite this article

Zhu, J., Liu, L., Zhan, Y. et al. Attribute-Image Person Re-identification via Modal-Consistent Metric Learning. Int J Comput Vis 131, 2959–2976 (2023). https://doi.org/10.1007/s11263-023-01841-7

DOI: https://doi.org/10.1007/s11263-023-01841-7