Abstract

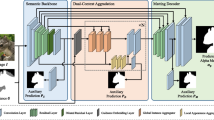

Although the conventional matting formulation can separate foreground from background in regions of fractional occupancy, which can be caused by highly transparent objects, complex foregrounds (e.g., nets or trees), and objects containing very fine details (e.g., hair), no previous work has attempted to reason in general about the underlying causes of matting that arise from different foreground semantics. We show how to obtain better alpha mattes by incorporating semantic classification of matting regions into our framework. Specifically, we consider and learn 20 classes of general matting patterns, and propose to extend the conventional trimap to a semantic trimap. The proposed semantic trimap can be obtained automatically through patch structure analysis within trimap regions. Meanwhile, we learn a multi-class discriminator to regularize the alpha prediction at the semantic level, and content-sensitive weights to balance different regularization losses. Experiments on multiple benchmarks show that our method, benefiting from such general alpha semantics, outperforms other methods and achieves competitive state-of-the-art performance. We further explore the effectiveness of our method on specific semantics by specializing it to human matting and transparent object matting. Experimental results on these specific semantics demonstrate that alpha matte semantic information can boost performance not only for general matting but also for class-specific matting. Finally, we contribute a large-scale Semantic Image Matting Dataset, constructed with careful consideration of data balancing across different semantic classes. Code and dataset are available at https://github.com/nowsyn/SIM.
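The "conventional matting formulation" mentioned above is the standard compositing equation I = αF + (1 − α)B, where each observed pixel I blends a foreground colour F and a background colour B according to an alpha value α in [0, 1]. Fractional α is the "fractional occupancy" the abstract refers to. The following is a minimal sketch of that equation together with a hypothetical container for a semantic trimap, that is, a conventional trimap whose unknown pixels additionally carry one of the 20 matting-pattern classes. The trimap values (0, 128, 255), the `SemanticTrimap` class and its per-pixel label layout are illustrative assumptions only. They are not the encoding used by the authors' released code.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]


def composite(alpha: float, fg: RGB, bg: RGB) -> RGB:
    """Compositing equation I = alpha * F + (1 - alpha) * B for one RGB pixel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


# Assumed trimap convention (hypothetical): 0 = background, 128 = unknown, 255 = foreground.
BG, UNKNOWN, FG = 0, 128, 255


@dataclass
class SemanticTrimap:
    """Hypothetical semantic trimap: a conventional trimap plus a class index
    in [0, num_classes) for every unknown pixel, and None for known pixels."""
    trimap: List[List[int]]
    labels: List[List[Optional[int]]]
    num_classes: int = 20

    def validate(self) -> None:
        # Both maps must have identical shapes.
        if len(self.trimap) != len(self.labels) or any(
            len(t) != len(l) for t, l in zip(self.trimap, self.labels)
        ):
            raise ValueError("trimap and labels must have the same shape")
        for row_t, row_l in zip(self.trimap, self.labels):
            for t, c in zip(row_t, row_l):
                if t not in (BG, UNKNOWN, FG):
                    raise ValueError("trimap values must be 0, 128 or 255")
                if t == UNKNOWN:
                    # Unknown pixels need a semantic class in range.
                    if c is None or not 0 <= c < self.num_classes:
                        raise ValueError("unknown pixel needs a class in range")
                elif c is not None:
                    # Known foreground/background pixels carry no class.
                    raise ValueError("known pixels carry no semantic class")
```

For example, `composite(0.25, (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))` gives `(0.25, 0.0, 0.75)`: a pixel that is one quarter red foreground over a blue background. Keeping the class labels separate from the trimap values leaves the conventional trimap unchanged, so the semantic layer is purely additive.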

Availability of data and materials

The dataset proposed in this work is available at https://github.com/nowsyn/SIM.

Code availability

Not applicable.

Funding

This work was supported by Kuaishou Technology and the Research Grants Council of the Hong Kong SAR under grant no. 16201420.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Yanan Sun and supervised by Chi-Keung Tang and Yu-Wing Tai.

Ethics declarations

Conflict of interest

The authors declare that this work involves only non-financial interests.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Communicated by Ming-Hsuan Yang.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sun, Y., Tang, CK. & Tai, YW. Semantic Image Matting: General and Specific Semantics. Int J Comput Vis 132, 710–730 (2024). https://doi.org/10.1007/s11263-023-01907-6

DOI: https://doi.org/10.1007/s11263-023-01907-6