Abstract

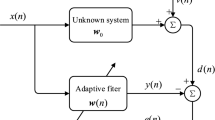

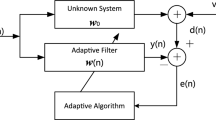

This paper proposes a new sequential block partial update normalized least mean square (SBP–NLMS) algorithm and its nonlinear extension, the SBP–normalized least mean M-estimate (SBP–NLMM) algorithm, for adaptive filtering. Both algorithms use the sequential partial update strategy of the sequential least mean square (S–LMS) algorithm to reduce computational complexity. In particular, the SBP–NLMM algorithm minimizes an M-estimate function, which makes it more robust to impulsive outliers than the SBP–NLMS algorithm. The convergence behaviors of the two algorithms are analyzed for Gaussian inputs with Gaussian and contaminated Gaussian (CG) noise, and new analytical expressions describing the mean and mean square convergence behaviors are derived. Computer simulations verify the robustness of the proposed SBP–NLMM algorithm to impulsive noise and the accuracy of the performance analysis.
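To make the update strategy concrete, the following is a minimal sketch of one possible reading of the two algorithms, not the authors' reference implementation. It assumes that the L coefficients are split into C contiguous blocks visited in round-robin order, that the step is normalized by the energy of the selected input block, and that the M-estimate score function is a modified Huber function with a fixed threshold `xi`. The names `sbp_nlmm`, `psi_modified_huber`, `mu`, `eps`, and `xi` are hypothetical.

```python
import random

def psi_modified_huber(e, xi):
    """Assumed score function: keep e when |e| < xi, suppress it otherwise."""
    return e if abs(e) < xi else 0.0

def sbp_nlmm(x, d, L, C, mu=0.5, eps=1e-6, xi=float("inf")):
    """Sequential block partial update filter (hypothetical helper).

    xi = inf gives psi(e) = e (SBP-NLMS); a finite xi gives an SBP-NLMM-style
    update that ignores errors whose magnitude reaches the threshold.
    """
    if L % C != 0:
        raise ValueError("L must be a multiple of C")
    P = L // C                      # coefficients updated per iteration
    w = [0.0] * L                   # adaptive weights
    X = [0.0] * L                   # tap-delay line, newest sample first
    for n, (xn, dn) in enumerate(zip(x, d)):
        X = [xn] + X[:-1]
        e = dn - sum(wk * xk for wk, xk in zip(w, X))   # a priori error
        start = (n % C) * P         # assumed round-robin block schedule
        block = range(start, start + P)
        energy = eps + sum(X[k] ** 2 for k in block)    # eps + X^T S_x X
        g = mu * psi_modified_huber(e, xi) / energy
        for k in block:             # only the selected block is adapted
            w[k] += g * X[k]
    return w

# Usage: noise-free identification of a random 16-tap system with C = 2.
rng = random.Random(0)
w_true = [rng.gauss(0.0, 1.0) for _ in range(16)]
x = [rng.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(w_true[k] * x[n - k] for k in range(16) if n - k >= 0)
     for n in range(len(x))]
w_hat = sbp_nlmm(x, d, L=16, C=2)
print(max(abs(a - b) for a, b in zip(w_hat, w_true)))
```

Each iteration touches only L/C coefficients in the update step, which is where the complexity saving comes from. Setting a finite `xi` turns the same loop into the robust variant, since samples with a large error leave the weights unchanged.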

References

Nagumo, J. I., & Noda, A. (1967). A learning method for system identification. IEEE Transactions on Automatic Control, AC-12, 282–287. doi:10.1109/TAC.1967.1098599.

Aboulnasr, T., & Mayyas, K. (1999). Complexity reduction of the NLMS algorithm via selective coefficient update. IEEE Transactions on Signal Processing, 47(5), 1421–1424. doi:10.1109/78.757235.

Dogancay, K., & Tanrikulu, O. (2001). Adaptive filtering algorithms with selective partial updates. IEEE Transactions on Circuits and Systems II: Analog and Digital Signal Processing, 48(8), 762–769. doi:10.1109/82.959866.

Werner, S., Campos, M. L. R., & Diniz, P. S. R. (2004). Partial-update NLMS algorithms with data-selective updating. IEEE Transactions on Signal Processing, 52(4), 938–949. doi:10.1109/TSP.2004.823483.

Douglas, S. C. (1997). Adaptive filters employing partial updates. IEEE Transactions on Circuits and Systems II: Analog and Digital Signal Processing, 44(3).

Kuo, S. M., & Chen, J. (1993). Multiple-microphone acoustic echo cancellation system with the partial adaptive process. Digital Signal Processing, 3(1), 54–63. doi:10.1006/dspr.1993.1007.

Godavarti, M., & Hero, A. O., III (2005). Partial update LMS algorithms. IEEE Transactions on Signal Processing, 53(7), 2382–2399. doi:10.1109/TSP.2005.849167.

Goodwin, G. C., & Sin, K. S. (1984). Adaptive filtering, prediction, and control. Englewood Cliffs, NJ: Prentice-Hall.

Zhou, Y., Chan, S. C., & Ho, K. L. New sequential partial update least mean M-estimate algorithms for robust adaptive system identification in impulsive noise. Submitted to IEEE Transactions on Circuits and Systems I.

Huber, P. J. (1981). Robust statistics. NY: Wiley.

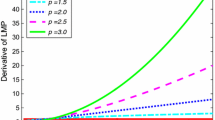

Zou, Y., Chan, S. C., & Ng, T. (2000). Least mean M-estimate algorithms for robust adaptive filtering in impulsive noise. IEEE Transactions on Circuits and Systems II: Analog and Digital Signal Processing, 47(12), 1564–1569. doi:10.1109/82.899657.

Price, R. (1958). A useful theorem for nonlinear devices having Gaussian inputs. IRE Transactions on Information Theory, IT-4, 69–72. doi:10.1109/TIT.1958.1057444.

Papoulis, A. (1991). Probability, random variables, and stochastic processes (3rd ed.). NY: McGraw-Hill.

Price, R. (1964). Comments on ‘A useful theorem for nonlinear devices having Gaussian inputs’. IEEE Transactions on Information Theory, 10(2), 171. doi:10.1109/TIT.1964.1053659.

Koike, S. (2006). Performance analysis of the normalized LMS algorithm for complex-domain adaptive filters in the presence of impulse noise at filter input. IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences, E89-A(9), 2422–2428.

Macleod, M. D. (2005). Robust normalized LMS filtering. In Proc IEEE Int Conf Acoust, Speech Signal Processing, 4, 44–40.

Haweel, T. I., & Clarkson, P. M. (1992). A class of order statistic LMS algorithms. IEEE Transactions on Signal Processing, 40(1), 44–53. doi:10.1109/78.157180.

Settineri, R., Najim, M., & Ottaviani, D. (1996). Order statistic fast Kalman filter. In Proc IEEE Int Symp Circuits Syst, 2, 116–119.

Koike, S. (1997). Adaptive threshold nonlinear algorithm for adaptive filters with robustness against impulsive noise. IEEE Transactions on Signal Processing, 45(9), 2391–2395. doi:10.1109/78.622963.

Weng, J. F., & Leung, S. H. (1997). Adaptive nonlinear RLS algorithm for robust filtering in impulsive noise. In Proc IEEE Int Symp Circuits Syst, 4, 2344–2340.

Chan, S. C., & Zou, Y. (2004). A recursive least M-estimate algorithm for robust adaptive filtering in impulsive noise: fast algorithm and convergence performance analysis. IEEE Transactions on Signal Processing, 52(4), 975–991. doi:10.1109/TSP.2004.823496.

Hampel, F. R., Ronchetti, E. M., Rousseeuw, P. J., & Stahel, W. A. (2005). Robust statistics: The approach based on influence functions. New York: Wiley.

Bershad, N. J. (1988). On error-saturation nonlinearities in LMS adaptation. IEEE Transactions on Acoustics, Speech, and Signal Processing, ASSP-36(4), 440–452. doi:10.1109/29.1548.

Mathews, V. J. (1991). Performance analysis of adaptive filters equipped with the dual sign algorithm. IEEE Transactions on Signal Processing, 39(1), 85–91. doi:10.1109/78.80768.

Zhou, Y. (2006). Improved analysis and design of efficient adaptive transversal filtering algorithms with particular emphasis on noise, input and channel modeling. Hong Kong: Ph.D. Dissertation, The Univ. Hong Kong.

Chan, S. C., & Zhou, Y. (2007). On the convergence analysis of the normalized LMS and the normalized least mean M-estimate algorithms. In Proc IEEE Int Symp Signal Processing and Information Technology, 1059–1065.

Chan, S. C., Zhou, Y., & Ho, K. L. (2007). A new sequential block partial update normalized least mean M-estimate algorithm and its convergence performance analysis. In Proc IEEE Int Symp Signal Processing and Information Technology, 327–332.

Widrow, B., McCool, J., Larimore, M. G., & Johnson Jr., C. R. (1976). Stationary and nonstationary learning characteristics of the LMS adaptive filter. Proc. IEEE, 64, 1151–1162. doi:10.1109/PROC.1976.10286.

Bershad, N. J. (1986). Analysis of the normalized LMS algorithm with Gaussian inputs. IEEE Transactions on Acoustics, Speech, and Signal Processing, ASSP-34, 793–806. doi:10.1109/TASSP.1986.1164914.

Recktenwald, G. (2000). Numerical methods with MATLAB: Implementations and applications. Englewood Cliffs, NJ: Prentice-Hall.

Appendices

Appendix A: Evaluation of H

In this appendix, we evaluate the expectation \(\mathbf{H} = E_{\{\mathbf{X},\eta_g\}}\left[ \psi(e)\,\mathbf{S}_{\mathrm{x}}\mathbf{X} / (\varepsilon + \mathbf{X}^T\mathbf{S}_{\mathrm{x}}\mathbf{X}) \,|\, \mathbf{v} \right]\). The noise \(\eta_g(n)\) and the input \(x(n)\) are assumed to be statistically independent, and the elements of \(\mathbf{X}\) are jointly Gaussian with autocorrelation matrix \(\mathbf{R}_{\mathrm{xx}}\). Assuming there are C different combinations for \(\mathbf{S}_{\mathrm{x}}\), each of which is denoted as \(\mathbf{S}_{\mathbf{X}}^{(i)}\), i = 1, 2, …, C, with equal probability \(p_i = 1/C\), one gets

where \(C_R = (2\pi)^{-L/2}\,|\mathbf{R}_{\mathrm{xx}}|^{-1/2}\), \(f_{\eta_g}(\eta_g)\) is the PDF of the Gaussian noise \(\eta_g\), and |·| denotes the determinant of a matrix. Let us focus on the integral inside the summation as follows:

Similar to [29], let us consider the integral

It can be seen that

Differentiating (A-3) with respect to β, one gets

where

\(P = L/C\), \(\mathbf{I}_k\) is the k×k identity matrix, and \(\mathbf{P}_i\) is a permutation matrix with

Since \(\mathbf{R}_{Xi\_00}\) is symmetric, its eigenvalue decomposition has the following form

where \(\Lambda_{Xi} = \mathrm{diag}(\lambda_{Xi,1}, \lambda_{Xi,2}, \cdots, \lambda_{Xi,P})\) contains the eigenvalues of \(\mathbf{R}_{Xi\_00}\). Therefore, we have \(|\mathbf{B}_i| = |\mathbf{R}_{\mathrm{xx}}|\,|\mathbf{I}_P + 2\beta\mathbf{R}_{Xi\_00}|^{-1} = |\mathbf{R}_{\mathrm{xx}}| \prod_{k=1}^{P} (2\beta\lambda_{Xi,k} + 1)^{-1}\). Using the matrix inverse identity, we can rewrite (A-6) as

where

Furthermore, one can rewrite (A-5) as follows

where \(C_{B_i} = (2\pi)^{-L/2}\,|\mathbf{B}_i|^{-1/2}\), \(\gamma_i(\beta) = \exp(-\beta\varepsilon)\,|\mathbf{B}_i|^{1/2}\,|\mathbf{R}_{\mathrm{xx}}|^{-1/2}\), and \(\mathbf{L}_1 = E_{\{\mathbf{X},\eta_g\}}\left[ \psi(e)\mathbf{X} \,|\, \mathbf{v} \right]\big|_{E[\mathbf{X}\mathbf{X}^T] = \mathbf{B}_i}\) is the expectation of \(\psi(e)\mathbf{X}\) conditioned on \(\mathbf{v}\) when \(x_i, x_j \in \mathbf{X}\) are jointly Gaussian with correlation matrix \(\mathbf{B}_i\).

We shall evaluate \(\mathbf{L}_1\) by considering its k-th element as follows:

Using Price’s theorem [12, 13], we have

where \(\overline{\psi'}(\sigma_e^2)\) is the average of \(\psi'(e)\), and \(e = \mathbf{X}^T\mathbf{v} + \eta_g\) is Gaussian distributed with zero mean and variance \(\sigma_e^2(\mathbf{v}) = E\left[ \mathbf{v}^T\mathbf{X}\mathbf{X}^T\mathbf{v} \,|\, \mathbf{v} \right]\big|_{E[\mathbf{X}\mathbf{X}^T] = \mathbf{B}_i} + \sigma_g^2 = \mathbf{v}^T\mathbf{B}_i\mathbf{v} + \sigma_g^2\). To simplify notation, we shall write \(\overline{\psi'}(\sigma_e^2(\mathbf{v}))\) simply as \(\overline{\psi'}(\sigma_e^2)\). Integrating (A-11) with respect to \(r_{x_k e}\), one gets

where the constant of integration is zero because \(E[\psi(e)x_k]\) is equal to zero when \(x_k\) and \(e\) are uncorrelated, that is, when \(r_{x_k e} = 0\). Since

where \(\mathbf{B}_{i,k}\) is the k-th row of \(\mathbf{B}_i\), we have

Substituting (A-14) in (A-9) and integrating with respect to β yields

where the constant of integration is equal to zero because of the boundary condition \(\mathbf{F}_i(\infty) = 0\). Here, we have assumed that \(\sigma_e^2(\mathbf{v})\) depends weakly on β, so that \(\overline{\psi'}(\sigma_e^2)\) can be taken outside of the integral using the mean value theorem. This is a good approximation if the variation of \(\overline{\psi'}(\sigma_e^2)\) is limited, such as in the RLM algorithm [21] with ATS or at the steady state of the algorithm.
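The Price's theorem step above amounts to the relation \(E[\psi(e)x_k \,|\, \mathbf{v}] = \overline{\psi'}(\sigma_e^2)\, r_{x_k e}\) with \(r_{x_k e} = \mathbf{B}_{i,k}\mathbf{v}\), which can be spot-checked by simulation. The sketch below is only an illustration under stated assumptions: a two-tap input with correlation `rho`, an arbitrary weight-error vector `v`, and \(\psi = \tanh\) as a smooth stand-in for the M-estimate score function. None of these values come from the paper, and the function name is hypothetical.

```python
import math
import random

def check_price_first_order(rho=0.6, v=(0.8, 0.5), sigma_g=0.3,
                            n_samples=200_000, seed=1):
    """Compare E[psi(e) x_k] with mean(psi'(e)) * E[x_k e] by simulation."""
    rng = random.Random(seed)
    psi = math.tanh                                   # assumed smooth psi
    dpsi = lambda e: 1.0 - math.tanh(e) ** 2          # its derivative
    lhs = [0.0, 0.0]
    dpsi_sum = 0.0
    for _ in range(n_samples):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        # Unit-variance Gaussian pair with correlation rho.
        x = (z1, rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        e = v[0] * x[0] + v[1] * x[1] + rng.gauss(0, sigma_g)
        p = psi(e)
        lhs[0] += p * x[0]
        lhs[1] += p * x[1]
        dpsi_sum += dpsi(e)
    lhs = [s / n_samples for s in lhs]
    mean_dpsi = dpsi_sum / n_samples                  # estimate of avg psi'
    B = ((1.0, rho), (rho, 1.0))                      # E[X X^T]
    r_xe = [B[k][0] * v[0] + B[k][1] * v[1] for k in range(2)]  # B_k v
    rhs = [mean_dpsi * r for r in r_xe]
    return lhs, rhs

lhs, rhs = check_price_first_order()
print(lhs, rhs)
```

The two returned vectors should agree up to Monte Carlo error, which shrinks as `n_samples` grows.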

We now evaluate

From (A-6), \(\mathbf{S}_{\mathbf{X}}^{(i)}\mathbf{B}_i\) can be denoted as

Substituting (A-17) into (A-16), we get

where \(I(\Lambda_{Xi}) = \int_0^\infty \gamma_i(\beta)\,(\mathbf{I}_P + 2\beta\Lambda_{Xi})^{-1}\, d\beta\). Since the integrand is a diagonal matrix, it suffices to evaluate the integral for its diagonal entries as follows:

k = 1,…,P, which is a special integral function defined to facilitate analysis. Finally, from (A-4) and (A-15)–(A-19), we have

and

where we write \(\alpha_i(0)\) as \(\alpha_i\) for simplicity.

Appendix B: Evaluation of s3

\(\mathbf{s}_3 = E_{\{\mathbf{X},\eta_g\}}\left[ \psi^2(e)\,\mathbf{S}_{\mathrm{x}}\mathbf{X}\mathbf{X}^T\mathbf{S}_{\mathrm{x}} / (\varepsilon + \mathbf{X}^T\mathbf{S}_{\mathrm{x}}\mathbf{X})^2 \,|\, \mathbf{v} \right]\) is evaluated in this appendix. As in Appendix A, we can write:

Let us define

Comparing (B-2) with (B-1), it can be seen that

To evaluate \(\overline {\mathbf{F}} _i \left( \beta \right)\), differentiating (B-2) twice with respect to β, one gets

where \(\gamma_i(\beta)\), \(C_{B_i}\), and the correlation matrix \(\mathbf{B}_i\) have been defined in Appendix A. \(\mathbf{L}_{2i} = E_{\{\mathbf{X},\eta_g\}}\left[ \psi^2(e)\mathbf{X}\mathbf{X}^T \,|\, \mathbf{v} \right]\big|_{\mathbf{B}_i}\) is the expectation of \(\psi^2(e)\mathbf{X}\mathbf{X}^T\) taken over \(\{\mathbf{X},\eta_g\}\) and conditioned on \(\mathbf{v}\), where \(x_m, x_n \in \mathbf{X}\), m, n = 1, 2, …, L, are jointly Gaussian variables with correlation matrix \(\mathbf{B}_i\). Let us evaluate the (m, n)-th element of \(\mathbf{L}_{2i}\) as follows:

Using Price’s theorem, we have

For notational simplicity, we shall write \(\overline {\psi ^2 } \left( {\sigma _e^2 } \right)\) as \(B_\psi \left( {\sigma _e^2 } \right)\). Integrating with respect to \(r_{x_m x_n } \) gives

where \(c_{m,n} = E\left[ \psi^2(e)\,x_m x_n \right]\big|_{r_{x_m x_n} = 0}\) is the integration constant. Using Price’s theorem again, we have

(From Price’s theorem, \(\frac{1}{2}E\left[ \frac{d^2\psi^2(e)}{de^2} \right] = \frac{d}{d\sigma_e^2}E\left[ \psi^2(e) \right]\), and we write it as \(C_\psi(\sigma_e^2)\) hereafter.)
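The identity in the parenthetical remark can be checked numerically for a zero-mean Gaussian error. The sketch below assumes \(\psi = \tanh\) purely as a smooth example (it is not the nonlinearity used in the paper) and compares half the mean of the second derivative of \(\psi^2\) with a central finite difference of \(E[\psi^2(e)]\) in the variance. The helper names and the test variance are hypothetical.

```python
import math

def gauss_mean(f, var, half_width=12.0, n=4000):
    """E[f(e)] for e ~ N(0, var), by the trapezoidal rule on +/- 12 sigma."""
    s = math.sqrt(var)
    h = 2.0 * half_width / n
    total = 0.0
    for j in range(n + 1):
        z = -half_width + j * h
        wgt = 0.5 if j in (0, n) else 1.0        # trapezoid end-point weights
        total += wgt * f(s * z) * math.exp(-0.5 * z * z)
    return total * h / math.sqrt(2.0 * math.pi)

def psi_sq(e):
    """psi^2(e) for the assumed psi = tanh."""
    return math.tanh(e) ** 2

def psi_sq_dd(e):
    """Second derivative of tanh(e)^2 with respect to e."""
    t = math.tanh(e)
    s2 = 1.0 - t * t
    return 2.0 * s2 * s2 - 4.0 * t * t * s2

var, dv = 0.8, 1e-4
lhs = 0.5 * gauss_mean(psi_sq_dd, var)                      # (1/2) E[(psi^2)'']
rhs = (gauss_mean(psi_sq, var + dv)
       - gauss_mean(psi_sq, var - dv)) / (2 * dv)           # d/d(var) E[psi^2]
print(lhs, rhs)
```

For the linear case \(\psi(e) = e\), the same identity gives \(\frac{1}{2}E[2] = 1\), the derivative of \(E[e^2] = \sigma_e^2\) with respect to \(\sigma_e^2\).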

Integrating again, one gets

Combining (B-7) and (B-9), we have

Substituting (B-11) into (B-4) gives

Integrating (B-12) twice with respect to β and multiplying by \(\mathbf{S}_{\mathbf{X}}^{(i)}\) on both sides yields

For SBP–NLMS algorithms with general nonlinearity, the integrals are rather difficult to evaluate because of the presence of \(\sigma _e^2 \). To simplify the analysis, we shall assume that \(\sigma _e^2 \) depends weakly on β and is taken outside the integral. Like \(A_\psi \left( {\sigma _e^2 } \right)\), this is a good approximation if the variations of \(B_\psi \left( {\sigma _e^2 } \right)\) and \(C_\psi \left( {\sigma _e^2 } \right)\) are limited. Therefore, we have

with the boundary conditions \(\overline{\mathbf{F}}_i(\infty) = 0\) and \(\partial\overline{\mathbf{F}}_i(\beta)/\partial\beta\,\big|_{\beta = \infty} = 0\), and with \(\mathbf{I}_{1i} = \int_0^\infty \int_{\beta_1}^\infty \gamma_i(\beta_2)\,\mathbf{S}_{\mathbf{X}}^{(i)}\mathbf{B}_i\mathbf{S}_{\mathbf{X}}^{(i)}\, d\beta_2\, d\beta_1\) and \(\mathbf{I}_{2i} = \int_0^\infty \int_{\beta_1}^\infty 2\gamma_i(\beta_2)\,\mathbf{S}_{\mathbf{X}}^{(i)}\mathbf{B}_i\mathbf{v}\mathbf{v}^T\mathbf{B}_i\mathbf{S}_{\mathbf{X}}^{(i)}\, d\beta_2\, d\beta_1\).
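One step that the text leaves implicit may be worth spelling out. As a sketch, assuming each entry \(g(\beta)\) of the integrand is absolutely integrable against β, exchanging the order of integration reduces either double integral to a single integral weighted by β:

\[ \int_0^\infty \int_{\beta_1}^\infty g(\beta_2)\, d\beta_2\, d\beta_1 = \int_0^\infty g(\beta_2) \left( \int_0^{\beta_2} d\beta_1 \right) d\beta_2 = \int_0^\infty \beta\, g(\beta)\, d\beta . \]

This accounts for the extra factor β that appears in the integrands of the special integral functions used in the rest of this appendix.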

For the SBP–NLMS algorithm, \(\psi \left( e \right) = e\), \(C_\psi \left( {\sigma _e^2 } \right) = 1\), and \(B_\psi \left( {\sigma _e^2 } \right) = \sigma _e^2 = {\mathbf{v}}^T {\mathbf{B}}_i {\mathbf{v}} + \sigma _g^2 \). Thus,

2.1 Derivation of \(\mathbf{I}_{1i}\)

where \(\left[ \mathbf{I}'(\Lambda_{Xi}) \right]_{k,k} = \int_0^\infty \frac{\beta \exp(-\beta\varepsilon)}{2\beta\lambda_{Xi,k} + 1} \prod_{j=1}^{P} (2\beta\lambda_{Xi,j} + 1)^{-1/2}\, d\beta,\)

which is another defined special integral function.
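These special integral functions have no elementary closed form in general, but they are straightforward to evaluate numerically. The following sketch is one possible way to do so, not the evaluation method used in the paper: it maps \(\beta \in [0, \infty)\) to \(t \in [0, 1)\) through \(\beta = t/(1-t)\) and applies the midpoint rule. The function name, its arguments, and the grid size are hypothetical. Setting `beta_power=0` gives the diagonal entries of \(I(\Lambda_{Xi})\) from Appendix A (using \(\gamma_i(\beta) = \exp(-\beta\varepsilon)\prod_j (2\beta\lambda_{Xi,j}+1)^{-1/2}\)), and `beta_power=1` gives those of \(\mathbf{I}'(\Lambda_{Xi})\).

```python
import math

def special_integral(lams, k, eps, beta_power=0, n=20000):
    """Midpoint-rule estimate of
        int_0^inf beta^p * exp(-beta*eps) / (2*beta*lam_k + 1)
                  * prod_j (2*beta*lam_j + 1)^(-1/2) dbeta,
    with p = beta_power, using the substitution beta = t / (1 - t).
    """
    h = 1.0 / n
    total = 0.0
    for j in range(n):
        t = (j + 0.5) * h                      # midpoint of the j-th cell
        beta = t / (1.0 - t)
        g = math.exp(-beta * eps) / (2.0 * beta * lams[k] + 1.0)
        for lam in lams:
            g /= math.sqrt(2.0 * beta * lam + 1.0)
        # Jacobian of the substitution: dbeta = dt / (1 - t)^2
        total += beta ** beta_power * g / (1.0 - t) ** 2
    return total * h

# Usage: four equal eigenvalues and eps = 0, where closed forms exist.
lams = [2.0, 2.0, 2.0, 2.0]
I0 = special_integral(lams, k=0, eps=0.0)
I1 = special_integral(lams, k=0, eps=0.0, beta_power=1)
print(I0, I1)
```

In the special case ε = 0, P = 4, and equal eigenvalues λ, the two integrals reduce to 1/(4λ) and 1/(8λ²), which gives a quick sanity check of the quadrature. With ε = 0 the second integral converges only for P > 2, so a positive ε is needed for smaller blocks.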

2.2 Derivation of \(\mathbf{I}_{2i}\)

Since

Similarly,

Thus

where \(\mathbf{R}_{i,v} = \left[ \begin{array}{cc} \Lambda_{Xi}\mathbf{U}_{Xi}^T & \mathbf{U}_{Xi}^T\mathbf{R}_{Xi\_01} \\ 0 & 0 \end{array} \right] \mathbf{P}_i\mathbf{v}\mathbf{v}^T\mathbf{P}_i^T \left[ \begin{array}{cc} \mathbf{U}_{Xi}\Lambda_{Xi} & 0 \\ \mathbf{R}_{Xi\_01}^T\mathbf{U}_{Xi} & 0 \end{array} \right]\), and \(\mathbf{D}_{Xi} = (\mathbf{I} + 2\beta\Lambda_{Xi})^{-1}\). Thus, the (m, n)-th element of \(\mathbf{D}_i' = \int_0^\infty \int_{\beta_1}^\infty \gamma_i(\beta_2) \left[ \begin{array}{cc} \mathbf{D}_{Xi} & 0 \\ 0 & 0 \end{array} \right] \mathbf{R}_{i,v} \left[ \begin{array}{cc} \mathbf{D}_{Xi} & 0 \\ 0 & 0 \end{array} \right] d\beta_2\, d\beta_1\) is given by

We now evaluate \(I_{mn}^{\prime \prime } \left( {\Lambda _{Xi} } \right)\):

is the third defined special integral function. Summing up the above results yields

where \(\mathbf{I}''(\Lambda_{Xi})\) is a P×P matrix whose (m, n)-th element is \(I''_{mn}(\Lambda_{Xi})\), and \(\circ\) denotes the element-wise (Hadamard) product of two matrices.

Substituting (B-14), (B-16) and (B-21) into (B-3) yields

Chan, S.C., Zhou, Y. & Ho, K.L. A New Sequential Block Partial Update Normalized Least Mean M-Estimate Algorithm and its Convergence Performance Analysis. J Sign Process Syst Sign Image Video Technol 58, 173–191 (2010). https://doi.org/10.1007/s11265-009-0346-3
