Abstract

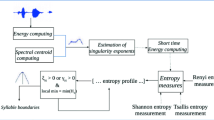

This paper presents an algorithm for syllable segmentation of speech signals based on computing the singularity exponent at each point of the signal, combined with a Rényi entropy calculation. Rényi entropy, a generalization of Shannon entropy, quantifies the degree of signal organization. We hypothesize that this degree of organization differs depending on whether a segment (obtained with singularity exponents) contains a phoneme or a syllable unit. The proposed algorithm has three steps. First, silence is removed from the speech signal to obtain segments containing only speech. Second, relevant information is extracted from these segments by examining the local distribution of the calculated singularity exponents. Finally, Rényi entropy is used to exploit the degree of voicing in each candidate syllable segment, which improves syllable boundary detection. In evaluation, the algorithm performed well on two languages: Fongbe (an African tonal language spoken mainly in Benin, Togo, and Nigeria) and American English. The overall syllable boundary accuracy was obtained on the Fongbe dataset and subsequently validated on the TIMIT dataset, with a margin of error of less than 5 ms.
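As a rough illustration of the entropy measure mentioned above, the sketch below computes the Rényi entropy of order α of a discrete distribution, H_α(p) = log2(Σ p_i^α) / (1 − α), which tends to the Shannon entropy as α approaches 1. The abstract does not state which order α is used or how the distribution is built from a candidate segment, so the function name `renyi_entropy`, the default `alpha=2.0`, and the example histograms are assumptions chosen only for illustration, not the authors' implementation.

```python
import math


def renyi_entropy(weights, alpha=2.0):
    """Rényi entropy (in bits) of a discrete distribution.

    `weights` are non-negative values (e.g. histogram counts); they are
    normalized to sum to 1. `alpha` is the entropy order; the default of 2
    is an illustrative assumption, not a value taken from the paper.
    """
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")

    # Normalize and drop empty bins (they contribute nothing to the sum).
    probs = [w / total for w in weights if w > 0]

    # The order-1 case is defined as the limit, which is Shannon entropy.
    if math.isclose(alpha, 1.0):
        return -sum(p * math.log2(p) for p in probs)

    return math.log2(sum(p ** alpha for p in probs)) / (1.0 - alpha)


# Hypothetical histograms: a flat (disorganized) one and a peaked (organized) one.
flat = [1, 1, 1, 1]
peaked = [7, 1, 1, 1]
flat_entropy = renyi_entropy(flat)
peaked_entropy = renyi_entropy(peaked)
```

A more concentrated distribution yields a lower entropy than a flat one, which is the sense in which the measure reflects the degree of organization of a segment.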




Cite this article

Laleye, F.A.A., Ezin, E.C. & Motamed, C. Automatic Text-Independent Syllable Segmentation Using Singularity Exponents And Rényi Entropy. J Sign Process Syst 88, 439–451 (2017). https://doi.org/10.1007/s11265-016-1183-9


DOI: https://doi.org/10.1007/s11265-016-1183-9