Abstract

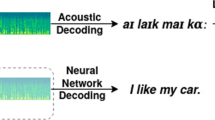

Speech recognition and keyword detection are becoming increasingly popular applications for mobile systems. These applications have large memory and compute requirements, which make their implementation on a mobile device challenging. In this paper, we design low-cost neural network architectures for keyword detection and speech recognition. We present techniques that reduce the memory requirement by scaling down the precision of the weights and biases without compromising detection/recognition performance. Experiments conducted on the Resource Management (RM) database show that, for the keyword detection neural network, representing the weights with 5 bits results in a 6-fold reduction in memory compared to a floating-point implementation, with very little loss in performance. Similarly, for the speech recognition neural network, representing the weights with 6 bits results in a 5-fold reduction in memory while maintaining an error rate similar to that of a floating-point implementation. Preliminary results in TSMC 40 nm technology show that the networks have fairly small power consumption: 11.12 mW for the keyword detection network and 51.96 mW for the speech recognition network, making these designs suitable for mobile devices.
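
To make the precision-scaling idea concrete, the sketch below rounds a list of weights onto a signed fixed-point grid and computes the weight-memory reduction relative to a floating-point baseline. It is a generic illustration, not the authors' implementation: the function names, the round-to-nearest-with-saturation scheme, the split between integer and fractional bits, and the assumption of a 32-bit single-precision baseline (under which 32/5 = 6.4 and 32/6 ≈ 5.3, roughly in line with the 6-fold and 5-fold figures above) are all assumptions.

```python
from typing import List


def quantize_fixed_point(weights: List[float], n_bits: int, frac_bits: int) -> List[float]:
    """Map each weight to the nearest value representable as a signed
    n_bits two's-complement number with frac_bits fractional bits.

    Hypothetical helper: out-of-range values saturate to the most
    negative/positive code instead of wrapping around.
    """
    scale = 2 ** frac_bits                   # one LSB is worth 1 / scale
    q_min = -(2 ** (n_bits - 1))             # most negative integer code
    q_max = 2 ** (n_bits - 1) - 1            # most positive integer code
    quantized = []
    for w in weights:
        code = round(w * scale)              # round to nearest (Python rounds ties to even)
        code = max(q_min, min(q_max, code))  # saturate instead of overflowing
        quantized.append(code / scale)       # convert back to a real value for inspection
    return quantized


def memory_reduction(n_bits: int, float_bits: int = 32) -> float:
    """Ratio of a float_bits baseline to an n_bits fixed-point encoding
    (assumes 32-bit floats and counts weight storage only)."""
    return float_bits / n_bits


if __name__ == "__main__":
    w = [0.7, -0.33, 0.02, -1.2, 0.5]
    # 5 bits = 1 sign bit + 4 fractional bits, covering [-1.0, 0.9375]
    print(quantize_fixed_point(w, n_bits=5, frac_bits=4))  # [0.6875, -0.3125, 0.0, -1.0, 0.5]
    print(memory_reduction(5))                             # 6.4
    print(round(memory_reduction(6), 2))                   # 5.33
```

With 5 bits and 4 fractional bits, -1.2 lies outside the representable range and saturates to -1.0; deciding how many bits go to the integer part versus the fraction is the main design choice in a scheme like this.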

Notes

1 The extensions to the SiPS’15 paper include (i) development of a fixed-point neural network architecture for speech recognition that has significantly lower memory requirements than a floating-point implementation, (ii) evaluation of the speech recognition network on the RM database, (iii) hardware implementation of the speech recognition network, and (iv) a new technique that reduces the precision of the weights through sensitivity analysis.
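
The note above mentions reducing weight precision through sensitivity analysis, but this excerpt does not describe the procedure. As a loosely related illustration only, the sketch below runs a generic greedy per-layer sweep: it quantizes one layer at a time, lowers that layer's bit width while the error increase over the unquantized baseline stays within a tolerance, and records the smallest acceptable width. The helper names, the `evaluate` callback, the assumption that weights lie in [-1, 1) (so `frac_bits = bits - 1`), and the toy squared-error metric are all hypothetical; in practice `evaluate` would measure something like detection accuracy or word error rate on held-out data.

```python
from typing import Callable, Dict, List, Optional

Layers = Dict[str, List[float]]


def quantize(weights: List[float], n_bits: int, frac_bits: int) -> List[float]:
    """Round to a signed n_bits fixed-point grid, saturating out-of-range values."""
    scale = 2 ** frac_bits
    q_min, q_max = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    return [max(q_min, min(q_max, round(w * scale))) / scale for w in weights]


def per_layer_bit_widths(
    layers: Layers,
    evaluate: Callable[[Layers], float],
    candidate_bits: List[int],
    tolerance: float,
) -> Dict[str, Optional[int]]:
    """Hypothetical greedy sensitivity sweep.

    For each layer (all others kept at full precision), try bit widths from
    largest to smallest and keep the smallest one whose error increase over
    the baseline is <= tolerance. The sweep stops at the first failure, i.e.
    it assumes error grows as precision drops. None means no candidate passed.
    """
    baseline = evaluate(layers)
    chosen: Dict[str, Optional[int]] = {}
    for name in layers:
        chosen[name] = None
        for bits in sorted(candidate_bits, reverse=True):
            trial = dict(layers)  # shallow copy; the original lists are not modified
            trial[name] = quantize(layers[name], bits, frac_bits=bits - 1)  # assumes |w| < 1
            if evaluate(trial) - baseline <= tolerance:
                chosen[name] = bits
            else:
                break
    return chosen


if __name__ == "__main__":
    reference = {"hidden": [0.7, -0.33, 0.02], "output": [0.5, -0.25]}

    # Toy error proxy: squared distance of every weight from its original value.
    def toy_error(trial: Layers) -> float:
        return sum((a - b) ** 2
                   for name in reference
                   for a, b in zip(trial[name], reference[name]))

    print(per_layer_bit_widths(reference, toy_error, [8, 6, 5, 4, 3], tolerance=0.006))
    # {'hidden': 4, 'output': 3}
```

Because each layer is probed with the others left at full precision, the per-layer widths found this way ignore interactions between layers; a final joint evaluation with all layers quantized would still be needed.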

References

Su, D., Wu, X., & Xu, L. (2010). GMM-HMM acoustic model training by a two level procedure with Gaussian components determined by automatic model selection. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 4890–4893).

Sha, F., & Saul, L.K. (2006). Large margin Gaussian mixture modeling for phonetic classification and recognition. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 265–268).

Miller, D.R., Kleber, M., Kao, C.-L., Kimball, O., Colthurst, T., Lowe, S.A., Schwartz, R.M., & Gish, H. (2007). Rapid and accurate spoken term detection. In 8th annual conference of the international speech communication association (pp. 314–317).

Parlak, S., & Saraclar, M. (2008). Spoken term detection for Turkish broadcast news. In Proceedings of IEEE international conference on acoustics, speech and signal processing (pp. 5244–5247).

Mamou, J., Ramabhadran, B., & Siohan, O. (2007). Vocabulary independent spoken term detection. In Proceedings of the 30th annual international ACM SIGIR conference on research and development in information retrieval (pp. 615–622).

Rohlicek, J.R., Russell, W., Roukos, S., & Gish, H. (1989). Continuous hidden Markov modeling for speaker-independent word spotting. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 627–630).

Rose, R.C., & Paul, D.B. (1990). A hidden Markov model based keyword recognition system. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 129–132).

Wilpon, J., Miller, L., & Modi, P. (1991). Improvements and applications for key word recognition using hidden Markov modeling techniques. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 309–312).

Silaghi, M.-C., & Bourlard, H. (1999). Iterative posterior-based keyword spotting without filler models. In Proceedings of the IEEE automatic speech recognition and understanding workshop (pp. 213–216).

Silaghi, M.-C. (2005). Spotting subsequences matching an HMM using the average observation probability criteria with application to keyword spotting. In Proceedings of the national conference on artificial intelligence (Vol. 20, pp. 1118–1123).

Dahl, G.E., Yu, D., Deng, L., & Acero, A. (2011). Large vocabulary continuous speech recognition with context-dependent DBN-HMMs. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 4688–4691).

Chen, G., Parada, C., & Heigold, G. (2014). Small-footprint keyword spotting using deep neural networks. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 4087–4091).

He, G., Sugahara, T., Miyamoto, Y., Fujinaga, T., Noguchi, H., Izumi, S., Kawaguchi, H., & Yoshimoto, M. (2012). A 40 nm 144 mW VLSI processor for real-time 60-kword continuous speech recognition. IEEE Transactions on Circuits and Systems I: Regular Papers, 59(8), 1656–1666.

Price, M., Glass, J., & Chandrakasan, A. (2015). A 6 mW, 5,000-word real-time speech recognizer using WFST models. IEEE Journal of Solid-State Circuits, 50(1), 102–112.

Shah, M., Wang, J., Blaauw, D., Sylvester, D., Kim, H.-S., & Chakrabarti, C. (2015). A fixed-point neural network for keyword detection on resource constrained hardware. In IEEE workshop on signal processing systems (SiPS) (pp. 1–6).

Price, P., Fisher, W.M., Bernstein, J., & Pallett, D.S. (1988). The DARPA 1000-word resource management database for continuous speech recognition. In Proceedings of IEEE international conference on acoustics speech and signal processing (pp. 651–654).

Povey, D., Ghoshal, A., Boulianne, G., Burget, L., Glembek, O., Goel, N., Hannemann, M., Motlicek, P., Qian, Y., Schwarz, P., et al. (2011). The Kaldi speech recognition toolkit. In IEEE 2011 workshop on automatic speech recognition and understanding. no. EPFL-CONF-192584.

Rath, S.P., Povey, D., Veselý, K., & Černocký, J. (2013). Improved feature processing for deep neural networks. In INTERSPEECH (pp. 109–113).

Hinton, G., Deng, L., Yu, D., Dahl, G.E., Mohamed, A.-r., Jaitly, N., Senior, A., Vanhoucke, V., Nguyen, P., Sainath, T.N., et al. (2012). Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Processing Magazine, 29(6), 82–97.

Miao, Y. (2014). Kaldi+PDNN: building DNN-based ASR systems with Kaldi and PDNN. arXiv:1401.6984.

Venkataramani, S., Ranjan, A., Roy, K., & Raghunathan, A. (2014). AxNN: energy-efficient neuromorphic systems using approximate computing. In Proceedings of the international symposium on low power electronics and design (pp. 27–32).

Bradley, A.P. (1997). The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognition, 30(7), 1145–1159.

Morris, A.C., Maier, V., & Green, P.D. (2004). From WER and RIL to MER and WIL: improved evaluation measures for connected speech recognition. In INTERSPEECH (pp. 2765–2768).

Park, S., Bong, K., Shin, D., Lee, J., Choi, S., & Yoo, H.-J. (2015). A 1.93 TOPS/W scalable deep learning/inference processor with tetra-parallel MIMD architecture for big-data applications. In IEEE international solid-state circuits conference (ISSCC) digest of technical papers (pp. 1–3).

Chen, T., Du, Z., Sun, N., Wang, J., Wu, C., Chen, Y., & Temam, O. (2014). DianNao: A small-footprint high-throughput accelerator for ubiquitous machine-learning. ACM SIGPLAN Notices, 49(4), 269–284.

Moons, B., & Verhelst, M. (2016). A 0.3–2.6 TOPS/W precision-scalable processor for real-time large-scale ConvNets. arXiv:1606.05094.

Chen, Y.-H., Krishna, T., Emer, J., & Sze, V. (2016). Eyeriss: an energy-efficient reconfigurable accelerator for deep convolutional neural networks. In IEEE international solid state circuits conference (ISSCC) (pp. 262–264).

About this article

Cite this article

Shah, M., Arunachalam, S., Wang, J. et al. A Fixed-Point Neural Network Architecture for Speech Applications on Resource Constrained Hardware. J Sign Process Syst 90, 727–741 (2018). https://doi.org/10.1007/s11265-016-1202-x

DOI: https://doi.org/10.1007/s11265-016-1202-x