Abstract

In this paper, we propose a novel single-channel speech enhancement approach that combines the Stationary Wavelet Transform (SWT) and Nonnegative Matrix Factorization (NMF) with a Concatenated Framing Process (CFP), and we propose a Subband Smooth Ratio Mask (ssRM). Because of the downsampling that follows filtering, the Discrete Wavelet Packet Transform (DWPT) is not shift-invariant, which introduces errors in signal reconstruction. To mitigate this problem, we first use the SWT together with NMF under the Kullback-Leibler (KL) cost function. Second, instead of applying NMF directly, we use the CFP to build each column of the matrix, which yields a smoother decomposition. Third, we apply Auto-Regressive Moving Average (ARMA) filtering to the newly formed matrices to make the speech representation more stable and standardized. Finally, we propose the ssRM, which combines the Standard Ratio Mask (sRM) and the Square Root Ratio Mask (srRM) with Normalized Cross-Correlation Coefficients (NCCC) to exploit the advantages of all three. In short, the SWT decomposes the time-domain noisy speech signal into a set of subband signals, and framing each subband signal and taking absolute values yields nonnegative matrices. We then form new matrices by applying the CFP, in which each column contains five consecutive frames of the nonnegative matrix, and apply ARMA filtering to them. After that, we apply NMF to each newly formed matrix and detect the speech components with the proposed ssRM. Finally, the enhanced signal is obtained by applying the inverse SWT. We evaluate the approach on the IEEE corpus under different types of noise. The approach significantly improves objective speech quality and intelligibility and outperforms related methods such as conventional STFT-NMF and DWPT-NMF.
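The shift-invariance argument above can be illustrated with a minimal NumPy sketch. It assumes a Haar wavelet, circular boundary handling, and hypothetical helper names (`haar_swt`, `haar_iswt`, `haar_dwt_detail`); the abstract does not state which wavelet, decomposition depth, or boundary rule the method uses. Because the SWT skips downsampling and instead spreads the filter taps 2^j samples apart at level j, every subband keeps the full signal length, a one-sample shift of the input simply shifts every subband, and the inverse transform reconstructs the input exactly.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_swt(x, levels):
    """Undecimated (stationary) Haar wavelet transform, circular extension (assumed).

    Returns (approx, details); details[j] is the level-(j + 1) subband.
    No downsampling: every subband has the same length as x.
    """
    a = np.asarray(x, dtype=float)
    details = []
    for j in range(levels):
        s = 2 ** j                          # a trous: filter taps spread 2**j apart
        nxt = np.roll(a, -s)                # nxt[n] = a[n + s] (circular)
        details.append((a - nxt) / SQRT2)   # high-pass subband
        a = (a + nxt) / SQRT2               # low-pass approximation for the next level
    return a, details


def haar_iswt(approx, details):
    """Inverse SWT: average the two redundant reconstructions at each level."""
    a = np.asarray(approx, dtype=float)
    for j in reversed(range(len(details))):
        s = 2 ** j
        d = details[j]
        from_here = (a + d) / SQRT2               # recovers a_prev[n]
        from_prev = np.roll((a - d) / SQRT2, s)   # recovers a_prev[n] from index n - s
        a = 0.5 * (from_here + from_prev)
    return a


def haar_dwt_detail(x):
    """One level of the decimated Haar DWT (detail band only), for comparison."""
    x = np.asarray(x, dtype=float)
    return (x[0::2] - x[1::2]) / SQRT2


rng = np.random.default_rng(0)
x = rng.standard_normal(64)
approx, details = haar_swt(x, levels=3)
print(np.allclose(haar_iswt(approx, details), x))    # perfect reconstruction

# Shifting the input by one sample just shifts every SWT subband.
_, shifted = haar_swt(np.roll(x, 1), levels=3)
print(all(np.allclose(u, np.roll(v, 1)) for u, v in zip(shifted, details)))

# The decimated transform is not shift-invariant: detail energy changes with the shift.
pulse = np.array([0, 0, 0, 1, 1, 0, 0, 0], dtype=float)
print(np.sum(haar_dwt_detail(pulse) ** 2),
      np.sum(haar_dwt_detail(np.roll(pulse, 1)) ** 2))  # about 1.0 vs 0.0
```

The last line shows the effect the abstract attributes to downsampling: the same short pulse, delayed by one sample, lands entirely in the approximation band of the decimated transform, whereas the SWT subbands simply move with the input.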
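The framing, CFP, and ARMA steps described above can be sketched for a single subband as follows. This is an illustration under stated assumptions, not the authors' code: the frame length and hop, the centred five-frame context with edge-frame padding, and the ARMA form (the smoother used in MVA feature post-processing, which averages the previous M outputs with the current and next M inputs) are all assumptions, and `frame_abs`, `concat_frames`, and `arma_smooth` are hypothetical names.

```python
import numpy as np


def frame_abs(x, frame_len, hop):
    """Split a subband signal into frames (one per column) and take magnitudes."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[:, None] + hop * np.arange(n_frames)[None, :]
    return np.abs(x[idx])                      # (frame_len, n_frames), nonnegative


def concat_frames(V, context=5):
    """CFP sketch (assumed centred context): column t stacks `context` frames around t."""
    half = context // 2
    padded = np.pad(V, ((0, 0), (half, half)), mode="edge")   # repeat edge frames
    cols = [padded[:, t:t + context].reshape(-1, order="F")  # frames in time order
            for t in range(V.shape[1])]
    return np.stack(cols, axis=1)              # (context * frame_len, n_frames)


def arma_smooth(V, order=2):
    """Assumed ARMA smoother along time, applied to every row independently.

    y[t] = (y[t-M] + ... + y[t-1] + x[t] + ... + x[t+M]) / (2M + 1);
    the first and last M columns are left unfiltered.
    """
    T = V.shape[1]
    Y = V.astype(float).copy()
    for t in range(order, T - order):
        past = Y[:, t - order:t].sum(axis=1)         # previous M filtered outputs
        future = V[:, t:t + order + 1].sum(axis=1)   # current and next M inputs
        Y[:, t] = (past + future) / (2 * order + 1)
    return Y


x = np.sin(2 * np.pi * 0.05 * np.arange(400))   # stand-in for one SWT subband
V = frame_abs(x, frame_len=32, hop=16)
C = concat_frames(V, context=5)
S = arma_smooth(C, order=2)
print(V.shape, C.shape, S.shape)   # (32, 24) (160, 24) (160, 24)
```

Since each ARMA output is an average of nonnegative values, the smoothed matrix stays nonnegative, so NMF can still be applied to it.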
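The NMF step with the KL cost can be sketched with the standard Lee and Seung multiplicative updates. The supervised usage shown here (learning separate speech and noise bases on clean training matrices, then holding the concatenated basis fixed and estimating only the activations for the noisy matrix) is a common NMF enhancement setup that we assume; the random matrices, ranks, and iteration count are placeholders, and `nmf_kl` and `kl_divergence` are hypothetical names.

```python
import numpy as np

EPS = 1e-12   # guards against division by zero and log(0)


def kl_divergence(V, Vhat):
    """Generalised KL divergence D(V || Vhat), the NMF cost."""
    return np.sum(V * np.log((V + EPS) / (Vhat + EPS)) - V + Vhat)


def nmf_kl(V, rank=None, W=None, n_iter=200, seed=0):
    """Multiplicative-update NMF under the KL cost (Lee and Seung).

    If W is given it is held fixed and only H is learned (supervised use).
    """
    if W is None and rank is None:
        raise ValueError("give either rank or a fixed basis W")
    rng = np.random.default_rng(seed)
    fixed_W = W is not None
    if not fixed_W:
        W = rng.random((V.shape[0], rank)) + EPS
    H = rng.random((W.shape[1], V.shape[1])) + EPS
    ones = np.ones_like(V, dtype=float)
    for _ in range(n_iter):
        H *= (W.T @ (V / (W @ H + EPS))) / (W.T @ ones + EPS)
        if not fixed_W:
            W *= ((V / (W @ H + EPS)) @ H.T) / (ones @ H.T + EPS)
    return W, H


rng = np.random.default_rng(1)
V_speech = rng.random((40, 60))   # placeholders for CFP/ARMA matrices of training data
V_noise = rng.random((40, 60))
W_s, _ = nmf_kl(V_speech, rank=8)
W_n, _ = nmf_kl(V_noise, rank=8)
W = np.hstack([W_s, W_n])          # joint basis, kept fixed for the noisy input
V_mix = V_speech + V_noise
_, H = nmf_kl(V_mix, W=W)
S_hat = W_s @ H[:8]                # speech part of the approximation
N_hat = W_n @ H[8:]                # noise part of the approximation
```

`S_hat` and `N_hat` are the speech and noise parts of the approximation of `V_mix`; a ratio mask built from them is what selects the speech components.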
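The abstract does not give the ssRM formula, so the following sketch only illustrates its ingredients. It assumes the common magnitude definitions sRM = S/(S+N) and srRM = sqrt(S²/(S²+N²)), defines the NCCC per frame as a normalized inner product without mean removal, and blends the two masks using that coefficient as a weight. The blending rule in `blended_mask` is hypothetical and is not the paper's ssRM; all function names are likewise illustrative.

```python
import numpy as np

EPS = 1e-12


def standard_ratio_mask(S, N):
    """Assumed sRM: magnitude ratio S / (S + N)."""
    return S / (S + N + EPS)


def sqrt_ratio_mask(S, N):
    """Assumed srRM: square root of the power ratio S^2 / (S^2 + N^2)."""
    return np.sqrt(S ** 2 / (S ** 2 + N ** 2 + EPS))


def nccc(A, B):
    """Per-column normalized cross-correlation (no mean removal); in [0, 1] for A, B >= 0."""
    num = np.sum(A * B, axis=0)
    den = np.sqrt(np.sum(A ** 2, axis=0) * np.sum(B ** 2, axis=0)) + EPS
    return num / den


def blended_mask(S, N, V):
    """HYPOTHETICAL ssRM-style blend: illustrative weighting, not the paper's formula."""
    rho = np.clip(nccc(S, V), 0.0, 1.0)[None, :]   # one weight per frame (column)
    return rho * standard_ratio_mask(S, N) + (1.0 - rho) * sqrt_ratio_mask(S, N)


S = np.array([[3.0, 0.0], [1.0, 2.0]])   # toy speech estimate (e.g. S_hat from NMF)
N = np.array([[1.0, 2.0], [1.0, 0.0]])   # toy noise estimate
V = S + N                                 # toy mixture matrix
M = blended_mask(S, N, V)
enhanced = M * V                          # masked mixture
```

Because the blend is a convex combination of two masks that lie in [0, 1], the result also stays in [0, 1]. In the full pipeline the masked matrix would be mapped back to subband frames and passed through the inverse SWT; that step is omitted here.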

References

Boll, S. (1979). Suppression of acoustic noise in speech using spectral subtraction. IEEE Transactions on Acoustics, Speech, and Signal Processing, 27(2), 113–120.

Udrea, R. M., Vizireanu, N. D., & Ciochina, S. (2008). An improved spectral subtraction method for speech enhancement using a perceptual weighting filter. Digital Signal Processing, 18(4), 581–587.

Malah, D., Cox, R.V., & Accardi, (1999). Tracking speech-presence uncertainty to improve speech enhancement in non-stationary noise environments. IEEE International Conference on Acoustics, Speech, Signal Processing (pp. 789–792).

McAulay, R., & Malpass, M. (1980). Speech enhancement using a soft-decision noise suppression filter. IEEE Transactions on Acoustics, Speech, and Signal Processing, ASSP-28(2), 137–145.

Lotter, T., & Vary, P. (2005). Speech enhancement by map spectral amplitude estimation using a super-Gaussian speech model. EURASIP Journal of Applied Signal Processing, 2005, 1110–1126.

Ephraim, Y., & Malah, D. (1984). Speech enhancement using a minimum-mean square error short-time spectral amplitude estimator. IEEE Transactions on Acoustics, Speech, and Signal Processing, 32(6), 1109–1121.

Scalart, P., et al. (1996). Speech enhancement based on a priori signal to noise estimation. Acoustics, Speech, and Signal Processing, 629–632.

Ephraim, Y., & Van, T. H. L. (1995). A signal subspace approach for speech enhancement. IEEE Transactions on Speech and Audio Processing, 3(4), 251–266.

Narayanan, A., & Wang, D. (2013). Ideal ratio mask estimation using deep neural networks for robust speech recognition. IEEE Int. Conf. Acoustics, Speech, Signal Process, 7092–7096.

Tu, Y.-H., Du, J., & Lee, C.-H. (2017). A speaker-dependent approach to Single-Channel joint speech separation and acoustic modeling based on deep neural networks for robust recognition of multi-talker speech. Journal of Signal Processing System, 90, 963–973.

Xu, Y., Du, J., Dai, L. R., & Lee, C. H. (2015). A regression approach to speech enhancement based on deep neural networks. IEEE-ACM Trans. Audio, Speech, Language Processing, 23(1), 7–19.

Lu, X., Tsao, Y., Matsuda, S., & Hori, C. (2013). Speech enhancement based on deep denoising autoencoder. INTERSPEECH, 436–440.

Hea, Y., Suna, G. G., & Han, J. (2015). Spectrum enhancement with sparse coding for robust speech recognition. Digital Signal Processing, 43, 59–70.

Adler, A., Elad, M., Hel, Y., & Rivlin, E. (2014). Sparse coding with anomaly detection. Journal of Signal Processing System, 79, 179–188.

Wilson, K. W., Raj, B., Smaragdis, P., & Divakaran, A. (2008). Speech denoising using nonnegative matrix factorization with priors. ICASSP, 4029–4032.

Mowlaee, P., Saeidi, R., & Stylanou, Y. (2014). Phase importance in speech processing applications. Proc. INTERSPEECH.

Wang, S.-S., Chern, A., Tsao, Y., Hung, J.-W., Lu, X., Lai, Y.-H., & Su, B. (2016). Wavelet speech enhancement based on nonnegative matrix factorization. IEEE Signal Processing Letters, 23, 1101–1105.

Lu, C.-T., &Wang, H.-C., (2007). Speech enhancement using hybrid gain factor in critical-band-wavelet-packet transform. Digital Signal Processing (vol. 17, no. 1, pp. 172-188).

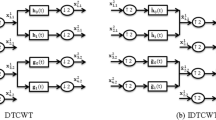

Mortazavi, S. H., & Shahrtash, S. M. (2008). Comparing Denoising performance of DWT, DWPT, SWT and DT-CWT for partial discharge signals. International Universities Power Engineering Conference, 1–6.

Wang, Y., Narayanan, A., & Wang, D. L. (2014). On training targets for supervised speech separation. IEEE/ACM Trans. Audio, Speech, Lang. Processing, 22(12), 1849–1858.

Williamson, D. S., Wang, Y. X., & Wang, D. L. (2016). Complex ratio masking for monaural speech separation. IEEE-ACM trans. Audio, Speech, Lang. Processing, 24(3), 483–493.

Smaragdis, P. (2005). From learning music to learning to separate. Forum Acusticum.

Lee, D. D., & Seung, H. S. (2001). Algorithms for non-negative matrix factorization. Advances in Neural Information Processing Systems, 13, 556–562.

Mallat, S. (1998). A wavelet tour of signal processing. San Diego: Academic.

Shensa, M. J. (1992). The Discrete Wavelet Transform Wedding A Trouse and Mallat Algorithm. IEEE Transactions on Signal Processing, 40, 10.

Mohammadiha, N., Taghia, J., & Leijon, A. (2012). Single channel speech enhancement using bayesian nmf with recursive temporal updates of prior distributions. Proc. ICASSP, 4561–4564.

Chen, C.-P., & Bilmes, J. (2007). MVA processing of speech features. IEEE Transactions on Audio, Speech and Language Processing, 257–270.

Wu, Z., et al., (2013). Exemplar-based voice conversion using non-negative spectrogram deconvolution. 8th ISCA Speech Synthesis Workshop, pp. 201-206.

Wu, Z., Chng, E. S., & Li, H. (2014). Joint nonnegative matrix factorization for exemplar-based voice conversion. Multimedia Tools and Applications, 74.

Subcommittee, I. E. E. E. (1969). IEEE recommended practice for speech and quality measurements. IEEE Transactions on Audio and Electroacoustics, AE-17(3), 225–246.

Hirsch, H.-G., & Pearce, D., (2000). The aurora experimental framework for the performance evaluation of speech recognition systems under noisy conditions. ASR2000-Automatic Speech Recognition: Challenges for the new Millennium ISCA Tutorial And Research Workshop (ITRW).

Varga, A., & Steeneken, H. (1993). Assessment for automatic speech recognition: II. NOISEX-92: A database and an experiment to study the effect of additive noise on speech recognition systems. Speech Communication, 12(3), 247–225.

Kates, J. M., & Arehart, K. H. (2010). The hearing-aid speech quality index (HASQI). Journal of the Audio Engineering Society, 58(5), 363–381.

Kates, J. M., & Arehart, K. H. (2014). The hearing-aid speech perception index (HASPI). Speech Communication, 65, 75–93.

Hollier, M., Rix, A., Beerends, J., & Hekstra, A. (2001). Perceptral evaluation of speech quality (pesq)-a new method for speech quality assessment of tepephone networks and codecs. ICASSP, 749–752.

Taal, C.H., Hendriks, R.C., Heusdens, R., & Jensen, J., (2011). An algorithm for intelligibility prediction of time-frequency weighted noisy speech. Upper Saddle River: Prentice Hall (vol. 19, (7), pp. 2125-2136).

Vincent, E., Gribonval, R., & Fevote, C. (2006). Performance measurement in blind audio source separation. IEEE Transactions on Audio, Speech and Language Processing, 14(4), 1462–1469.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61671418) and the Advanced Research Fund of University of Science and Technology of China.

Cite this article

Islam, M.S., Al Mahmud, T.H., Khan, W.U. et al. Supervised Single Channel Speech Enhancement Based on Stationary Wavelet Transforms and Non-negative Matrix Factorization with Concatenated Framing Process and Subband Smooth Ratio Mask. J Sign Process Syst 92, 445–458 (2020). https://doi.org/10.1007/s11265-019-01480-7

DOI: https://doi.org/10.1007/s11265-019-01480-7