Abstract

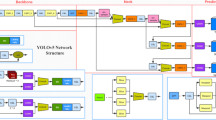

Ship draft reading is an essential step in a draft survey. At present, a ship’s draft is determined primarily by manual observation, which is easily affected by complex situations such as large waves on the water, water obstacles, water traces, tilted draft characters, and rusted draft characters. Traditional image-based methods of ship draft reading adapt poorly to these situations, and existing deep learning-based methods lack robustness across them. In this paper, we propose a method that combines image processing and deep learning and adapts to a variety of complex situations, particularly large waves on the water and water obstacles. We also propose a small U\(^\text {2}\)-NetP neural network for semantic segmentation that incorporates coordinate attention, which enhances the capture of spatial location information. Its segmentation accuracy reaches 96.47%, exceeding that of the original network. To keep the method lightweight while handling multiple tasks, we use the lightweight Yolov5n network architecture to detect the ship draft characters; it achieves an mAP_0.5 of 98% and keeps the draft reading pipeline lightweight. Experimental results on a real dataset covering many difficult situations demonstrate the state-of-the-art performance of the proposed reading approach compared with other existing deep learning methods. The average error of the draft reading is within ±0.005 m, and millimeter-level precision is achievable. The method can serve as a valuable reference for manual reading. In addition, our work lays the groundwork for future research on deployment on edge devices.
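The abstract does not spell out how a segmented waterline and detected draft characters become a numeric reading. As a minimal sketch, and assuming (not taken from the paper) that the final step fits a straight line from image row to draft value using the detected marks and then evaluates that line at the waterline row, the idea might look like the following. The function names, pixel rows, and mark values are all hypothetical.

```python
from statistics import mean


def fit_row_to_draft(rows, drafts_m):
    """Least-squares line draft = slope * row + intercept.

    rows     : pixel rows of detected draft marks (hypothetical detector output)
    drafts_m : draft value in meters printed at each mark
    """
    if len(rows) != len(drafts_m) or len(rows) < 2:
        raise ValueError("need at least two (row, draft) pairs of equal length")
    r_bar = mean(rows)
    d_bar = mean(drafts_m)
    sxx = sum((r - r_bar) ** 2 for r in rows)
    if sxx == 0:
        raise ValueError("all marks lie on the same pixel row")
    sxy = sum((r - r_bar) * (d - d_bar) for r, d in zip(rows, drafts_m))
    slope = sxy / sxx
    intercept = d_bar - slope * r_bar
    return slope, intercept


def read_draft(waterline_row, rows, drafts_m):
    """Evaluate the fitted line at the waterline row (assumed to come from segmentation)."""
    slope, intercept = fit_row_to_draft(rows, drafts_m)
    return slope * waterline_row + intercept


if __name__ == "__main__":
    # Illustrative values only: three marks spaced 0.2 m apart, 100 px between marks.
    mark_rows = [100, 200, 300]
    mark_values_m = [9.4, 9.2, 9.0]
    print(f"{read_draft(350, mark_rows, mark_values_m):.3f}")  # prints 8.900
```

A linear fit of this kind assumes the marks are evenly spaced in the image, so any perspective distortion or tilt of the characters would need to be corrected before the fit.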

Data Availability

Our dataset was provided by a company and is not freely accessible. To support progress in the field, and after extensive discussion with the company, we have been permitted to publish a small number of sample images (https://github.com/lwh104/draft-reading).

Code Availability

Related code is available as open source.

Funding

This research was supported by the National Natural Science Foundation of China (62276032), "Method Study on the Grooming Behavior Intelligent Identification of Tephritid Fly via Integrating Occurrence Domain of Action and Spatiotemporal Characteristics of Behavior", and by the China University Industry-University-Research Innovation Fund, New Generation Information Technology Innovation Project 2020 (2020ITA03012), "Research and Development of an Artificial Intelligence System for Automatic Detection and Statistics of Insect Grooming Behavior: Taking Bactrocera minax as an Example".

Ethics declarations

Ethics Approval

Not applicable.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

The authors affirm that human research participants provided informed consent for publication of the images in Figure 1.

Conflict of Interest/Competing Interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, W., Zhan, W., Han, T. et al. Research and Application of U\(^2\)-NetP Network Incorporating Coordinate Attention for Ship Draft Reading in Complex Situations. J Sign Process Syst 95, 177–195 (2023). https://doi.org/10.1007/s11265-022-01816-w

DOI: https://doi.org/10.1007/s11265-022-01816-w