Abstract

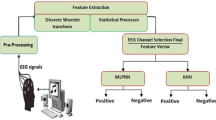

Brain–computer interfaces (BCIs) have opened a new era in neuroscience and have improved the quality of life of severely disabled patients, allowing them to regain the ability to act on their will through cognitive, expressive, and affective brain activity. In this paper, a wireless electroencephalogram (EEG)-based BCI system was developed that acquires EEG signals with an Emotiv EPOC headset in order to recognize facial actions. The dimensionality of the extracted EEG feature vectors is reduced by the wavelet transform, and the reduced signals are then classified into six clusters by a support vector machine (SVM) with a Gaussian kernel function. A one-order wavelet transform yields higher correct rates than a three-order wavelet transform. To control an electronic system smoothly in real time, the amount of sampled data must be reduced; when computation time is taken into account, the one-order wavelet transform with 32 samples is the preferred choice. The experimental results show a promising correct rate for facial-action recognition with the proposed real-time BCI system.
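The pipeline above reduces each EEG feature vector with a wavelet transform before SVM classification. The sketch below illustrates that reduction step and the Gaussian kernel, under stated assumptions. The abstract does not name the wavelet family, so a Haar wavelet is assumed, keeping only the approximation coefficients at each level. The helpers `haar_dwt_approx` and `gaussian_kernel` and the width parameter `sigma` are hypothetical. The sketch does not reproduce the authors' SVM training or their six-class setup.

```python
import math
from typing import List, Sequence


def haar_dwt_approx(signal: Sequence[float], levels: int = 1) -> List[float]:
    """Return the Haar approximation coefficients after `levels` decompositions.

    Assumption: Haar wavelet. Each level pairs adjacent samples and keeps
    (a + b) / sqrt(2), discarding the detail coefficients (a - b) / sqrt(2),
    so the vector length halves per level.
    """
    if levels < 1:
        raise ValueError("levels must be at least 1")
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    approx = list(signal)
    for _ in range(levels):
        approx = [(approx[i] + approx[i + 1]) / math.sqrt(2)
                  for i in range(0, len(approx), 2)]
    return approx


def gaussian_kernel(x: Sequence[float], y: Sequence[float],
                    sigma: float = 1.0) -> float:
    """Gaussian (RBF) kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have equal length")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


if __name__ == "__main__":
    # Synthetic 32-sample window (illustrative data, not EEG recordings).
    window = [float(i % 4) for i in range(32)]
    one_level = haar_dwt_approx(window, levels=1)
    three_level = haar_dwt_approx(window, levels=3)
    print(len(one_level), len(three_level))  # 16 4
    print(gaussian_kernel(one_level, one_level))  # 1.0
```

With a 32-sample window, one decomposition level keeps 16 coefficients and three levels keep only 4. This is consistent with the trade-off described above, where the one-order transform retains more detail than the three-order one. For the classifier itself, an off-the-shelf SVM such as scikit-learn's `SVC(kernel="rbf")` could be used. It parameterizes the same kernel as exp(-gamma * ||x - y||^2), so gamma corresponds to 1 / (2 * sigma^2).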


References

Lin, J.-S., Wang, M., Lia, P.-Y., & Li, Z. (2014). An SSVEP-based BCI system for SMS in a mobile phone. Applied Mechanics and Materials, 513–517, 412–415.

Pfurtscheller, G., Müller, G. R., Pfurtscheller, J., Gerner, H. J., & Rupp, R. (2003). Thought-control of functional electrical stimulation to restore hand grasp in a patient with tetraplegia. Neuroscience Letters, 351, 33–36.

Lin, J.-S., Wang, M., & Hsieh, C.-H. (2014). An SSVEP-based BCI system with SOPC platform for electric wheelchairs. Transactions on Computer Science and Technology, 3(2), 35–40.

Lin, J.-S., & Huang, S.-M. (2013). An FPGA-based brain–computer interface for wireless electric wheelchairs. Applied Mechanics and Materials, 283–287, 1616–1621.

Bos Nijholt, D. P. O., & Reuderink, B. (2009). Turning shortcomings into challenges: Brain–computer interfaces for games. Entertainment Computing, 1(2), 85–94.

Mühl, H., Gürkök, H. D., Bos, P. O., Thurlings, M., Scherffig, L., Duvinage, M., et al. (2010). Bacteria Hunt. Journal of Multimodal User Interfaces, 4, 11–25.

Hal, V., Rhodes, S., Dunne, B., & Bossemeyer, R. (2014). Low-cost EEG-based sleep detection. In Proceedings of the 36th IEEE annual international conference (pp. 4571–4574).

Thobbi, A., Kadam, R., & Sheng, W. (2010). Achieving remote presence using a humanoid robot controlled by a non-invasive BCI device. International Journal on Artificial Intelligence and Machine Learning, 10, 41–45.

Szarfir, A., & Signorile, R. (2011). An exploration of the utilization of electroencephalography and neural nets to control robots. Proceedings of Human–Computer Interaction-INTERACT, 2011, 186–194.

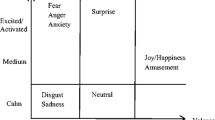

Ramirez, R., & Vamvakousis, Z. (2012). Detecting emotion from EEG signals using the Emotive Epoc device. Lecture Notes in Computer Science, 7670, 175–184.

Duvinage, M., Castermans, T., Petieau, M., Hoellinger, T., Cheron, G., & Dutoit, T. (2013). Performance of the Emotiv EPOC headset for P300-based applications. BioMedical Engineering OnLine, 12, 56. http://www.biomedical-engineering-online.com/content/12/1/56.

Fraga, T., Pichiliani, M., & Louro, D. (2013). Experimental art with brain controlled interface. Lecture Notes in Computer Science, 8009, 642–651.

Khushaba, R. N., Wise, C., Kodagoda, S., Louviere, J., Kahn, B. E., & Townsend, C. (2013). Consumer neuroscience: Assessing the brain response to marketing stimuli using electroencephalogram (EEG) and eye tracking. Expert Systems with Applications, 40(9), 3803–3812.

Liu, Y., Jiang, X., Cao, T., & Wan, F. (2012). Implementation of SSVEP based BCI with Emotiv EPOC. In Virtual environments human–computer interfaces and measurement systems (VECIMS) (pp. 34–37).

Grude, S., Freeland, M., Yang, C., & Ma, H. (2013). Controlling mobile Spykee robot using Emotiv Nero headset. In Proceedings of the 32nd Chinese control conference (pp. 5927–5932).

Vetterli, M., & Kovacevic, J. (1995). Wavelets and subband coding. London: Prentice Hall.

Hajibabazadeh, M., & Azimirad, V. (2014). Brain–robot interface: Distinguishing left and right hand EEG signals through SVM. In 2014 Second RSI/ISM international conference on robotics and mechatronics (pp. 813–816).

Acknowledgments

This research was sponsored by the Ministry of Science and Technology of Taiwan under Grant NSC103-2221-E-167-027.