Abstract

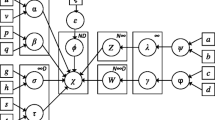

In this paper, we present a variational Bayesian learning approach to the infinite Dirichlet mixture model (VarInDMM), which inherits the infinite Dirichlet mixture model's demonstrated effectiveness in modeling proportional data. Because it is built on the Dirichlet process mixture model, VarInDMM can be interpreted as a mixture model with a countably infinite number of components, and it can determine the optimal number of components from the observed data. By introducing an extended variational inference framework, we further obtain an analytically tractable solution for estimating the posterior distributions of the mixture model's parameters. Experimental results on both synthetic and real data demonstrate good performance in object categorization and text categorization.
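To make the idea of a countably infinite mixture more concrete, the following is a minimal sketch of the truncated stick-breaking construction that is commonly used to represent Dirichlet process mixing weights. It is an illustration only, not the authors' implementation: the abstract does not state which representation VarInDMM uses, and the function names, the truncation level of 20, the concentration value `alpha=1.0`, and the weight threshold are all assumptions chosen for the example.

```python
import random


def stick_breaking_weights(alpha, truncation, seed=0):
    """Draw mixing weights from a truncated stick-breaking construction.

    alpha: concentration parameter of the Dirichlet process (assumed > 0).
    truncation: number of components kept (hypothetical truncation level).
    """
    rng = random.Random(seed)
    weights = []
    remaining = 1.0  # length of the stick not yet assigned to any component
    for _ in range(truncation - 1):
        v = rng.betavariate(1.0, alpha)  # fraction broken off the remaining stick
        weights.append(v * remaining)
        remaining *= 1.0 - v
    weights.append(remaining)  # last component takes the rest, so weights sum to 1
    return weights


def effective_components(weights, threshold=1e-2):
    """Count components whose weight exceeds a (hypothetical) threshold."""
    return sum(w > threshold for w in weights)


weights = stick_breaking_weights(alpha=1.0, truncation=20)
print(len(weights), round(sum(weights), 6), effective_components(weights))
```

Although the truncation allows 20 components, most of the probability mass typically falls on only a few of them. This is the sense in which such a model can let the data decide how many components are effectively used. In an actual inference procedure the weights would be estimated from the observations rather than sampled from the prior as they are here.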



Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant No. 513335004, the Program for Science & Technology Innovation Talents in Universities of Henan Province under Grant No. 18HASTIT022, the Plan For Scientific Innovation Talent of He’nan Province under Grant No. 184100510012, the Foundation for University Key Teacher of Henan Province under Grant No. 2016GGJS-141, the Foundation of Henan Educational Committee under Grant Nos. 16A520025 and 18A520047, the Open Project Foundation of Information Technology Research Base of Civil Aviation Administration of China under Grant No. CAAC-ITRB-201702, the Yunnan Provincial Department of Education Science Research Fund Project under Grant No. 2017ZDX045, the Heilongjiang Natural Science Foundation under Grant No. H2016100, and the Innovation Scientists and Technicians Troop Construction Projects of He’nan Province.


About this article

Cite this article

Lai, Y., He, W., Ping, Y. et al. Variational Bayesian Inference for Infinite Dirichlet Mixture Towards Accurate Data Categorization. Wireless Pers Commun 102, 2307–2329 (2018). https://doi.org/10.1007/s11277-018-5723-4

DOI: https://doi.org/10.1007/s11277-018-5723-4