Abstract

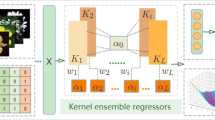

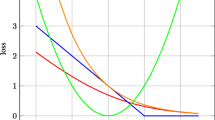

In this paper, a co-regularized kernel ensemble regression scheme is proposed. In this scheme, multiple kernel regressors are incorporated simultaneously into a unified ensemble regression framework and co-regularized by minimizing the total loss of the ensemble in a Reproducing Kernel Hilbert Space (RKHS). As a result, a kernel regressor that fits the data more precisely automatically receives a larger weight, which improves the overall performance of the ensemble. Compared with several single and ensemble regression methods, such as Gradient Boosting, Tree Regression, Support Vector Regression, Ridge Regression and Random Forest, our proposed method achieves the best performance on regression and classification tasks on several UCI datasets.
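
The abstract does not give the exact objective, so the following is only a minimal sketch of the general idea under our own assumptions, not the authors' formulation. Several kernel ridge regressors, each using a Gaussian (RBF) kernel with a different bandwidth, are fitted independently; ensemble weights are then set from each regressor's held-out squared loss L_m by solving min Σ w_m^r L_m subject to Σ w_m = 1 (r > 1), whose closed form w_m ∝ L_m^(1/(1−r)) gives a larger weight to a regressor that fits better. This two-stage weighting is a swapped-in simplification of the joint co-regularized optimization described above, and every function name (`fit_kernel_ensemble`, `predict_ensemble`, ...) and parameter (`gammas`, `lam`, `r`) is hypothetical.

```python
import numpy as np

# Hypothetical sketch: NOT the paper's co-regularized objective, only an
# illustration of "better-fitting kernel regressors receive larger weights".


def rbf_kernel(A, B, gamma):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))


def fit_krr(X, y, gamma, lam):
    """Kernel ridge regression in one RKHS: alpha = (K + lam * I)^-1 y."""
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)


def predict_krr(X_train, alpha, X_new, gamma):
    """Evaluate f(x) = sum_i alpha_i * k(x, x_i) at the rows of X_new."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha


def fit_kernel_ensemble(X_tr, y_tr, X_val, y_val, gammas, lam=1e-3, r=2.0):
    """Fit one regressor per kernel, then weight them by held-out loss.

    Assumed weighting rule: minimise sum_m w_m**r * L_m s.t. sum_m w_m = 1,
    whose solution is w_m proportional to L_m ** (1 / (1 - r)) for r > 1.
    """
    alphas, losses = [], []
    for g in gammas:
        alpha = fit_krr(X_tr, y_tr, g, lam)
        resid = predict_krr(X_tr, alpha, X_val, g) - y_val
        alphas.append(alpha)
        losses.append(np.mean(resid ** 2) + 1e-12)  # epsilon avoids 0 ** negative
    losses = np.asarray(losses)
    w = losses ** (1.0 / (1.0 - r))
    return alphas, w / w.sum(), losses


def predict_ensemble(X_tr, alphas, w, X_new, gammas):
    """Weighted sum of the individual kernel regressors' predictions."""
    return sum(wm * predict_krr(X_tr, a, X_new, g) for wm, a, g in zip(w, alphas, gammas))


if __name__ == "__main__":
    X = np.linspace(0.0, 1.0, 40).reshape(-1, 1)
    y = np.sin(2 * np.pi * X[:, 0])
    X_tr, y_tr, X_val, y_val = X[::2], y[::2], X[1::2], y[1::2]
    gammas = [0.1, 1.0, 10.0, 100.0]
    alphas, w, losses = fit_kernel_ensemble(X_tr, y_tr, X_val, y_val, gammas)
    print("weights:", np.round(w, 3))
```

With r = 2 the rule reduces to weights proportional to 1/L_m. Because the ensemble output is a convex combination of the individual predictions, its squared error can never exceed the worst single regressor's error, which is one reason weighted kernel ensembles are attractive; larger r spreads the weights more evenly across kernels.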

Acknowledgements

This work was funded in part by the National Natural Science Foundation of China (Nos. 61572240, 61601202), the Natural Science Foundation of Jiangsu Province (No. BK20140571) and the Open Project Program of the National Laboratory of Pattern Recognition (NLPR) (No. 201600005).

Additional information

This article belongs to the Topical Collection: Special Issue on Deep vs. Shallow: Learning for Emerging Web-scale Data Computing and Applications

Guest Editors: Jingkuan Song, Shuqiang Jiang, Elisa Ricci, and Zi Huang

About this article

Cite this article

Wornyo, D.K., Shen, XJ., Dong, Y. et al. Co-regularized kernel ensemble regression. World Wide Web 22, 717–734 (2019). https://doi.org/10.1007/s11280-018-0576-z

DOI: https://doi.org/10.1007/s11280-018-0576-z