Abstract

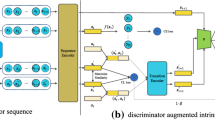

Reinforcement learning (RL) has achieved strong performance in recommendation systems (RSs) by accounting for both immediate and future rewards from users. However, existing RL-based recommendation methods assume that only a single type of interaction behavior (e.g., clicking) exists between users and items, whereas practical recommendation scenarios involve multiple types of user interaction behaviors (e.g., adding to cart, purchasing). In this paper, we propose a Multi-Task Reinforcement Learning model for multi-behavior Recommendation (MTRL4Rec), in which a single agent produces different actions for a user's different behaviors. Specifically, we first introduce a modular network in which modules can be shared or isolated to capture the commonalities and differences across users' behaviors. A task routing network then generates routes through the modular network for each behavior task. We adopt a hierarchical reinforcement learning architecture to improve the efficiency of MTRL4Rec. Finally, we propose a training algorithm, together with an improved variant of it, for training the model. Experiments on two public datasets validate the effectiveness of MTRL4Rec.
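The abstract does not specify the architecture in detail, so the following is only a minimal sketch of the general idea of routing behavior tasks through shared modules, not the MTRL4Rec implementation. It assumes soft routing (a softmax over per-task logits) and plain linear modules; the class `ModularLayer`, its parameters, and the task names `"click"` and `"purchase"` are hypothetical names chosen for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ModularLayer:
    """One layer of small linear modules mixed by per-task routing weights.

    Assumption: routing logits are stored per task here; in a learned model
    they would be produced by a separate routing network.
    """

    def __init__(self, n_modules, dim, tasks, seed=0):
        rng = random.Random(seed)
        # Each module is a dim x dim weight matrix (stand-in for an MLP block).
        self.modules = [
            [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(dim)]
            for _ in range(n_modules)
        ]
        # One routing logit per (task, module) pair.
        self.route_logits = {
            task: [rng.uniform(-1.0, 1.0) for _ in range(n_modules)]
            for task in tasks
        }

    def route(self, task):
        """Return the task's mixing weights over modules (they sum to 1)."""
        return softmax(self.route_logits[task])

    def forward(self, state, task):
        """Weighted sum of module outputs, using the task's route."""
        weights = self.route(task)
        out = [0.0] * len(state)
        for weight, module in zip(weights, self.modules):
            for i, row in enumerate(module):
                out[i] += weight * sum(r * s for r, s in zip(row, state))
        return out


# The same user state is routed differently for each behavior task.
layer = ModularLayer(n_modules=3, dim=4, tasks=["click", "purchase"])
state = [0.1, -0.2, 0.3, 0.0]
click_out = layer.forward(state, "click")
purchase_out = layer.forward(state, "purchase")
```

In this sketch, a module with a large routing weight for several tasks is effectively shared among them, while a weight near zero isolates the module from a task. This is one way to read "shared or isolated" under the soft-routing assumption.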

Availability of data and materials

All the datasets are publicly available, and the links are attached in the footnotes.

Acknowledgements

This research was partially supported by the NSFC (62376180, 62176175), the major project of natural science research in Universities of Jiangsu Province (21KJA520004), Suzhou Science and Technology Development Program (SYC2022139), the Priority Academic Program Development of Jiangsu Higher Education Institutions and the Exploratory Self-selected Project of the State Key Laboratory of Software Development Environment.

Author information

Contributions

Huiwang Zhang: Conceptualization, Methodology, Writing - Original Draft. Pengpeng Zhao: Conceptualization, Methodology, Writing - Review & Editing. Xuefeng Xian: Writing - Review & Editing. Victor S. Sheng: Writing - Review & Editing. Yongjing Hao: Conceptualization, Review & Editing. Zhiming Cui: Writing - Review & Editing.

Ethics declarations

Ethical Approval

Not Applicable

Competing Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article belongs to the Topical Collection: Special Issue on Web Information Systems Engineering 2022. Guest Editors: Richard Chbeir, Helen Huang, Yannis Manolopoulos, Fabrizio Silvestri.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, H., Zhao, P., Xian, X. et al. Click is not equal to purchase: multi-task reinforcement learning for multi-behavior recommendation. World Wide Web 26, 4153–4172 (2023). https://doi.org/10.1007/s11280-023-01215-6

DOI: https://doi.org/10.1007/s11280-023-01215-6