Abstract

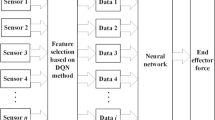

When a robot end-effector contacts human skin, it is difficult to adjust the contact force autonomously in an unknown environment. This paper therefore proposes a robot force control algorithm based on reinforcement learning with a state transition model. The dynamic relationship between the robot end-effector and the skin is established using an impedance control model. Because the reference trajectory is difficult to obtain, a skin mechanical model is established to estimate the environmental boundary for impedance control. Because the impedance control parameters are difficult to tune, a reinforcement learning algorithm that combines a neural network with the cross-entropy method is constructed to search for the control parameters. The state transition model, built with a BP (backpropagation) neural network, can be updated offline, which accelerates the search for optimal control parameters and mitigates the slow convergence of reinforcement learning. The uncertainty of the contact process is handled by a policy search based on probabilistic statistics. Experimental results show that the model-based reinforcement learning force control algorithm produces a smoother contact force than a traditional PID algorithm, with the error remaining essentially within ±0.2 N in the online experiment.
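The abstract combines three ingredients: an impedance law, a skin model that supplies the reference depth, and a cross-entropy search over the impedance parameters. The one-dimensional sketch below shows how these pieces can fit together. It is not the authors' implementation. The Hunt-Crossley skin model (a common choice for soft tissue), every numeric value, the cost function, and the names `hunt_crossley_force`, `simulate`, `cost` and `cem_search` are illustrative assumptions. The learned BP-network state transition model is replaced here by a known simulator so the example stays short.

```python
import numpy as np

# Assumed "true" skin parameters (Hunt-Crossley model); purely illustrative.
K_SKIN, LAM_SKIN, N_EXP = 2000.0, 1000.0, 1.5


def hunt_crossley_force(x, v, k=K_SKIN, lam=LAM_SKIN, n=N_EXP):
    """Contact force (N) for penetration depth x (m) and velocity v (m/s)."""
    if x <= 0.0:
        return 0.0  # tool is not touching the skin
    f = k * x**n + lam * x**n * v
    return max(f, 0.0)  # tissue can push on the tool but not pull it


def simulate(params, f_d=5.0, k_hat=1800.0, m=1.0, dt=1e-3, steps=1500):
    """1-D impedance control against the skin model; returns the force trace.

    params = (b, k_imp): damping and stiffness of the impedance law
        m * a + b * v + k_imp * (x - x_r) = f_d - f_e
    x_r is the reference depth predicted by an imperfect skin model (k_hat).
    """
    b, k_imp = params
    x_r = (f_d / k_hat) ** (1.0 / N_EXP)  # invert the static term k_hat * x**n = f_d
    x, v = 0.0, 0.0  # start at the skin surface, at rest
    forces = np.empty(steps)
    for i in range(steps):
        f_e = hunt_crossley_force(x, v)
        a = (f_d - f_e - b * v - k_imp * (x - x_r)) / m
        v += a * dt  # semi-implicit Euler integration
        x += v * dt
        forces[i] = f_e
    return forces


def cost(params, f_d=5.0):
    """Mean squared force-tracking error over the whole episode."""
    return float(np.mean((simulate(params, f_d=f_d) - f_d) ** 2))


def cem_search(cost_fn, mean, std, lower, upper, iters=15, pop=20, n_elite=5, seed=0):
    """Cross-entropy method: sample, keep the elite, refit a Gaussian."""
    rng = np.random.default_rng(seed)
    mean, std = np.asarray(mean, float), np.asarray(std, float)
    for _ in range(iters):
        samples = np.clip(rng.normal(mean, std, size=(pop, mean.size)), lower, upper)
        costs = np.array([cost_fn(s) for s in samples])
        elite = samples[np.argsort(costs)[:n_elite]]
        mean, std = elite.mean(axis=0), elite.std(axis=0) + 1e-6
    return mean


if __name__ == "__main__":
    init = np.array([150.0, 100.0])  # deliberately sluggish starting guess (b, k_imp)
    best = cem_search(cost, init, std=[40.0, 300.0],
                      lower=[1.0, 0.0], upper=[200.0, 2000.0])
    print("best (b, k_imp):", best, "cost:", cost(init), "->", cost(best))
```

With `k_imp = 0` the law tracks the desired force exactly at steady state. With `k_imp > 0` and an inaccurate skin stiffness estimate (`k_hat` differs from `K_SKIN`), a steady-state force bias appears. The cross-entropy search trades this bias against a faster transient.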

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NNSFC) under Grant Nos. 82172526 and 62102268, and by the Guangdong Basic and Applied Basic Research Foundation, China, under Grant No. 2021A1515011042.

Ethics declarations

Conflict of interest

All authors disclosed no relevant relationships.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xiao, M., Zhang, T., Zou, Y. et al. Study on force control for robot massage with a model-based reinforcement learning algorithm. Intel Serv Robotics 16, 509–519 (2023). https://doi.org/10.1007/s11370-023-00474-6

DOI: https://doi.org/10.1007/s11370-023-00474-6