Abstract

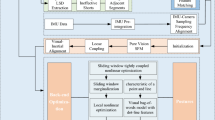

To address the poor performance of traditional point-feature algorithms in low-texture and poorly illuminated scenes, this paper presents a new visual SLAM method based on point–line fusion with line structure constraints. The method first applies a homogenization algorithm to the extracted point features, which resolves the excessive aggregation and overlap of corner points and allows the visual front end to capture environmental information more effectively. In addition, an improved line extraction algorithm that rejects lines by length makes line extraction twice as efficient as the LSD algorithm, and optical flow tracking replaces the traditional matching algorithm to reduce the running time of the system. In particular, the paper proposes a new constraint on the position of the extracted spatial lines: the parallelism of 3D lines is used to correct lines that degrade during projection, and a new line structure constraint is added to the error function of the whole system. The newly constructed error function is optimized over a sliding window, which significantly improves the accuracy and completeness of the maps the system constructs. Finally, the algorithm was tested on a publicly available dataset. The experimental results show that it performs well in point extraction and matching, and that the proposed point–line fusion system outperforms the popular VINS-Mono and PL-VINS algorithms in running time, quality of information obtained, and positioning accuracy.
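The two front-end filtering ideas mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the homogenization algorithm or the length criterion, so this sketch assumes a simple grid-bucketing scheme (keep the strongest corner per cell) and a fixed pixel threshold for line length. The names `homogenize_points`, `reject_short_segments`, `cell_size`, and `min_length` are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]           # (x, y, corner response score)
Segment = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def homogenize_points(points: List[Point], cell_size: float) -> List[Point]:
    """Keep only the strongest corner in each square grid cell.

    Assumption: grid bucketing stands in for the paper's homogenization
    step, whose details are not given in the abstract.
    """
    best: Dict[Tuple[int, int], Point] = {}
    for x, y, score in points:
        key = (int(x // cell_size), int(y // cell_size))
        if key not in best or score > best[key][2]:
            best[key] = (x, y, score)
    # Sort so the output order does not depend on insertion order.
    return sorted(best.values())


def reject_short_segments(segments: List[Segment], min_length: float) -> List[Segment]:
    """Drop line segments shorter than min_length pixels.

    Assumption: a fixed pixel threshold; the paper's actual criterion
    may differ (for example, it could scale with image size).
    """
    return [
        s for s in segments
        if math.hypot(s[2] - s[0], s[3] - s[1]) >= min_length
    ]


if __name__ == "__main__":
    corners = [(10.0, 10.0, 0.9), (12.0, 11.0, 0.5), (100.0, 40.0, 0.3)]
    print(homogenize_points(corners, cell_size=20.0))

    lines = [(0.0, 0.0, 3.0, 4.0), (0.0, 0.0, 30.0, 40.0)]
    print(reject_short_segments(lines, min_length=25.0))
```

In this sketch, clustered corners that fall in the same cell collapse to a single representative, which spreads the retained points across the image, and discarding short segments reduces the number of lines that later stages have to track.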

Data availability

The data and code that support the findings of this study are not openly available because they are being used in further research. They are available from the corresponding author upon reasonable request.

Funding

This work is partly supported by the Education Committee Project of Liaoning, China, under Grant JYTZD2023176.

About this article

Cite this article

Wang, S., Zhang, A., Zhang, Z. et al. Real-time monocular visual–inertial SLAM with structural constraints of line and point–line fusion. Intel Serv Robotics 17, 135–154 (2024). https://doi.org/10.1007/s11370-023-00492-4

DOI: https://doi.org/10.1007/s11370-023-00492-4