Abstract

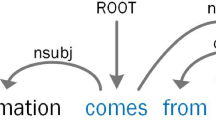

Relation extraction is widely used to find semantic relations between entities in plain text, and dependency trees provide deeper semantic information for this task. However, existing dependency-tree-based models adopt pruning strategies that are either too aggressive or too conservative, leading to either insufficient semantic information or excessive noise in relation extraction models. To overcome this issue, we propose the Neural Attentional Relation Extraction Model with Dual Dependency Trees (DDT-REM), which uses the syntactic dependency tree to capture syntactic features and the semantic dependency tree to capture semantic features. Specifically, we first propose a novel representation learning method that captures dependency relations from both syntax and semantics. Second, for the syntactic dependency tree, we propose a local-global attention mechanism to address semantic deficits. Third, we design an extension of graph convolutional networks (GCNs) to perform relation extraction, which effectively improves extraction accuracy. We conduct experiments on three real-world datasets. Compared with traditional methods, our method improves the F1 scores by 0.3, 0.1, and 1.6 on the three datasets, respectively.
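
As background for the GCN component mentioned above, the following is a minimal sketch of one standard graph convolution layer applied to a dependency tree. It is not the DDT-REM extension, whose details are not given in the abstract. The function names, the toy sentence, the head indices, and the feature sizes are all assumptions made for illustration.

```python
import numpy as np


def adjacency_from_heads(heads):
    """Build a symmetric adjacency matrix with self-loops.

    heads[i] is the 0-based index of token i's head, or -1 for the root.
    (Hypothetical input format, chosen for this sketch.)
    """
    n = len(heads)
    adj = np.eye(n)  # self-loops keep each token's own features
    for child, head in enumerate(heads):
        if head >= 0:
            adj[child, head] = 1.0
            adj[head, child] = 1.0
    return adj


def gcn_layer(adj, features, weight):
    """One standard GCN layer: ReLU(D^-1/2 A D^-1/2 X W)."""
    deg = adj.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)  # deg >= 1 because of self-loops
    norm_adj = adj * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(norm_adj @ features @ weight, 0.0)


# Toy sentence "She founded Acme": token 1 ("founded") is the root.
heads = [1, -1, 1]
rng = np.random.default_rng(0)
features = rng.normal(size=(3, 4))  # one 4-dimensional vector per token
weight = rng.normal(size=(4, 2))    # projects to 2 hidden dimensions

adj = adjacency_from_heads(heads)
hidden = gcn_layer(adj, features, weight)
```

Each row of `hidden` mixes a token's features with those of its neighbours in the tree. Pruning the tree corresponds to removing entries from `adj`, which is where the trade-off between lost information and noise described above arises.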

Cite this article

Li, D., Lei, ZL., Song, BY. et al. Neural Attentional Relation Extraction with Dual Dependency Trees. J. Comput. Sci. Technol. 37, 1369–1381 (2022). https://doi.org/10.1007/s11390-022-2420-2

DOI: https://doi.org/10.1007/s11390-022-2420-2