Abstract

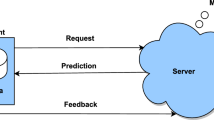

Data on the Internet are scattered across different sites without any deliberate organization, and they are accumulated and updated frequently but not synchronously. It is infeasible to collect all the data in one place to train a global learner for prediction; even exchanging the learners trained at different sites is costly. This paper proposes aggregative-learning. In this paradigm, every site maintains a local learner trained on its own data. When it receives a prediction request, the aggregative-learner at the local site activates and dispatches many mobile agents, which carry the request to potential remote learners. The aggregative-learner then makes its prediction by combining the local prediction with the responses the agents bring back. Theoretical analysis and simulation experiments show the superiority of the proposed method.
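The prediction flow described in the abstract can be illustrated with a small simulation. This is a minimal sketch, not the authors' method. It makes the following assumptions:

- Mobile agents are simulated as plain function calls to a random subset of remote sites.
- An offline site sends back no answer.
- Responses are combined with the local prediction by unweighted majority voting. The abstract does not state which combination rule the paper uses.

The names `Site`, `respond`, `aggregative_predict` and `n_agents` are hypothetical.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Hashable, List, Optional, Sequence

# Hypothetical type: a learner maps a feature vector to a class label.
Learner = Callable[[Sequence[float]], Hashable]


@dataclass
class Site:
    """A site holding a learner trained only on its own local data."""
    name: str
    learner: Learner
    online: bool = True  # simulated availability of the site

    def respond(self, x: Sequence[float]) -> Optional[Hashable]:
        # An agent visiting this site asks the local learner for a prediction;
        # an offline site yields no answer, so the agent returns empty-handed.
        return self.learner(x) if self.online else None


def aggregative_predict(local: Site, remotes: List[Site], x: Sequence[float],
                        n_agents: int, rng: random.Random) -> Hashable:
    """Combine the local prediction with responses carried back by agents.

    Assumptions (not taken from the paper): each agent visits one distinct,
    randomly chosen remote site, and all answers are combined by unweighted
    majority voting.
    """
    votes = [local.learner(x)]  # the local site always answers its own request
    # Dispatch up to n_agents agents, each to a different remote site.
    targets = rng.sample(remotes, min(n_agents, len(remotes)))
    for site in targets:
        answer = site.respond(x)
        if answer is not None:
            votes.append(answer)
    # Counter.most_common orders equal counts by first occurrence, so a tie
    # that includes the local prediction is resolved in its favour.
    return Counter(votes).most_common(1)[0][0]
```

Consider a local threshold learner that predicts 0 for the input `[0.4]`. Suppose four remote learners predict 1 and a fifth remote site is offline. With `n_agents=5`, the vote is four to one, so the aggregated prediction is 1. With `n_agents=0`, only the local prediction counts, so the result is 0.

Sampling without replacement keeps agents from visiting the same site twice. A weighted vote, for example one based on each remote learner's estimated accuracy, would be a natural alternative combination rule.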




Cite this article

Li, M., Wang, W. & Zhou, Z. Exploiting remote learners in Internet environment with agents. Sci. China Ser. F-Inf. Sci. 53, 64–76 (2010). https://doi.org/10.1007/s11432-010-0011-2


DOI: https://doi.org/10.1007/s11432-010-0011-2