Abstract

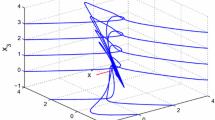

Generalized gradient projection neural network models are proposed to solve nonsmooth convex and nonconvex nonlinear programming problems over a closed convex subset of ℝⁿ. Using Clarke's generalized gradient, a neural network modeled by a differential inclusion is developed, and its dynamical behavior and optimization capabilities for both convex and nonconvex problems are rigorously analyzed in the framework of nonsmooth analysis and differential inclusion theory. First, for nonconvex optimization problems, quasiconvergence results similar to those of previous work are proved. For convex optimization problems, global convergence results are proved: any trajectory of the neural network converges to an asymptotically stable equilibrium point, which is an optimal solution of the primal problem whenever one exists. This result remains valid even if the initial condition is chosen outside the feasible set. In addition, an asymptotic control result involving a Tikhonov-like regularization shows that any trajectory of the revised neural network can be forced to converge toward a particular optimal solution of the primal problem. Finally, two simulation algorithms are designed, one for the optimization problem and one for the control problem, and three typical simulation experiments illustrate the accuracy and efficiency of the theoretical convergence results.
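To make the idea of a gradient projection dynamic concrete, the following is a minimal sketch, not the authors' model or simulation algorithm. It assumes a commonly used projection form, ẋ ∈ P_Ω(x − ∂f(x)) − x, discretized with a forward Euler step. The objective f(x) = |x₁ − 2| + |x₂ + 1|, the box Ω = [−1, 1]², the step size, and all function names are illustrative assumptions that do not appear in the abstract.

```python
def project_box(x, lo, hi):
    """Euclidean projection of x onto the box [lo, hi]^n (assumed feasible set)."""
    return [min(max(xi, lo), hi) for xi in x]


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def subgradient(x):
    """One element of the generalized gradient of the illustrative objective
    f(x) = |x1 - 2| + |x2 + 1|; the value 0 is selected at each kink."""
    return [sign(x[0] - 2.0), sign(x[1] + 1.0)]


def simulate(x0, lo=-1.0, hi=1.0, step=0.05, n_steps=2000):
    """Forward Euler discretization of the assumed dynamic
    x' = P(x - g) - x, where g is a subgradient of f at x."""
    x = list(x0)
    for _ in range(n_steps):
        g = subgradient(x)
        target = project_box([xi - gi for xi, gi in zip(x, g)], lo, hi)
        x = [xi + step * (ti - xi) for xi, ti in zip(x, target)]
    return x


# Start outside the feasible box, as the abstract allows.
x_final = simulate([3.0, 3.0])
print(x_final)
```

For this toy problem the unique constrained minimizer is (1, −1), and the discretized trajectory started at the infeasible point (3, 3) approaches it. A discrete-time sketch like this only suggests the behavior of the continuous-time differential inclusion; it does not reproduce the paper's convergence guarantees.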

Cite this article

Li, G., Song, S. & Wu, C. Generalized gradient projection neural networks for nonsmooth optimization problems. Sci. China Inf. Sci. 53, 990–1005 (2010). https://doi.org/10.1007/s11432-010-0110-0

DOI: https://doi.org/10.1007/s11432-010-0110-0