Abstract

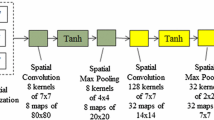

This paper proposes a general conversion theory that reveals the relations between convolutional neural networks (CNNs) and spiking convolutional neural networks (spiking CNNs), from structure to information processing. Based on this conversion theory and the statistical features of the activation distribution in CNNs, we establish a deterministic conversion rule that converts CNNs into spiking CNNs with a definite conversion procedure and optimal settings for all parameters. As part of the conversion rule, we propose a novel “n-scaling” weight mapping method that enables high-accuracy, low-latency and power-efficient object classification on hardware. For the first time, the minimum dynamic range of the spiking neuron’s membrane potential is studied, which helps balance the trade-off between the representation range and the precision of the data type adopted by dedicated hardware when spiking CNNs run on it. Simulation results demonstrate that the converted spiking CNNs perform well on the MNIST, SVHN and CIFAR-10 datasets, with an accuracy loss of no more than 0.4% across the three datasets. Processing time is shortened by up to 39%, and the lower latency achieved by our conversion rule also reduces power consumption. Furthermore, noise robustness experiments indicate that a spiking CNN inherits the robustness of its corresponding CNN.
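The abstract does not spell out the “n-scaling” rule, so the sketch below is only a generic illustration of the rate-based principle that CNN-to-spiking-CNN conversion relies on, not the authors' method. It assumes a single fully connected ReLU layer, data-based weight normalization by a percentile of recorded activations (a widely used technique in the style of Diehl et al. and Rueckauer et al., swapped in here), and integrate-and-fire neurons with reset by subtraction driven by a constant input current. It also records the smallest and largest membrane potential reached, which is the quantity whose dynamic range constrains the hardware data type. All function names (`normalize_by_percentile`, `simulate_if`, `signed_int_bits`) and parameters are hypothetical.

```python
import numpy as np


def normalize_by_percentile(w, b, activations, p=99.9):
    """Generic data-based normalization (NOT the paper's "n-scaling" method):
    divide weights and bias by a high percentile of the layer's recorded ReLU
    activations so most normalized activations fall in [0, 1]."""
    lam = np.percentile(activations, p)
    return w / lam, b / lam, lam


def simulate_if(w, b, x, T=200, v_th=1.0):
    """Integrate-and-fire neurons with reset by subtraction, driven by the
    constant input current w @ x + b at every time step. Returns firing rates
    and the smallest/largest membrane potential observed during the run."""
    current = w @ x + b
    v = np.zeros_like(current)
    spikes = np.zeros_like(current)
    v_min, v_max = 0.0, 0.0
    for _ in range(T):
        v += current                           # integrate the input current
        v_max = max(v_max, float(v.max()))
        fired = v >= v_th                      # threshold crossing
        spikes += fired
        v[fired] -= v_th                       # reset by subtraction keeps the residue
        v_min = min(v_min, float(v.min()))
    return spikes / T, v_min, v_max


def signed_int_bits(v_min, v_max):
    """Integer bits (sign included) a two's-complement fixed-point format
    needs to hold every membrane potential in [v_min, v_max] without overflow."""
    r = max(abs(v_min), abs(v_max))
    return int(np.ceil(np.log2(r + 1.0))) + 1


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w, b = rng.normal(size=(8, 16)), rng.normal(size=8)
    X = rng.random((100, 16))                  # stand-in calibration inputs
    acts = np.maximum(0.0, X @ w.T + b)        # ReLU activations of the CNN layer
    w_n, b_n, lam = normalize_by_percentile(w, b, acts, p=100)
    rates, v_min, v_max = simulate_if(w_n, b_n, X[0])
    print("ReLU / lambda:", np.round(acts[0] / lam, 3))
    print("IF rates:     ", np.round(rates, 3))
    print("membrane range:", (v_min, v_max), "->",
          signed_int_bits(v_min, v_max), "integer bits")
```

With reset by subtraction and normalized currents no larger than the threshold, each firing rate stays within 1/T of the normalized ReLU activation, which is why longer simulation windows trade latency for accuracy. Neurons with negative input keep integrating downward, so the lower end of the membrane-potential range grows with T; this is the kind of behaviour that makes the required dynamic range a design parameter for fixed-point hardware.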

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61704167, 61434004), the Beijing Municipal Science and Technology Project (Grant No. Z181100008918009), the Youth Innovation Promotion Association Program, Chinese Academy of Sciences (Grant No. 2016107), and the Strategic Priority Research Program of the Chinese Academy of Sciences (Grant No. XDB32050200).

Cite this article

Yang, X., Zhang, Z., Zhu, W. et al. Deterministic conversion rule for CNNs to efficient spiking convolutional neural networks. Sci. China Inf. Sci. 63, 122402 (2020). https://doi.org/10.1007/s11432-019-1468-0

DOI: https://doi.org/10.1007/s11432-019-1468-0