Abstract

Breast cancer is a common life-threatening disease among women. Computer-aided methods can provide a second opinion or decision support for early diagnosis from mammography images. However, whole-image classification is highly challenging because lesions are small and the contrast between lesions and fibro-glandular tissue is low. In this paper, inspired by conventional machine learning methods, we present a Multi Frequency Attention Network (MFA-Net) to highlight the salient features. The network decomposes the features into low and high spatial frequency components, and then recalibrates the discriminative features in each frequency part separately, based on the two-dimensional Discrete Cosine Transform. Low spatial frequency features help determine whether a tumor is present, while high spatial frequency features help focus on the margin of the tumor. Our studies empirically show that, compared with a traditional convolutional neural network (CNN), the proposed method mitigates the influence of the margins of the pectoral muscle and breast in mammography, which brings a significant improvement. For malignant versus benign classification, using transfer learning, the proposed MFA-Net achieves an AUC of 91.71% on the INbreast dataset.
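The two-branch idea described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the frequency split (2×2 average pooling plus residual), the squeeze-and-excitation style gate, the chosen DCT frequencies, and all function names (`split_frequencies`, `dct_descriptor`, `channel_gate`, `mfa_block`) are assumptions made for illustration only.

```python
import numpy as np

def dct2_basis(h, w, u, v):
    """2D DCT-II basis function of frequency (u, v) on an h x w grid."""
    ys = np.cos(np.pi * (np.arange(h) + 0.5) * u / h)
    xs = np.cos(np.pi * (np.arange(w) + 0.5) * v / w)
    return np.outer(ys, xs)  # shape (h, w)

def split_frequencies(x):
    """Assumed split of features (C, H, W), with H and W even.
    Low part: 2x2 average pooling, upsampled back; high part: the residual."""
    c, h, w = x.shape
    pooled = x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    low = pooled.repeat(2, axis=1).repeat(2, axis=2)
    return low, x - low

def dct_descriptor(x, freqs):
    """One scalar per channel: projection onto the summed DCT bases."""
    _, h, w = x.shape
    basis = sum(dct2_basis(h, w, u, v) for u, v in freqs)
    return (x * basis).sum(axis=(1, 2))  # shape (C,)

def channel_gate(desc, w1, w2):
    """Two-layer bottleneck (ReLU, then sigmoid) producing per-channel weights."""
    hidden = np.maximum(desc @ w1, 0.0)
    return 1.0 / (1.0 + np.exp(-(hidden @ w2)))

def mfa_block(x, low_params, high_params, low_freqs, high_freqs):
    """Recalibrate the low and high parts separately, then recombine."""
    low, high = split_frequencies(x)
    g_low = channel_gate(dct_descriptor(low, low_freqs), *low_params)
    g_high = channel_gate(dct_descriptor(high, high_freqs), *high_params)
    return low * g_low[:, None, None] + high * g_high[:, None, None]

# Toy usage with random features and random (untrained) gate weights.
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))

def make_params(channels=8, hidden=2):
    return (0.1 * rng.standard_normal((channels, hidden)),
            0.1 * rng.standard_normal((hidden, channels)))

y = mfa_block(x, make_params(), make_params(),
              low_freqs=[(0, 0), (0, 1)], high_freqs=[(1, 1), (2, 2)])
```

With `freqs=[(0, 0)]` the DCT basis is constant, so the descriptor reduces to a spatial sum, which is global average pooling up to a scale factor. Adding further frequencies lets the gate respond to spatial structure that plain averaging discards.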

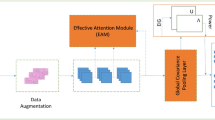

Graphical abstract

Change history

24 May 2022

A Correction to this paper has been published: https://doi.org/10.1007/s11517-022-02597-x

Funding

This study was funded by the National Natural Science Foundation of China (No. 61961037) and the Natural Science Foundation of Gansu Province (No. 18JR3RA288).

Ethics declarations

Ethics approval

The authors used publicly available data for their study and did not collect data from any human participants or animals.

Conflict of interest

None.

Additional information

The original online version of this article was revised: the Conflict of interest statement has been changed to None.

Cite this article

Wang, Y., Qi, Y., Xu, C. et al. Learning multi-frequency features in convolutional network for mammography classification. Med Biol Eng Comput 60, 2051–2062 (2022). https://doi.org/10.1007/s11517-022-02582-4

DOI: https://doi.org/10.1007/s11517-022-02582-4