Abstract

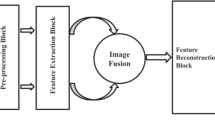

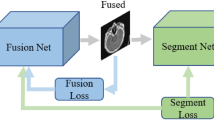

Medical image fusion aims to integrate complementary information from multimodal medical images and has been widely applied in medicine, for example in clinical diagnosis, pathology analysis, and health examinations. Feature extraction is a crucial step in the fusion task. To capture the significant information embedded in medical images, many deep learning-based algorithms have recently been proposed and have achieved good fusion results. However, most of them struggle to capture independent and underlying features, which leads to unsatisfactory fusion results. To address this issue, a multibranch residual attention reconstruction network (MBRARN) is proposed for medical image fusion. The proposed network consists of three main parts: feature extraction, feature fusion, and feature reconstruction. First, the input medical images are converted into three scales by an image pyramid operation, and the three scales are fed into the three branches of the network, respectively. This procedure captures both local detail information and global structural information. Then, convolutions with residual attention modules are designed, which not only enhance the captured salient features but also help the network converge quickly and stably. Finally, feature fusion is performed with the designed fusion strategy. In this step, a new and more effective fusion strategy based on the Euclidean norm, called feature distance ratio (FDR), is designed specifically for MRI-SPECT fusion. Experimental results on the Harvard Whole Brain Atlas dataset demonstrate that the proposed network achieves better results in both subjective and objective evaluations than several state-of-the-art medical image fusion algorithms.
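The abstract names three operations: a three-scale image pyramid, residual attention, and a Euclidean-norm-based fusion rule. The NumPy sketch below illustrates each one under stated assumptions; it is not the MBRARN implementation. The pyramid operator is assumed to be 2×2 average pooling, since the abstract does not say which pyramid is used. `residual_attention` follows the attention residual learning form (1 + M(x)) · T(x) from the Residual Attention Network of Wang et al. (2017), with the trunk output and mask logits passed in as arrays rather than computed by convolutions. `l2_weighted_fusion` is a generic activity-level rule that weights each source by its per-pixel L2 norm across channels; it is a hypothetical stand-in and not the paper's FDR, whose exact definition the abstract does not give. All function names are hypothetical.

```python
import numpy as np


def three_scale_pyramid(img: np.ndarray) -> list:
    """Return full-, half- and quarter-resolution copies of a 2-D image.

    Assumption: each level is produced by 2x2 average pooling; an odd last
    row or column is cropped before pooling.
    """
    levels = [img.astype(np.float64)]
    for _ in range(2):
        prev = levels[-1]
        h, w = (prev.shape[0] // 2) * 2, (prev.shape[1] // 2) * 2
        blocks = prev[:h, :w].reshape(h // 2, 2, w // 2, 2)
        levels.append(blocks.mean(axis=(1, 3)))  # average each 2x2 block
    return levels


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def residual_attention(trunk: np.ndarray, mask_logits: np.ndarray) -> np.ndarray:
    """Attention residual learning: (1 + M) * T, with soft mask M = sigmoid(logits) in (0, 1).

    In a real network both inputs come from convolutional branches; here they
    are plain arrays of the same shape (C, H, W).
    """
    return (1.0 + sigmoid(mask_logits)) * trunk


def l2_weighted_fusion(feat_a: np.ndarray, feat_b: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Hypothetical fusion rule (NOT the paper's FDR).

    Each source is weighted per pixel by its Euclidean norm across channels,
    so the source with stronger activity at (y, x) dominates there.
    """
    norm_a = np.linalg.norm(feat_a, axis=0)  # shape (H, W)
    norm_b = np.linalg.norm(feat_b, axis=0)
    w_a = (norm_a + eps) / (norm_a + norm_b + 2 * eps)  # 0.5 where both norms are 0
    return w_a * feat_a + (1.0 - w_a) * feat_b  # (H, W) weights broadcast over C


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mri = rng.random((256, 256))
    print([p.shape for p in three_scale_pyramid(mri)])
    # [(256, 256), (128, 128), (64, 64)]
```

Averaging each 2×2 block halves the resolution per level, so a 256×256 slice yields 256×256, 128×128 and 64×64 inputs for the three branches. The `1 + M` term means a mask near zero leaves the trunk features unchanged instead of suppressing them; Wang et al. motivate this as avoiding the feature degradation caused by repeatedly multiplying features by masks in [0, 1].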

Graphical Abstract

Data availability

All data included in this study are available from the corresponding author upon request.

Acknowledgements

The authors would like to thank the anonymous reviewers for their critical and constructive comments and suggestions.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, W., Lu, Y., Zheng, H. et al. MBRARN: multibranch residual attention reconstruction network for medical image fusion. Med Biol Eng Comput 61, 3067–3085 (2023). https://doi.org/10.1007/s11517-023-02902-2