Abstract

Purpose

We aim to develop quantitative performance metrics and a deep learning model to objectively compare the surgical skills of novice and expert surgeons in arthroscopic rotator cuff surgery. The proposed metrics can give surgeons an objective, quantitative self-assessment platform.

Methods

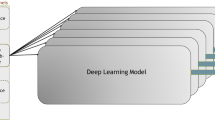

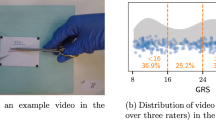

Two novice surgeons performed ten shoulder arthroscopic rotator cuff surgeries, and two expert surgeons performed fourteen. These surgeries were statistically analyzed. Two existing evaluation systems, the Basic Arthroscopic Knee Skill Scoring System (BAKSSS) and the Arthroscopic Surgical Skill Evaluation Tool (ASSET), were used to validate our proposed metrics. In addition, a deep learning-based model called the Automated Arthroscopic Video Evaluation Tool (AAVET) was developed toward automating quantitative assessments.

Results

The results revealed that novice surgeons used surgical tools approximately 10% less effectively and were slower to identify and stop bleeding. The performance scores of experts and novices differed notably, and our metrics successfully identified these differences at the task level. Moreover, the per-class F1-scores were 78%, 87%, and 77% for classifying cases with no tool, electrocautery, and shaver tool, respectively.
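For readers unfamiliar with the per-class F1-score reported above, the following is a minimal sketch of how such scores can be computed from a three-class confusion matrix. The function name `per_class_f1` and the counts in `toy_confusion` are hypothetical and chosen only for illustration; they are not the study's data, and this is not necessarily how the authors computed their scores.

```python
from typing import Dict, List

# Class names taken from the abstract; their order here is an assumption.
CLASSES = ["no-tool", "electrocautery", "shaver"]


def per_class_f1(confusion: List[List[int]], labels: List[str]) -> Dict[str, float]:
    """Return the F1-score of each class.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(labels)
    scores: Dict[str, float] = {}
    for k, name in enumerate(labels):
        tp = confusion[k][k]                               # true positives
        fp = sum(confusion[i][k] for i in range(n)) - tp   # predicted k, truly another class
        fn = sum(confusion[k]) - tp                        # truly k, predicted another class
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0.0 when the class never occurs.
        scores[name] = 2 * tp / denom if denom else 0.0
    return scores


# Hypothetical counts for illustration only (NOT results from the study).
toy_confusion = [
    [8, 1, 1],  # true no-tool
    [1, 9, 0],  # true electrocautery
    [2, 0, 8],  # true shaver
]

f1 = per_class_f1(toy_confusion, CLASSES)
for name, score in f1.items():
    print(f"{name}: {score:.2f}")
```

Because F1 is the harmonic mean of precision and recall, a class scores well only when the classifier both finds most of its frames and rarely mislabels other frames as that class.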

Conclusion

We have constructed quantitative metrics that identify differences between the performances of expert and novice surgeons. Our ultimate goal is to validate these metrics further and incorporate them into our virtual rotator cuff surgery simulator (ViRCAST), which is under development. The initial results from AAVET show that the toolbox can be extended into a fully automated performance evaluation platform.

Acknowledgements

The authors would like to thank Seth Cooper-Baer and Mustafa Tunc for their contributions to this publication. This publication was made possible by the Grant NIH/NIAMS R44AR075481-01. This project was also supported by the Arkansas INBRE program (NIGMS, P20 GM103429), NIH/NCI 5R01CA197491, and NIH/NHLBI NIH/NIBIB 1R01EB025241, R56EB026490.

Funding

This project was made possible by the Arkansas INBRE program, supported by a grant from the National Institute of General Medical Sciences (NIGMS), P20 GM103429 from the National Institutes of Health (NIH). This project was also supported by NIH/NIAMS R44AR075481-01, NIH/NCI 5R01CA197491, and NIH/NHLBI NIH/NIBIB 1R01EB025241, R56EB026490.

Ethics declarations

Competing interests

The authors declare that they have no competing interests in regard to this study.

About this article

Cite this article

Demirel, D., Palmer, B., Sundberg, G. et al. Scoring metrics for assessing skills in arthroscopic rotator cuff repair: performance comparison study of novice and expert surgeons. Int J CARS 17, 1823–1835 (2022). https://doi.org/10.1007/s11548-022-02683-3

DOI: https://doi.org/10.1007/s11548-022-02683-3