Abstract

Purpose

An inevitable feature of ultrasound-based diagnosis is that the quality of the images produced depends directly on the skill of the physician operating the probe. Physicians must continually adjust the probe position to obtain a cross section of the target organ, which shifts continuously with the patient's respiratory motion. We therefore developed an ultrasound diagnostic robot that works in cooperation with a deep-learning-based visual servo system to help alleviate the burden on physicians.

Methods

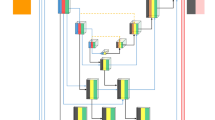

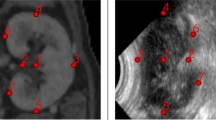

Our newly developed robotic ultrasound diagnostic system consists of three robots: an organ tracking robot (OTR), a robotic bed, and a robotic supporting arm. We used two image processing methods (YOLOv5s and BiSeNet V2) to detect the location of the target kidney, and ResNet 50 to evaluate the appropriateness of the obtained ultrasound images. The image processing results are then transmitted to the OTR for use as motion commands.
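To make the detection-to-command flow concrete, the following is a minimal sketch of how a per-frame kidney position could be smoothed with a Kalman filter and turned into a motion command. It is not the authors' implementation: the constant-velocity model, the noise values `q` and `r`, the proportional `gain`, and the names `Kalman1D` and `motion_command` are all assumptions made for illustration. One filter instance handles one image axis, and a missed detection is passed as `None`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter for one image axis (assumed model)."""
    x: float = 0.0      # estimated position (pixels)
    v: float = 0.0      # estimated velocity (pixels per frame)
    p00: float = 1e3    # covariance entries; large values = uncertain start
    p01: float = 0.0
    p11: float = 1e3
    q: float = 1e-2     # process noise added per step (assumed value)
    r: float = 4.0      # measurement noise variance (assumed value)

    def step(self, z: Optional[float], dt: float = 1.0) -> float:
        # Predict: x <- x + v*dt and P <- F P F^T + Q with F = [[1, dt], [0, 1]]
        self.x += self.v * dt
        p00 = self.p00 + dt * (2.0 * self.p01 + dt * self.p11) + self.q
        p01 = self.p01 + dt * self.p11
        p11 = self.p11 + self.q
        if z is not None:
            # Update with a position measurement (H = [1, 0])
            s = p00 + self.r
            k0, k1 = p00 / s, p01 / s
            innovation = z - self.x
            self.x += k0 * innovation
            self.v += k1 * innovation
            p00, p01, p11 = (1.0 - k0) * p00, (1.0 - k0) * p01, p11 - k1 * p01
        self.p00, self.p01, self.p11 = p00, p01, p11
        return self.x


def motion_command(center_px: float, target_px: float, gain: float = 0.1) -> float:
    """Hypothetical proportional command driving the detected center to the target."""
    return gain * (center_px - target_px)


if __name__ == "__main__":
    kf = Kalman1D()
    detections = [100.0, 102.0, None, 105.0, 107.0]  # None = detector missed the kidney
    for z in detections:
        estimate = kf.step(z)
        print(round(estimate, 2), round(motion_command(estimate, target_px=128.0), 2))
```

When a frame has no detection, the filter only predicts, so the command stream stays continuous instead of dropping out. That is one plausible reason filtering could raise a tracking rate, though the abstract does not state the mechanism.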

Results

In our experiments, YOLOv5s with Kalman filtering achieved the highest effective tracking rate (0.749), an improvement of about 37% over the case without such filtering. The appropriateness probability of the ultrasound images obtained during tracking was also the highest and most stable for this combination. The second-highest tracking efficiency value (0.694) was obtained by BiSeNet V2 with Kalman filtering, a 75% improvement over the case without such filtering.
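The abstract does not report the unfiltered rates. As a rough sanity check, the sketch below back-calculates them under the assumption that each percentage is a relative improvement over the unfiltered rate. The helper name `baseline_from_relative_gain` is hypothetical, and the resulting figures are implied estimates, not values reported by the authors.

```python
def baseline_from_relative_gain(improved: float, relative_gain: float) -> float:
    """Return the baseline b such that improved = b * (1 + relative_gain)."""
    return improved / (1.0 + relative_gain)


# Assumption: "37%" and "75%" are relative gains over the unfiltered rate.
yolo_baseline = baseline_from_relative_gain(0.749, 0.37)     # roughly 0.55
bisenet_baseline = baseline_from_relative_gain(0.694, 0.75)  # roughly 0.40

print(f"Implied YOLOv5s rate without filtering:    {yolo_baseline:.3f}")
print(f"Implied BiSeNet V2 rate without filtering: {bisenet_baseline:.3f}")
```

Under this reading, filtering helps BiSeNet V2 more in relative terms because it starts from a lower unfiltered rate, while YOLOv5s remains ahead in absolute terms.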

Conclusion

While the most efficient tracking was achieved by the combination of YOLOv5s and Kalman filtering, the combination of BiSeNet V2 and Kalman filtering detected a kidney center of gravity that more closely followed the kidney's actual motion. Furthermore, this model can also measure the cross-sectional area, maximum diameter, and other detailed information of the target kidney, which makes it more practical for use in actual diagnoses.
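The measurements mentioned above follow naturally from a segmentation mask. The sketch below shows one way to derive a center of gravity, cross-sectional area, and maximum diameter from a binary mask. The function name `mask_metrics`, the `pixel_mm` scale, and the brute-force diameter search are illustrative assumptions, not the authors' method.

```python
from itertools import combinations
from math import hypot
from typing import Dict, Optional, Sequence


def mask_metrics(mask: Sequence[Sequence[int]], pixel_mm: float = 1.0) -> Optional[Dict]:
    """Compute centroid, area, and maximum diameter of the nonzero region of a mask.

    mask     : 2D grid of 0/1 values, e.g. a thresholded segmentation output
    pixel_mm : assumed physical size of one pixel edge, in millimetres
    """
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None  # nothing segmented in this frame
    n = len(points)
    # Center of gravity as the mean (row, col) of the foreground pixels
    centroid = (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)
    # Area as the pixel count scaled by the area of one pixel
    area_mm2 = n * pixel_mm ** 2
    # Maximum diameter as the largest distance between two foreground pixel
    # centers; O(n^2), which is fine for a sketch but slow for large masks
    max_px = max(
        (hypot(r1 - r2, c1 - c2) for (r1, c1), (r2, c2) in combinations(points, 2)),
        default=0.0,
    )
    return {"centroid": centroid, "area_mm2": area_mm2, "max_diameter_mm": max_px * pixel_mm}


if __name__ == "__main__":
    demo = [
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0],
    ]
    print(mask_metrics(demo, pixel_mm=0.5))
```

A bounding-box detector yields only a box center and extent, whereas a per-pixel mask supports shape measurements like these. This is consistent with the abstract's point that the segmentation-based model provides more detailed information.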

Acknowledgements

The authors gratefully acknowledge financial support from the Japan Society for the Promotion of Science (JSPS) KAKENHI, Grant Number JP20H02113.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Human and animal rights

The authors declare that none of the experiments performed in this study involved human participants or animals.

Informed consent

This article does not contain patient data.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, J., Koizumi, N., Nishiyama, Y. et al. A VS ultrasound diagnostic system with kidney image evaluation functions. Int J CARS 18, 227–246 (2023). https://doi.org/10.1007/s11548-022-02759-0

DOI: https://doi.org/10.1007/s11548-022-02759-0