Abstract

Purpose

Automatic surgical instrument segmentation is a crucial step in robot-assisted surgery. Encoder–decoder methods often fuse high-level and low-level features directly through skip connections to supplement detailed information. However, fusing irrelevant information also increases misclassification and segmentation errors, especially in complex surgical scenes. Uneven illumination often makes instruments look similar to background tissue, which greatly increases the difficulty of automatic surgical instrument segmentation. This paper proposes a novel network to address these problems.

Methods

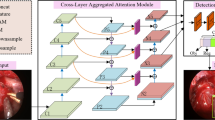

This paper proposes guiding the network to select effective features for instrument segmentation. The network is named the context-guided bidirectional attention network (CGBA-Net). A guidance connection attention (GCA) module is inserted into the network to adaptively filter out irrelevant low-level features. Moreover, we propose a bidirectional attention (BA) module for the GCA module that captures both local information and local–global dependency in surgical scenes to provide accurate instrument features.
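To make the idea of filtering low-level features before fusion concrete, the following is a minimal sketch of a context-gated skip connection. It is not the authors' GCA or BA module, whose internals are not described in this abstract. The function names are hypothetical, the gate is a parameter-free sigmoid of globally pooled high-level features (a real module would use learned weights), and both feature maps are assumed to share the same shape.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def global_average_pool(features):
    """Return one scalar per channel: the mean over all spatial positions."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in features
    ]


def guided_skip_fusion(low_level, high_level):
    """Gate low-level channels with context from high-level features, then add.

    Hypothetical illustration only. Each input is a list of channels and
    each channel is a list of rows; the two inputs are assumed to have
    identical shapes.
    """
    # One context value per channel, squashed into a (0, 1) gate.
    gates = [sigmoid(c) for c in global_average_pool(high_level)]
    fused = []
    for gate, low_ch, high_ch in zip(gates, low_level, high_level):
        # Scale the low-level channel by its gate before the additive skip.
        fused.append([
            [gate * low + high for low, high in zip(low_row, high_row)]
            for low_row, high_row in zip(low_ch, high_ch)
        ])
    return gates, fused
```

A plain skip connection corresponds to every gate being fixed at 1. Here a channel whose high-level context is strongly negative receives a gate near 0, so its low-level detail contributes almost nothing to the fused output.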

Results

The superiority of CGBA-Net is verified on multiple-instrument segmentation using two publicly available datasets from different surgical scenarios: an endoscopic vision dataset (EndoVis 2018) and a cataract surgery dataset. Extensive experimental results demonstrate that CGBA-Net outperforms state-of-the-art methods on both datasets. An ablation study on the same datasets confirms the effectiveness of the proposed modules.

Conclusion

The proposed CGBA-Net increases the accuracy of multiple-instrument segmentation, classifying and segmenting instruments accurately. The proposed modules effectively provide instrument-related features to the network.

Acknowledgements

The authors thank Dr. Li Xi, Chief Physician, Department of Gastroenterology, Peking University Shenzhen Hospital, for supporting this work.

Funding

This work was supported in part by the General Program of the National Natural Science Foundation of China (Grant Nos. 82102189 and 82272086), the Guangdong Basic and Applied Basic Research Foundation (Grant Nos. 2021A1515012195 and 2020A1515110286), and the Shenzhen Stable Support Plan Program (Grant No. 20220815111736001).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

This article uses publicly available datasets.

About this article

Cite this article

Wang, Y., Hu, Y., Shen, J. et al. CGBA-Net: context-guided bidirectional attention network for surgical instrument segmentation. Int J CARS 18, 1769–1781 (2023). https://doi.org/10.1007/s11548-023-02906-1

DOI: https://doi.org/10.1007/s11548-023-02906-1