Abstract

Purpose

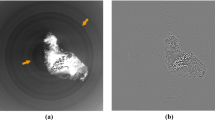

Existing field generators (FGs) for magnetic tracking cause severe artifacts in X-ray images. Although FGs built with radiolucent components significantly reduce these artifacts, traces of coils and electronic components may still be visible to trained professionals. For X-ray-guided interventions that use magnetic tracking, we introduce a learning-based approach that further reduces traces of FG components in X-ray images to improve visualization and image guidance.

Methods

An adversarial decomposition network was trained to separate the residual FG components, including fiducial points introduced for pose estimation, from the X-ray images. The main novelty of our approach lies in the proposed data synthesis method. It combines existing 2D patient chest X-ray images with FG X-ray images to generate 20,000 synthetic images, together with ground-truth images without the FG, so that the network can be trained effectively.
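
The abstract does not say exactly how the chest and FG images are combined. One physically motivated option is to treat both images as linear transmission maps and multiply them, because under the Beer-Lambert law the attenuation line integrals of overlapping objects add. This is offered only as an assumption. The sketch below pairs each composite with the untouched chest image as its ground truth. The names `composite`, `line_integral`, and `make_training_pair` are hypothetical. Clinical radiographs are usually display-processed, so in practice a linearization step would likely be needed before compositing.

```python
import math
import random

# Hypothetical type alias: a grayscale image as rows of transmission values in (0, 1].
Image = list[list[float]]


def composite(patient: Image, fg: Image, top: int, left: int) -> Image:
    """Overlay an FG transmission map onto a patient image at (top, left).

    Assumption (not from the paper): Beer-Lambert attenuation, so the
    transmissions of stacked objects multiply. Pixels outside the FG
    footprint are left unchanged, as if the FG transmission there were 1.0.
    """
    out = [row[:] for row in patient]  # copy so the ground truth stays untouched
    for i, fg_row in enumerate(fg):
        for j, t_fg in enumerate(fg_row):
            y, x = top + i, left + j
            if 0 <= y < len(out) and 0 <= x < len(out[0]):
                out[y][x] *= t_fg
    return out


def line_integral(img: Image) -> Image:
    """Convert transmission T to an attenuation line integral p = -ln(T)."""
    return [[-math.log(v) for v in row] for row in img]


def make_training_pair(
    patient: Image, fg: Image, rng: random.Random
) -> tuple[Image, Image]:
    """Return (synthetic input with FG, ground truth without FG).

    The FG is placed at a random offset that keeps it fully inside the image.
    """
    top = rng.randrange(len(patient) - len(fg) + 1)
    left = rng.randrange(len(patient[0]) - len(fg[0]) + 1)
    return composite(patient, fg, top, left), patient
```

Because -ln(T_patient * T_fg) = -ln(T_patient) - ln(T_fg), multiplying in the transmission domain is equivalent to adding the FG attenuation in the line-integral domain. That additivity is what makes the uncorrupted chest image a natural ground truth for the decomposition.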

Results

For 30 real images of a torso phantom, the X-ray images enhanced by our decomposition method achieved an average local PSNR of 35.04 dB and an average local SSIM of 0.97. By comparison, the unenhanced X-ray images averaged a local PSNR of 31.16 dB and a local SSIM of 0.96.
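
The abstract does not define the "local" variants of PSNR and SSIM. The following minimal sketch makes three assumptions. Images are scaled to [0, 1]. "Local" means a rectangular region of interest (ROI) around the FG traces, given as (top, left, height, width). For brevity, SSIM is computed from a single window covering the whole ROI rather than the sliding Gaussian window of Wang et al. All function names and the ROI convention are hypothetical.

```python
import math


def crop(img, top, left, height, width):
    """Extract a rectangular ROI from a list-of-rows image."""
    return [row[left:left + width] for row in img[top:top + height]]


def psnr(ref, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diffs = [(r - t) ** 2 for rr, tr in zip(ref, test) for r, t in zip(rr, tr)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(data_range ** 2 / mse)


def ssim_single_window(ref, test, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole input (no sliding window).

    k1 and k2 are the usual stabilizing constants from the SSIM paper.
    """
    x = [v for row in ref for v in row]
    y = [v for row in test for v in row]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    )


def local_metrics(ref, test, roi, data_range=1.0):
    """Return (PSNR, SSIM) restricted to roi = (top, left, height, width)."""
    r, t = crop(ref, *roi), crop(test, *roi)
    return psnr(r, t, data_range), ssim_single_window(r, t, data_range)
```

For a single image, PSNR = 10 log10(MAX^2 / MSE). The reported gain of 3.88 dB (35.04 vs 31.16) therefore corresponds to an MSE roughly 10^0.388 ≈ 2.4 times lower. Because the reported figures are averages over 30 images, this ratio is only indicative.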

Conclusion

In this study, we proposed an X-ray image decomposition method, based on a generative adversarial network, that enhances X-ray images for magnetic navigation by removing FG-induced artifacts. Experiments on both synthetic and real phantom data demonstrated the efficacy of our method.

Acknowledgements

We acknowledge the generous hardware support provided by NVIDIA Inc.

Funding

This work was supported by INOVAIT (2021-1102 Western University).

Ethics declarations

Conflict of interest

Uditha Jarayathne and Utsav Pardasani are employed by Northern Digital Inc. Wenyao Xia, Shuwei Xing, Elvis Chen, and Terry Peters declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

This article does not contain patient data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xia, W., Xing, S., Jarayathne, U. et al. X-ray image decomposition for improved magnetic navigation. Int J CARS 18, 1225–1233 (2023). https://doi.org/10.1007/s11548-023-02958-3

DOI: https://doi.org/10.1007/s11548-023-02958-3