Abstract

Purpose

Pelvic bone segmentation and landmark definition from computed tomography (CT) images are prerequisite steps for the preoperative planning of total hip arthroplasty. In clinical applications, diseased pelvic anatomy usually degrades the accuracy of bone segmentation and landmark detection, which can lead to improper surgical planning and potential operative complications.

Methods

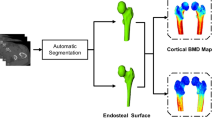

This work proposes a two-stage multi-task algorithm to improve the accuracy of pelvic bone segmentation and landmark detection, especially for diseased cases. The framework follows a coarse-to-fine strategy: it first performs global-scale bone segmentation and landmark detection and then focuses on the important local region (the acetabulum) to further refine accuracy. In the global stage, a dual-task network shares common features between the segmentation and detection tasks so that the two tasks reinforce each other. For local-scale segmentation, an edge-enhanced dual-task network performs bone segmentation and edge detection simultaneously, yielding a more accurate delineation of the acetabulum boundary.
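The coarse-to-fine data flow described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the global landmark head outputs one heatmap per landmark, that the local stage crops a fixed-size box centred on an acetabular landmark, that edge targets for the edge-enhanced network are derived from the ground-truth mask as its 6-connected boundary, and that the dual-task objective is a plain weighted sum of a soft Dice loss and a heatmap mean-squared error. All function names, the crop size and the loss weight are hypothetical.

```python
import numpy as np


def landmark_from_heatmap(heatmap):
    """Voxel index of the heatmap maximum (assumes a heatmap-style landmark head)."""
    idx = np.unravel_index(np.argmax(heatmap), np.shape(heatmap))
    return tuple(int(i) for i in idx)


def crop_around_landmark(volume, center_vox, size):
    """Cut a fixed-size box centred on a coarse landmark, zero-padding at the border.

    Returns the crop and its origin in full-volume coordinates (may be negative),
    so a refined local prediction can later be written back into the volume.
    """
    vol = np.asarray(volume)
    center = np.round(np.asarray(center_vox, dtype=float)).astype(int)
    size = np.asarray(size, dtype=int)
    origin = center - size // 2
    before = np.maximum(0, -origin)                              # padding needed before index 0
    after = np.maximum(0, origin + size - np.array(vol.shape))   # padding needed past the end
    padded = np.pad(vol, [(int(b), int(a)) for b, a in zip(before, after)], mode="constant")
    start = origin + before                                      # origin expressed in padded coords
    crop = padded[tuple(slice(int(s), int(s + n)) for s, n in zip(start, size))]
    return crop, tuple(int(o) for o in origin)


def boundary_from_mask(mask):
    """Edge target: mask voxels with at least one face neighbour outside the mask."""
    m = np.asarray(mask, dtype=bool)
    padded = np.pad(m, 1, mode="constant", constant_values=False)
    centre = tuple(slice(1, -1) for _ in range(m.ndim))
    interior = m.copy()
    for axis in range(m.ndim):
        for shift in (-1, 1):
            # shifted view of the padded mask gives each voxel's neighbour along this axis
            interior &= np.roll(padded, shift, axis=axis)[centre]
    return m & ~interior


def soft_dice_loss(prob, target, eps=1e-6):
    inter = np.sum(prob * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(target) + eps)


def dual_task_loss(seg_prob, seg_gt, heat_pred, heat_gt, heat_weight=1.0):
    """Hypothetical joint objective: summing both head losses trains the shared encoder on both tasks."""
    heat_mse = np.mean((np.asarray(heat_pred) - np.asarray(heat_gt)) ** 2)
    return soft_dice_loss(seg_prob, seg_gt) + heat_weight * heat_mse
```

In this sketch, `landmark_from_heatmap` applied to the coarse heatmap gives the crop centre, `crop_around_landmark` extracts the local CT patch (and the matching ground-truth patch during training), and `boundary_from_mask` turns the cropped mask into an edge target. Returning `origin` lets the refined local segmentation be pasted back into the full volume.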

Results

This method was evaluated via threefold cross-validation on 81 CT images (31 diseased and 50 healthy cases). The first stage achieved Dice similarity coefficients (DSC) of 0.94, 0.97, and 0.97 for the sacrum and the left and right hips, respectively, and an average distance error of 3.24 mm for the bone landmarks. The second stage further improved the DSC of the acetabulum by 5.42%, outperforming the state-of-the-art (SOTA) methods by 0.63%. Our method also accurately segmented the diseased acetabulum boundaries. The entire workflow took about 10 s, half the run time of U-Net.
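The evaluation quantities reported above can be computed as follows. This sketch assumes binary masks on the same voxel grid and landmark coordinates given as voxel indices with known per-axis spacing in mm. The random, unstratified fold assignment is also an assumption, since the abstract does not state how the 31 diseased and 50 healthy cases were distributed across folds. Function names are hypothetical.

```python
import numpy as np


def dice(pred, gt):
    """Dice similarity coefficient between two binary masks on the same grid."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    denom = pred.sum() + gt.sum()
    if denom == 0:  # both masks empty: treat as perfect agreement
        return 1.0
    return float(2.0 * np.logical_and(pred, gt).sum() / denom)


def landmark_errors_mm(pred_vox, gt_vox, spacing_mm):
    """Euclidean error per landmark in mm; coordinates in voxels, spacing per axis in mm."""
    diff_vox = np.asarray(pred_vox, dtype=float) - np.asarray(gt_vox, dtype=float)
    return np.linalg.norm(diff_vox * np.asarray(spacing_mm, dtype=float), axis=-1)


def threefold_splits(n_cases, seed=0):
    """Yield (train, test) index arrays for a random, unstratified 3-fold split."""
    idx = np.random.default_rng(seed).permutation(n_cases)
    folds = np.array_split(idx, 3)
    for k in range(3):
        train = np.concatenate([folds[j] for j in range(3) if j != k])
        yield train, folds[k]
```

Scaling voxel offsets by the spacing before taking the norm matters for CT, where slice thickness often differs from in-plane resolution. Per-case DSC and landmark errors from each test fold would then be averaged over the three folds.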

Conclusion

Using multi-task networks and a coarse-to-fine strategy, this method achieved more accurate bone segmentation and landmark detection than the SOTA methods, especially for diseased hip images. Our work contributes to the accurate and rapid design of acetabular cup prostheses.

Acknowledgements

This work was supported in part by the National Key Research and Development Program (Nos. 2020YFB1711500, 2020YFB1711501 and 2020YFB1711503), the General Program of the National Natural Science Fund of China (Nos. 81971693 and 61971445), the Hainan Province Key Research and Development Plan (ZDYF2021SHFZ244), the Fundamental Research Funds for the Central Universities (No. DUT22YG229), and funding from the Liaoning Key Lab of IC & BME System and the Dalian Engineering Research Center for Artificial Intelligence in Medical Imaging.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed consent

Statement of informed consent is not applicable since the manuscript does not contain any participants’ data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhai, H., Chen, Z., Li, L. et al. Two-stage multi-task deep learning framework for simultaneous pelvic bone segmentation and landmark detection from CT images. Int J CARS 19, 97–108 (2024). https://doi.org/10.1007/s11548-023-02976-1

DOI: https://doi.org/10.1007/s11548-023-02976-1