Abstract

Purpose

With significant advances in computing technology, augmented reality-based navigation for clinical applications is being actively researched. We developed novel object tracking and depth realization technologies to apply augmented reality-based neuronavigation to brain surgery.

Methods

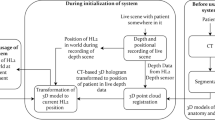

We developed real-time inside-out tracking based on visual inertial odometry and a visual inertial simultaneous localization and mapping algorithm. A cube quick response (QR) marker and depth data obtained from light detection and ranging (LiDAR) sensors are used for continuous tracking. For depth realization, we developed order-independent transparency, clipping, and annotation and measurement functions. In this study, the augmented reality model of a brain tumor patient was applied to the corresponding life-size three-dimensional (3D) printed model.
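As an illustration of what order-independent transparency means, the following minimal sketch composites the semi-transparent fragments that cover a single pixel. It assumes exact per-pixel depth sorting followed by front-to-back "over" blending, which is a simpler stand-in and not the multilayer alpha blending used in the system described here. The function name, the fragment tuples, and the anatomical labels in the comments are hypothetical.

```python
from itertools import permutations


def composite_front_to_back(fragments):
    """Blend (depth, rgb, alpha) fragments for one pixel, nearest first.

    Sorting by depth inside the function makes the result independent of
    the order in which the fragments were submitted.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer layers
    for _, rgb, alpha in sorted(fragments, key=lambda f: f[0]):
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
    return tuple(round(c, 6) for c in color), round(transmittance, 6)


# Hypothetical fragments for one pixel: (depth, rgb, alpha), in arbitrary order.
fragments = [
    (0.30, (1.0, 0.0, 0.0), 0.50),  # deepest layer, e.g. a tumor surface
    (0.10, (0.8, 0.8, 0.8), 0.25),  # nearest layer, e.g. the skull
    (0.20, (0.0, 0.0, 1.0), 0.50),  # middle layer, e.g. a vessel
]

# Every submission order produces the same blended pixel.
results = {composite_front_to_back(list(p)) for p in permutations(fragments)}
print(len(results))
```

Exact sorting of this kind becomes costly when many layers overlap, which is one reason approximate schemes that keep a bounded number of layers per pixel are used in real-time rendering.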

Results

Using real-time inside-out tracking, we confirmed that the augmented reality model remained consistent with the 3D printed patient model without flutter, regardless of the movement of the visualization device. The coordination accuracy during real-time inside-out tracking was also validated: the average movement errors along the X and Y axes were 0.34 ± 0.21 mm and 0.04 ± 0.08 mm, respectively. Further, the application of order-independent transparency with multilayer alpha blending and filtered alpha compositing improved the perception of overlapping internal brain structures. Clipping, and annotation and measurement functions were also developed to aid depth perception and functioned correctly during real-time coordination. We named this system METAMEDIP navigation.
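Per-axis errors of the form mean ± standard deviation can be summarized as in the sketch below. It assumes that the error on each axis is the absolute displacement of the overlay from its reference position and that the spread is the sample standard deviation; the abstract does not specify either choice. The function name and the sample values are hypothetical and are not data from this study.

```python
from statistics import mean, stdev


def movement_error(displacements_mm):
    """Return (mean, sample standard deviation) of absolute displacements in mm."""
    abs_err = [abs(d) for d in displacements_mm]
    return mean(abs_err), stdev(abs_err)


# Hypothetical signed displacements along one axis, in millimetres.
x_displacements = [0.2, -0.4, 0.6]
m, s = movement_error(x_displacements)
print(f"{m:.2f} ± {s:.2f} mm")
```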

Conclusions

The results validate the efficacy of the real-time inside-out tracking and depth realization technology. With these novel technologies developed for continuous tracking and depth perception in augmented reality environments, we are able to overcome the critical obstacles in the development of clinically applicable augmented reality neuronavigation.

Funding

This work was supported by the Korea Medical Device Development Fund grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 202012E08).

Ethics declarations

Conflict of interest

Sang Joon Park is the founder and CEO of MEDICALIP. Chul-Kee Park and Yun-Sik Dho own stock options and investments in MEDICALIP. The other authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Dho, YS., Lee, B.C., Moon, H.C. et al. Validation of real-time inside-out tracking and depth realization technologies for augmented reality-based neuronavigation. Int J CARS 19, 15–25 (2024). https://doi.org/10.1007/s11548-023-02993-0

DOI: https://doi.org/10.1007/s11548-023-02993-0