Abstract

Purpose

This study aimed to classify the phases of laparoscopic surgery for gastric cancer, to develop a transformer-based artificial intelligence (AI) model for automatic surgical phase recognition, and to evaluate the model’s performance on laparoscopic gastric cancer surgical videos.

Methods

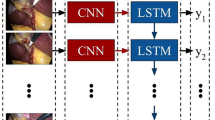

One hundred patients who underwent laparoscopic surgery for gastric cancer were included in this study. All surgical videos were annotated and divided into eight phases: P0, preparation; P1, separation of the greater gastric curvature; P2, separation of the distal stomach; P3, separation of the lesser gastric curvature; P4, dissection of the superior margin of the pancreas; P5, separation of the proximal stomach; P6, digestive tract reconstruction; and P7, end of the operation. This study proposed an AI phase recognition model consisting of a convolutional neural network (CNN)-based visual feature extractor and a temporal relational transformer.
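
The abstract describes the temporal component only as a "temporal relational transformer". As a rough illustration of the underlying idea, the sketch below applies single-head scaled dot-product self-attention along the time axis to per-frame feature vectors, assuming each frame has already been reduced to a vector by a CNN. It is not the authors' architecture: learned query/key/value projections, multiple heads, and the classification head are omitted, and the names `temporal_self_attention`, `causal`, and the shortened `PHASES` labels are hypothetical.

```python
import math
from typing import List

Vector = List[float]

# Hypothetical short labels for the eight phases listed in the abstract.
PHASES = [
    "P0 preparation",
    "P1 separate greater gastric curvature",
    "P2 separate distal stomach",
    "P3 separate lesser gastric curvature",
    "P4 dissect superior margin of pancreas",
    "P5 separate proximal stomach",
    "P6 digestive tract reconstruction",
    "P7 end of operation",
]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(frames: List[Vector], causal: bool = True) -> List[Vector]:
    """Single-head scaled dot-product self-attention over time.

    `frames` holds one feature vector per video frame (e.g. CNN outputs).
    Queries, keys and values are the raw features; a trained transformer
    would apply learned projections first. With causal=True each frame
    attends only to itself and earlier frames, as an online recognizer would.
    """
    if not frames:
        return []
    dim = len(frames[0])
    scale = 1.0 / math.sqrt(dim)
    outputs: List[Vector] = []
    for t, query in enumerate(frames):
        context = frames[: t + 1] if causal else frames
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in context]
        weights = softmax(scores)
        # Each output is a convex combination of the attended frame features.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, context)) for j in range(dim)]
        )
    return outputs
```

For example, `temporal_self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])` returns one context-enriched vector per frame; in a full model, a linear layer over these vectors would score the eight phases for each frame. The causal mask is shown because real-time recognition can only use past frames; whether the authors' model is causal is not stated in the abstract.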

Results

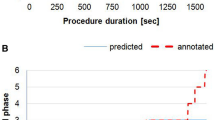

A visual and temporal relational network was proposed to predict surgical phases automatically. The mean duration of the surgical videos was 9114 ± 2571 s, and the longest phase was P1 (3388 s). The model achieved an accuracy of 90.128%, an F1 score of 87.04%, a recall of 87.04%, and a precision of 87.32%. Recognition accuracy was highest for P1 and lowest for P2.
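
The abstract does not state how these metrics were aggregated. A common convention in surgical phase recognition is to compute accuracy over all frames and to average precision, recall, and F1 over phases (macro averaging). The sketch below assumes that convention, per-frame integer labels 0 to 7, and a hypothetical sampling rate `fps`; `frame_metrics` and `phase_durations` are illustrative names, not the authors' code.

```python
from collections import Counter
from typing import Dict, Sequence


def frame_metrics(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> Dict[str, float]:
    """Frame-level accuracy plus macro-averaged precision, recall and F1, in percent.

    Labels are assumed to be integers in range(num_classes). Phases absent
    from the ground truth are skipped when averaging; this is one of several
    conventions and is an assumption here.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        if tp + fn == 0:
            continue  # phase never occurs in the ground truth
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(recalls)
    return {
        "accuracy": 100 * accuracy,
        "precision": 100 * sum(precisions) / n,
        "recall": 100 * sum(recalls) / n,
        "f1": 100 * sum(f1s) / n,
    }


def phase_durations(labels: Sequence[int], fps: float = 1.0) -> Dict[int, float]:
    """Seconds spent in each phase, given per-frame labels sampled at `fps`."""
    return {phase: count / fps for phase, count in Counter(labels).items()}
```

Here the reported F1 is taken as the mean of per-phase F1 scores; an alternative definition combines the macro-averaged precision and recall by a harmonic mean, and the two generally differ. Per-video totals from `phase_durations` could then be summarized as mean ± SD across videos.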

Conclusion

In this study, an AI model combining a convolutional neural network and a transformer network was developed. The model accurately identified the phases of laparoscopic surgery for gastric cancer, suggesting that AI can serve as an analytical tool for gastric cancer surgical videos.

Data availability

Some or all data, models, or code generated or used during the study are available from the corresponding author upon request for non-commercial use.

Acknowledgements

We would like to thank Yunxiao Bi for supporting this study and Editage (www.editage.cn) for English language editing.

Funding

This work was supported by the National Key R&D Program of China (No. 2022ZD0160601), the National Natural Science Foundation of China (Nos. 62276260, 61976210, 62076235, 62176254, 62006230, 62206283, and 82300646), the Beijing Natural Science Foundation (No. 7232334), the Beijing Municipal Science & Technology Commission (No. D17100006517003), the Beijing Municipal Administration of Hospitals Incubating Program (Nos. PX2020001 and PX20240103), and the InnoHK program.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest in this work.

Ethical approval

This study was approved by the Ethics Committee of the Institute of Friendship Hospital, Capital Medical University, and was conducted in accordance with the Good Clinical Practice guidelines and the Declaration of Helsinki.

Informed consent

Accordingly, informed consent was not required for this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhai, Y., Chen, Z., Zheng, Z. et al. Artificial intelligence for automatic surgical phase recognition of laparoscopic gastrectomy in gastric cancer. Int J CARS 19, 345–353 (2024). https://doi.org/10.1007/s11548-023-03027-5
