Abstract

Purpose

We propose a large-factor super-resolution (SR) method for registered medical image datasets. Conventional SR approaches train a deep convolutional neural network (DCN) on pairs of low-resolution (LR) and high-resolution (HR) images. In medical imaging, however, LR and HR images are commonly acquired with different imaging devices, so building LR–HR pairs requires registration, and registered LR–HR images inevitably contain registration errors. Training an SR DCN on such misaligned pairs causes collapsed SR results. To address these challenges, we introduce a novel SR approach designed specifically for registered LR–HR medical images.

Methods

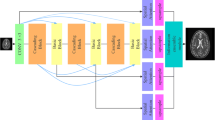

We propose the style-subnets-assisted generative latent bank for large-factor super-resolution (SGSR), which is trained on registered medical image datasets. A pre-trained generative model, called a generative latent bank (GLB), stores rich image priors and can be applied in SR to generate realistic and faithful images. We improve the GLB by introducing the style-subnets-assisted GLB (S-GLB). We also propose a novel inter-uncertainty loss to boost our method’s performance. Providing more spatial information by inputting adjacent slices further improves the results.
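The idea of feeding adjacent slices as extra spatial context can be illustrated with a minimal sketch. The abstract does not state how many neighbouring slices are used or how volume borders are handled, so the function name `stack_adjacent_slices`, the `radius` parameter, the `(depth, height, width)` layout, and the edge-clamping behaviour below are all assumptions made for illustration only.

```python
import numpy as np


def stack_adjacent_slices(volume: np.ndarray, index: int, radius: int = 1) -> np.ndarray:
    """Return slice `index` together with its neighbours as channels.

    `volume` is assumed to have shape (depth, height, width). The result has
    shape (2 * radius + 1, height, width). Indices that fall outside the
    volume are clamped to the nearest valid slice (an assumed border policy).
    """
    depth = volume.shape[0]
    # Neighbour indices around the target slice, clamped to [0, depth - 1].
    neighbours = np.clip(np.arange(index - radius, index + radius + 1), 0, depth - 1)
    # Integer-array indexing along axis 0 gathers the selected slices.
    return volume[neighbours]


# Toy volume: 4 slices of 2 x 2 pixels.
volume = np.arange(4 * 2 * 2, dtype=np.float32).reshape(4, 2, 2)
first = stack_adjacent_slices(volume, 0)   # border case: slice 0 is repeated
middle = stack_adjacent_slices(volume, 2)  # slices 1, 2, 3
```

The stacked array can then be passed to a 2D network as a multi-channel input, which is one common way to give a slice-wise model some through-plane context.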

Results

SGSR outperforms state-of-the-art (SOTA) supervised SR methods qualitatively and quantitatively on multiple datasets. Compared with recent supervised baselines, SGSR achieved higher reconstruction accuracy, increasing the peak signal-to-noise ratio (PSNR) from 32.628 to 34.206 dB.
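To put the reported PSNR figures in perspective, the short sketch below uses the standard definition PSNR = 10·log10(peak² / MSE). The helper names `psnr` and `mse_ratio` are illustrative and not taken from the paper. The conversion from a PSNR gain to a mean-squared-error ratio holds exactly only for a single image at a fixed peak value; for PSNR values averaged over a dataset it is a rough indication at best.

```python
import math


def psnr(mse: float, peak: float = 1.0) -> float:
    """PSNR in dB for a given mean squared error and peak intensity."""
    if mse <= 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def mse_ratio(psnr_before: float, psnr_after: float) -> float:
    """MSE_after / MSE_before implied by a PSNR change at a fixed peak."""
    return 10.0 ** (-(psnr_after - psnr_before) / 10.0)


gain_db = 34.206 - 32.628          # about 1.578 dB
ratio = mse_ratio(32.628, 34.206)  # roughly 0.70
```

Under these assumptions, a gain of about 1.58 dB corresponds to a mean squared error that is roughly 30% lower.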

Conclusion

SGSR performs large-factor SR even when the registered LR–HR medical image dataset used for training contains registration errors. The favorable quantitative and qualitative results indicate that SGSR’s outputs have both realistic textures and accurate anatomical structures. Experiments on multiple datasets demonstrated SGSR’s superiority over other SOTA methods. SR medical images generated by SGSR are expected to improve the accuracy of pre-surgery diagnosis and reduce patient burden.

Acknowledgements

Part of this study was funded by MEXT KAKENHI (26108006, 17H00867, 17K20099), the JSPS Bilateral International Collaboration Grants, and the AMED (JP19lk1010036 and JP20lk1010036).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This study was conducted in accordance with the ethical committee's approval (Nagoya University Ethical Approval No. 2014-0311).

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Zheng, T., Oda, H., Hayashi, Y. et al. SGSR: style-subnets-assisted generative latent bank for large-factor super-resolution with registered medical image dataset. Int J CARS 19, 493–506 (2024). https://doi.org/10.1007/s11548-023-03037-3

DOI: https://doi.org/10.1007/s11548-023-03037-3