Abstract

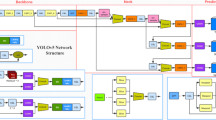

Traffic sign detection (TSD) using convolutional neural networks (CNNs) is a promising technique for autonomous driving. In particular, sophisticated large-scale CNN models can perform TSD with high accuracy. However, conventional CNN models are time-consuming and resource-hungry, which limits their deployment on platforms with limited resources. In this paper, we propose a novel real-time traffic sign detection system with a lightweight backbone network named Depth Separable DetNet (DS-DetNet) and a lite fusion feature pyramid network (LFFPN) for efficient feature fusion. By using a depthwise separable bottleneck block, a lite fusion module, and an improved SSD detection front-end, the new model achieves a trade-off between speed and accuracy. On MS COCO, the model reaches 23.1% mAP with 6.39 M parameters and only 1.08 B FLOPs; on GTSDB, it reaches 81.35% mAP with 5.78 M parameters. The model runs at 61 frames per second (fps) on a GTX 1080 Ti, 12 fps on an NVIDIA Jetson Nano, and 16 fps on an NVIDIA Jetson Xavier NX.
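The parameter and FLOP savings claimed above come largely from replacing standard convolutions with depthwise separable ones (the technique popularized by MobileNets). The sketch below is illustrative only and is not the authors' DS-DetNet block: it counts the cost of a single k × k layer in both forms, assuming stride 1, "same" padding (so the output map is h × w), and no bias or batch-norm terms. The channel count (256) and the 38 × 38 map size in the usage example are hypothetical values chosen for illustration. Cost is reported as multiply-accumulates (MACs); the abstract does not state whether its FLOP figure counts MACs or 2 × MACs.

```python
def standard_conv_cost(c_in: int, c_out: int, k: int, h: int, w: int) -> tuple[int, int]:
    """Parameters and MACs of a standard k x k convolution with an h x w output.

    Assumes stride 1, 'same' padding, and no bias terms.
    """
    params = k * k * c_in * c_out      # one k x k x c_in filter per output channel
    macs = params * h * w              # every weight is applied once per output pixel
    return params, macs


def depthwise_separable_cost(c_in: int, c_out: int, k: int, h: int, w: int) -> tuple[int, int]:
    """Parameters and MACs of a k x k depthwise conv followed by a 1 x 1 pointwise conv.

    Same assumptions as standard_conv_cost (stride 1, 'same' padding, no bias).
    """
    depthwise = k * k * c_in           # one k x k filter per input channel (spatial filtering)
    pointwise = c_in * c_out           # 1 x 1 conv that mixes channels
    params = depthwise + pointwise
    macs = params * h * w
    return params, macs


def reduction_ratio(c_in: int, c_out: int, k: int) -> float:
    """Cost of the separable layer relative to the standard one; equals 1/c_out + 1/k**2."""
    separable, _ = depthwise_separable_cost(c_in, c_out, k, 1, 1)
    standard, _ = standard_conv_cost(c_in, c_out, k, 1, 1)
    return separable / standard


if __name__ == "__main__":
    # Hypothetical layer: 256 -> 256 channels, 3 x 3 kernel, 38 x 38 feature map.
    print(standard_conv_cost(256, 256, 3, 38, 38))
    print(depthwise_separable_cost(256, 256, 3, 38, 38))
    print(f"{reduction_ratio(256, 256, 3):.3f}")  # prints 0.115
```

For a 3 × 3 kernel the separable form needs roughly 1/9 of the weights and MACs once the channel count is large, because the ratio 1/c_out + 1/k² is dominated by the 1/k² term. This is the kind of saving a lightweight backbone trades against some loss of representational capacity.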

Availability of data and material

The data used during the current study are available from the corresponding author on reasonable request.

Funding

The first four authors were supported by the National Key Research and Development Project under Grants 2018YFC1900800-5, the National Natural Science Foundation of China under Grants 61890930–5 and 61305026, and the Beijing Outstanding Young Scientist Program under Grant BJJWZYJH01201910005020.

Ethics declarations

Conflicts of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ren, K., Huang, L., Fan, C. et al. Real-time traffic sign detection network using DS-DetNet and lite fusion FPN. J Real-Time Image Proc 18, 2181–2191 (2021). https://doi.org/10.1007/s11554-021-01102-1

DOI: https://doi.org/10.1007/s11554-021-01102-1