Abstract

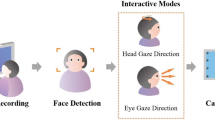

The scarcity of effective communication systems remains a serious problem for disabled people [physical disability, locomotor disability, and amyotrophic lateral sclerosis (ALS)] who cannot speak, walk, or move their hands. Because they depend on others for survival, they need assistive technology to live independently. This paper presents an efficient real-time eye-gaze communication system for disabled persons that uses a low-cost webcam. The system is based on Video-Oculography (VOG), works under natural head movements, and uses a 5-point user-specific calibration (algorithmic calibration) for eye tracking and cursor movement. The parameters calculated during calibration are then used to control the computer with the eyes. Additionally, we designed a graphical user interface (GUI) to evaluate performance and to meet the basic daily needs of disabled individuals. The proposed method enables disabled persons to operate a computer by moving and blinking their eyes, similar to a typical computer user. The developed system costs less than $50, depending on the camera used, which is low compared with existing systems. The system was tested with disabled and non-disabled individuals and achieved an average blinking accuracy of 97.66%. The average typing speed was 15 characters per minute for disabled participants and 20 characters per minute for non-disabled participants. The average visual angle accuracy was 2.2 degrees for disabled participants and 0.8 degrees for non-disabled participants. These results demonstrate that the developed system is robust and accurate.
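The abstract does not say which parameters the 5-point calibration computes. As a rough illustration only, the sketch below assumes a simple per-axis linear mapping (gain and offset) fitted by least squares from five pupil/screen point pairs, and shows how an on-screen error converts to a visual angle. The function names, the sample pupil coordinates, the screen size, and the viewing distance are all hypothetical and are not taken from the paper.

```python
import math

def fit_axis(samples, targets):
    """Least-squares fit of target = gain * sample + offset for one axis."""
    n = len(samples)
    mean_s = sum(samples) / n
    mean_t = sum(targets) / n
    var_s = sum((s - mean_s) ** 2 for s in samples)
    cov = sum((s - mean_s) * (t - mean_t) for s, t in zip(samples, targets))
    gain = cov / var_s
    return gain, mean_t - gain * mean_s

def calibrate(pupil_points, screen_points):
    """Fit independent x and y mappings from the calibration pairs."""
    px, py = zip(*pupil_points)
    sx, sy = zip(*screen_points)
    return fit_axis(px, sx), fit_axis(py, sy)

def gaze_to_screen(params, pupil):
    """Map a pupil position (camera pixels) to a cursor position (screen pixels)."""
    (gain_x, off_x), (gain_y, off_y) = params
    return gain_x * pupil[0] + off_x, gain_y * pupil[1] + off_y

def visual_angle_deg(error_cm, distance_cm):
    """Angle subtended at the eye by an on-screen error of error_cm."""
    return math.degrees(2 * math.atan(error_cm / (2 * distance_cm)))

# Hypothetical calibration targets: center plus four corners of a 1920x1080 screen.
screen_targets = [(960, 540), (0, 0), (1920, 0), (0, 1080), (1920, 1080)]
# Made-up pupil centers recorded while the user looked at each target.
pupil_centers = [(50, 30), (40, 24), (60, 24), (40, 36), (60, 36)]

params = calibrate(pupil_centers, screen_targets)
cursor = gaze_to_screen(params, (55, 33))
```

With this assumed model, a gaze error measured in centimetres on the screen can be passed to `visual_angle_deg` together with the viewing distance to obtain an accuracy figure in degrees, which is the unit the abstract reports. A real system under natural head movement would likely need a richer model than two independent linear fits.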

Data availability

All data generated or analyzed during this study are included in this article.

Acknowledgements

The authors thank the subjects who took part in the experiments. The work presented in this paper was supported by the Department of Science and Technology (DST), India (Project No.: DST/SEED/TIDE/2018/66).

Author information

Contributions

GRC did the implementation work and wrote the manuscript. AK, SG, and DK reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chhimpa, G.R., Kumar, A., Garhwal, S. et al. Development of a real-time eye movement-based computer interface for communication with improved accuracy for disabled people under natural head movements. J Real-Time Image Proc 20, 81 (2023). https://doi.org/10.1007/s11554-023-01336-1

DOI: https://doi.org/10.1007/s11554-023-01336-1