Abstract

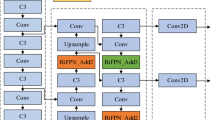

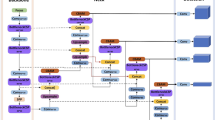

Existing safety helmet detection algorithms struggle in complex construction site scenarios: accuracy is poor, and the number of parameters, the amount of computation, and the model size are all large. To address these challenges, this paper proposes a lightweight safety helmet detection algorithm based on YOLOv5 that balances a lightweight design against accuracy. First, the algorithm integrates the Distribution Shifting Convolution (DSConv) layer and the Squeeze-and-Excitation (SE) attention mechanism, replacing part of the original convolution and C3 modules; this integration enhances feature extraction and representation learning. Second, multi-scale feature fusion is performed on the Ghost module using skip connections, and the resulting module replaces certain C3 modules to reduce model size while maintaining accuracy. Finally, the Bottleneck Attention Module (BAM) is adjusted to suppress irrelevant information and enhance feature extraction in feature-rich regions. Experimental results show that, compared with the original algorithm, the improved model raises the mean average precision (mAP) by 1.0% and reduces the number of parameters by 22.2%, the computation by 20.9%, and the model size by 20.1%, yielding a lightweight detection algorithm.
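To make the SE attention step mentioned in the abstract concrete, the following is a minimal sketch of a generic Squeeze-and-Excitation block in plain Python. It is not the authors' implementation: the function name `squeeze_excite`, the toy tensor shape, the reduction ratio of 2, the random weights, and the omission of bias terms are all assumptions made for illustration.

```python
import math
import random


def squeeze_excite(feature_map, w1, w2):
    """Generic SE block (illustrative sketch, biases omitted).

    feature_map: list of C channels, each an H x W nested list.
    w1: (C // r) x C weights of the reduction layer (hypothetical values).
    w2: C x (C // r) weights of the expansion layer (hypothetical values).
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1
    ]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: reweight every channel by its gate in (0, 1).
    scaled = [
        [[g * v for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates


# Toy input: 4 channels of size 2 x 2, reduction ratio r = 2 (assumed).
random.seed(0)
C, H, W, r = 4, 2, 2, 2
x = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
w2 = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
scaled, gates = squeeze_excite(x, w1, w2)
```

Each channel ends up multiplied by a single learned gate, which is how SE emphasizes informative channels at a small parameter cost. In a trained network the weights would be learned rather than sampled at random.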

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request. The publicly available dataset utilized in this research can be accessed via the following link: https://github.com/wujixiu/helmet-detection.

Acknowledgements

This research was sponsored by the National Natural Science Foundation of China (Grant No. 61203343).

Funding

This study is supported by the National Natural Science Foundation of China, 61203343.

Author information

Contributions

HR provided guidance throughout the research process and managed the funding. AF conceived the study and wrote the manuscript. JZ reviewed the manuscript. HS collected the data. XL analyzed the data. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflicts of interest

The authors declare that no conflict of interest exists.

About this article

Cite this article

Ren, H., Fan, A., Zhao, J. et al. Lightweight safety helmet detection algorithm using improved YOLOv5. J Real-Time Image Proc 21, 125 (2024). https://doi.org/10.1007/s11554-024-01499-5

DOI: https://doi.org/10.1007/s11554-024-01499-5