Abstract

Minimally invasive surgery (MIS) is increasingly popular because it offers smaller incisions, less pain, and faster recovery. Despite these advantages, limited visibility and reduced tactile feedback can lead to instrument and organ damage, which highlights the need for precise instrument detection and identification. Current methods have difficulty detecting multi-scale targets and are often disrupted by blurring, occlusion, and varying lighting conditions during surgery. To address these challenges, this paper introduces URTNet, a novel unstructured feature fusion network for real-time detection of multi-scale surgical instruments in complex environments. First, a Stair Aggregation Network (SAN) is proposed to merge multi-scale information efficiently, minimizing detail loss during feature fusion and improving the detection of blurred and occluded targets. Second, a Multi-scale Feature Weighted Fusion (MFWF) approach is presented to handle the large scale variations among detection targets and to reconstruct the detection layers according to target sizes within endoscopic views. URTNet is validated on the public laparoscopic dataset m2cai16-tool and on a second dataset from Sun Yat-sen University Cancer Center, where it achieves average precision (\(AP_{0.5}\)) of 93.3% and 97.9%, respectively, surpassing other advanced methods.
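For readers unfamiliar with the reported metric, \(AP_{0.5}\) conventionally counts a predicted box as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5. The following is a minimal sketch of that matching criterion only, not the evaluation code used in this work; the function names `iou` and `is_true_positive` and the `(x1, y1, x2, y2)` box layout are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Boxes are (x1, y1, x2, y2) tuples with x1 < x2 and y1 < y2
    (an assumed layout, chosen for this illustration).
    """
    # Corners of the overlapping rectangle, if any.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Clamp to zero so disjoint boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """Hypothetical helper: a prediction matches when IoU >= threshold."""
    return iou(pred_box, gt_box) >= threshold
```

Under this criterion, a prediction shifted by 2 pixels against a 10 by 10 ground-truth box (IoU of about 0.67) counts as a match, while one shifted by 5 pixels (IoU of about 0.33) does not. Average precision is then obtained from the precision-recall curve built over such matches, which this sketch does not cover.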

Data availability

The datasets associated with the current study are available from the corresponding author on reasonable request.

References

Fuchs, K.: Minimally invasive surgery. Endoscopy 34(02), 154–159 (2002)

Yang, Y., Zhao, Z., Shi, P., Hu, S.: An efficient one-stage detector for real-time surgical tools detection in robot-assisted surgery. In: Medical Image Understanding and Analysis: 25th Annual Conference, MIUA 2021, Oxford, United Kingdom, July 12–14, 2021, Proceedings 25, pp. 18–29. Springer (2021)

Loza, G., Valdastri, P., Ali, S.: Real-time surgical tool detection with multi-scale positional encoding and contrastive learning. Healthc. Technol. Lett. 11(2–3), 48–58 (2023)

Checcucci, E., Piazzolla, P., Marullo, G., Innocente, C., Salerno, F., Ulrich, L., Moos, S., Quará, A., Volpi, G., Amparore, D., Piramide, F., Turcan, A., Garzena, V., Garino, D., De Cillis, S., Sica, M., Verri, P., Piana, A., Castellino, L., Alba, S., Di Dio, M., Fiori, C., Alladio, E., Vezzetti, E., Porpiglia, F.: Development of bleeding artificial intelligence detector (blair) system for robotic radical prostatectomy. J. Clin. Med. (2023). https://doi.org/10.3390/jcm12237355

Chen, X., Mumme, R.P., Corrigan, K.L., Mukai-Sasaki, Y., Koutroumpakis, E., Palaskas, N.L., Nguyen, C.M., Zhao, Y., Huang, K., Yu, C., Xu, T., Daniel, A., Balter, P.A., Zhang, X., Niedzielski, J.S., Shete, S.S., Deswal, A., Court, L.E., Liao, Z., Yang, J.: Deep learning-based automatic segmentation of cardiac substructures for lung cancers. Radiother. Oncol. 191, 110061 (2024). https://doi.org/10.1016/j.radonc.2023.110061

Liu, Y., Zhao, Z., Shi, P., Li, F.: Towards surgical tools detection and operative skill assessment based on deep learning. IEEE Trans. Med. Robot. Bionics 4(1), 62–71 (2022)

Rieke, N., Tan, D.J., di San Filippo, C.A., Tombari, F., Alsheakhali, M., Belagiannis, V., Eslami, A., Navab, N.: Real-time localization of articulated surgical instruments in retinal microsurgery. Med. Image Anal. 34, 82–100 (2016)

de la Fuente López, E., García, Á.M., Del Blanco, L.S., Marinero, J.C.F., Turiel, J.P.: Automatic gauze tracking in laparoscopic surgery using image texture analysis. Comput. Methods Programs Biomed. 190, 105378 (2020)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol. 1, pp. 886–893. IEEE (2005)

Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1440–1448 (2015)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2016)

Wang, C.Y., Bochkovskiy, A., Liao, H.Y.M.: Scaled-YOLOv4: scaling cross stage partial network. In: Proceedings of the IEEE/cvf Conference on Computer Vision and Pattern Recognition, pp. 13029–13038 (2021)

Zhu, X., Lyu, S., Wang, X., Zhao, Q.: TPH-YOLOv5: Improved yolov5 based on transformer prediction head for object detection on drone-captured scenarios. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 2778–2788 (2021)

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.Y., Berg, A.C.: SSD: Single shot multibox detector. In: Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14, pp. 21–37. Springer (2016)

Rieke, N., Tan, D.J., Alsheakhali, M., Tombari, F., di San Filippo, C.A., Belagiannis, V., Eslami, A., Navab, N.: Surgical tool tracking and pose estimation in retinal microsurgery. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part I 18, pp. 266–273. Springer (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Proc. Syst. 60, 84–90 (2012)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Chen, Z., Zhao, Z., Cheng, X.: Surgical instruments tracking based on deep learning with lines detection and spatio-temporal context. In: 2017 Chinese Automation Congress (CAC), pp. 2711–2714. IEEE (2017)

Namazi, B., Sankaranarayanan, G., Devarajan, V.: A contextual detector of surgical tools in laparoscopic videos using deep learning. Surg. Endosc., 36(1), 679–688 (2022)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587 (2014)

Zhang, B., Wang, S., Dong, L., Chen, P.: Surgical tools detection based on modulated anchoring network in laparoscopic videos. IEEE Access 8, 23748–23758 (2020)

Lin, T.Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2117–2125 (2017)

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J.: Path aggregation network for instance segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8759–8768 (2018)

Tan, M., Pang, R., Le, Q.V.: EfficientDet: scalable and efficient object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10781–10790 (2020)

Xu, W., Liu, R., Zhang, W., Chao, Z., Jia, F.: Surgical action and instrument detection based on multiscale information fusion. In: 2021 IEEE 13th International Conference on Computer Research and Development (ICCRD), pp. 11–15. IEEE (2021)

Wang, X., Zhang, Y., Li, Y.: Research on laparoscopic surgical instrument detection technology based on multi-attention-enhanced feature pyramid network. SIViP 17(5), 2221–2229 (2023)

Ding, G., Zhao, X., Peng, C., Li, L., Guo, J., Li, D., Jiang, X.: Anchor-free feature aggregation network for instrument detection in endoscopic surgery. IEEE Access 11, 29464–29473 (2023)

Zhang, X., Zhou, X., Lin, M., Sun, J.: ShuffleNet: an extremely efficient convolutional neural network for mobile devices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6848–6856 (2018)

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L.C.: MobileNetV2: Inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4510–4520 (2018)

Liu, Y., Zhao, Z., Chang, F., Hu, S.: An anchor-free convolutional neural network for real-time surgical tool detection in robot-assisted surgery. IEEE Access 8, 78193–78201 (2020)

Huang, L., Li, G., Li, Y., Lin, L.: Lightweight adversarial network for salient object detection. Neurocomputing 381, 130–140 (2020)

Zhong, J., Chen, J., Mian, A.: DualConv: dual convolutional kernels for lightweight deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 34(11), 9528–9535 (2022)

Sun, Y., Pan, B., Fu, Y.: Lightweight deep neural network for articulated joint detection of surgical instrument in minimally invasive surgical robot. J. Digit. Imaging 35(4), 923–937 (2022)

Liu, H., Sun, F., Gu, J., Deng, L.: SF-YOLOv5: a lightweight small object detection algorithm based on improved feature fusion mode. Sensors 22(15), 5817 (2022)

Zhao, W., Syafrudin, M., Fitriyani, N.L.: CRAS-YOLO: a novel multi-category vessel detection and classification model based on yolov5s algorithm. IEEE Access 11, 11463–11478 (2023)

Yu, X., Lyu, W., Zhou, D., Wang, C., Xu, W.: ES-Net: efficient scale-aware network for tiny defect detection. IEEE Trans. Instrum. Meas. 71, 1–14 (2022)

Liu, Z., Zheng, L., Gu, L., Yang, S., Zhong, Z., Zhang, G.: Instrumentnet: an integrated model for real-time segmentation of intracranial surgical instruments. Comput. Biol. Med. 166, 107565 (2023)

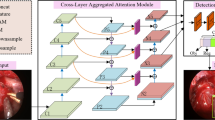

Zhao, X., Guo, J., He, Z., Jiang, X., Lou, H., Li, D.: CLAD-Net: cross-layer aggregation attention network for real-time endoscopic instrument detection. Health Inform. Sci. Syst. 11(1), 58 (2023)

Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft COCO: Common objects in context. In: Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, pp. 740–755. Springer (2014)

Arthur, D., Vassilvitskii, S., et al.: K-Means++: the advantages of careful seeding. In: Soda 7, 1027–1035 (2007)

Ku, T., Yang, Q., Zhang, H.: Multilevel feature fusion dilated convolutional network for semantic segmentation. Int. J. Adv. Rob. Syst. 18(2), 17298814211007664 (2021)

Pradeep, C.S., Sinha, N.: Multi-tasking dssd architecture for laparoscopic cholecystectomy surgical assistance systems. In: 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), pp. 1–4. IEEE (2022)

Shim, D.S., Shim, J.: A modified stochastic gradient descent optimization algorithm with random learning rate for machine learning and deep learning. Int. J. Control Autom. Syst. 21(11), 3825–3831 (2023)

Zhang, Y.F., Ren, W., Zhang, Z., Jia, Z., Wang, L., Tan, T.: Focal and efficient iou loss for accurate bounding box regression. Neurocomputing 506, 146–157 (2022)

Duan, K., Bai, S., Xie, L., Qi, H., Huang, Q., Tian, Q.: CenterNet: keypoint triplets for object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6569–6578 (2019)

Tian, Z., Shen, C., Chen, H., He, T.: FCOS: fully convolutional one-stage object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 9627–9636 (2019)

Zhu, X., Su, W., Lu, L., Li, B., Wang, X., Dai, J.: Deformable DETR: deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159 (2020)

Chen, Q., Wang, Y., Yang, T., Zhang, X., Cheng, J., Sun, J.: You only look one-level feature. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 13039–13048 (2021)

Ge, Z., Liu, S., Wang, F., Li, Z., Sun, J.: YOLOX: exceeding yolo series in 2021. arXiv preprint arXiv:2107.08430 (2021)

Lyu, C., Zhang, W., Huang, H., Zhou, Y., Wang, Y., Liu, Y., Zhang, S., Chen, K.: RTMDet: an empirical study of designing real-time object detectors. arXiv preprint arXiv:2212.07784 (2022)

Li, Y., Mao, H., Girshick, R., He, K.: Exploring plain vision transformer backbones for object detection. In: European Conference on Computer Vision, pp. 280–296. Springer (2022)

ultralytics: yolov5. (2020). https://github.com/ultralytics/yolov5. Accessed 12 Oct 2021

Acknowledgements

This work is supported by the Science and Technology Department of the State Administration of Traditional Chinese Medicine-Zhejiang Provincial Administration of Traditional Chinese Medicine Co-constructed Science and Technology Plan Project-Key Project (Grant No. 2023019186).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Peng, C., Li, Y., Long, X. et al. Urtnet: an unstructured feature fusion network for real-time detection of endoscopic surgical instruments. J Real-Time Image Proc 21, 190 (2024). https://doi.org/10.1007/s11554-024-01567-w

DOI: https://doi.org/10.1007/s11554-024-01567-w