Abstract

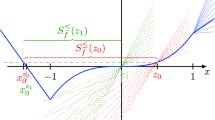

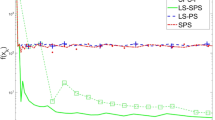

In a recent paper, Loreto et al. introduced the spectral projected subgradient (SPS) method for minimizing a non-differentiable, piecewise convex function, and extensive numerical experiments showed the method to be very efficient. However, no theoretical convergence result was established. This paper presents a modified version of the spectral projected subgradient method (MSPS). MSPS is obtained by applying to SPS the direction approach used by the spectral projected gradient method, version one (SPG1), proposed by Raydan et al. MSPS has stronger convergence properties than SPS. We give a comprehensive theoretical analysis of MSPS and prove its convergence under mild assumptions. The proof follows the traditional scheme of a decreasing distance to the optimal value set, but obtains that decrease from a non-monotone globalization condition rather than from the subgradient definition. To illustrate the behavior of MSPS, we present and discuss numerical results for set covering problems.
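The ingredients named in the abstract (a spectral step length, an SPG1-style projected direction, and a non-monotone acceptance test) can be illustrated with a small sketch. This is not the authors' MSPS: the abstract does not give the update rules, so the test function (a maximum of affine pieces), the box constraint, the Barzilai–Borwein safeguards, the backtracking rule, and every name and parameter below are assumptions chosen only for illustration.

```python
def f_and_subgrad(x, A, b):
    """Value and one subgradient of f(x) = max_i (A[i] . x + b[i])."""
    vals = [sum(a * xj for a, xj in zip(row, x)) + bi for row, bi in zip(A, b)]
    i = max(range(len(vals)), key=vals.__getitem__)
    return vals[i], list(A[i])  # the active row is a subgradient


def project_box(x, lo, hi):
    """Euclidean projection onto the box [lo, hi]^n (assumed feasible set)."""
    return [min(max(xj, lo), hi) for xj in x]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def spectral_projected_subgradient_sketch(A, b, x0, lo, hi, iters=100, M=5,
                                          gamma=1e-4, alpha_min=1e-10,
                                          alpha_max=1e3):
    """Illustrative only: SPG1-style direction with a subgradient in place
    of the gradient, plus a non-monotone backtracking test."""
    x = project_box(x0, lo, hi)
    fx, g = f_and_subgrad(x, A, b)
    history = [fx]            # recent values for the non-monotone test
    best_x, best_f = x, fx    # the iteration is not monotone, so track the best
    alpha = 1.0               # initial spectral step (assumption)
    for _ in range(iters):
        # SPG1-style direction: d = P(x - alpha * g) - x
        trial = project_box([xj - alpha * gj for xj, gj in zip(x, g)], lo, hi)
        d = [tj - xj for tj, xj in zip(trial, x)]
        if all(abs(dj) < 1e-12 for dj in d):
            break
        gd = dot(g, d)
        f_ref = max(history[-M:])
        lam = 1.0
        for _ in range(30):   # capped backtracking: d need not be a descent direction
            x_new = [xj + lam * dj for xj, dj in zip(x, d)]
            f_new, g_new = f_and_subgrad(x_new, A, b)
            if f_new <= f_ref + gamma * lam * gd:
                break
            lam *= 0.5
        # Barzilai-Borwein (spectral) step from the last two iterates
        s = [a - c for a, c in zip(x_new, x)]
        y = [a - c for a, c in zip(g_new, g)]
        sy = dot(s, y)
        alpha = min(alpha_max, max(alpha_min, dot(s, s) / sy)) if sy > 0 else alpha_max
        x, fx, g = x_new, f_new, g_new
        history.append(fx)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


# Usage: minimize the infinity norm max(|x1|, |x2|) over the box [-1, 1]^2.
A = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
b = [0.0, 0.0, 0.0, 0.0]
best_x, best_f = spectral_projected_subgradient_sketch(A, b, [0.8, -0.5], -1.0, 1.0)
```

Because the acceptance test compares against the maximum of the last `M` function values, individual iterates may increase the objective; the sketch therefore returns the best point seen rather than the final iterate.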

References

Barzilai, J., Borwein, J.M.: Two point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Beasley, J.E.: OR-Library: distributing test problems by electronic mail. J. Oper. Res. Soc. 41, 1069–1072 (1990)

Bertsekas, D.P.: On the Goldstein–Levitin–Polyak gradient projection method. IEEE Trans. Autom. Control 21, 174–184 (1976)

Bertsimas, D., Tsitsiklis, J.N.: Introduction to Linear Optimization. Athena Scientific, Belmont (1997)

Birgin, E.G., Martinez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Opt. 10, 1196–1211 (2000)

Birgin, E.G., Martinez, J.M., Raydan, M.: Algorithm 813: SPG-software for convex-constrained optimization. ACM Trans. Math. Softw. 27, 340–349 (2001)

Birgin, E.G., Martinez, J.M., Raydan, M.: Spectral projected gradient methods. Encycl. Optim. 1, 3652–3659 (2009)

Boyd, S., Mutapcic, A.: Subgradient methods. Notes for EE364b, Stanford University (2008)

Caprara, A., Fischetti, M., Toth, P.: A heuristic method for the set covering problem. Oper. Res. 47, 730–743 (1999)

Crema, A., Loreto, M., Raydan, M.: Spectral projected subgradient with a momentum term for the lagrangean dual approach. Comput. Oper. Res. 34, 3174–3186 (2007)

La Cruz, W., Martinez, J.M., Raydan, M.: Spectral residual method without gradient information for solving large-scale nonlinear systems. Math. Comput. 75, 1449–1466 (2006)

Geoffrion, A.M.: Lagrangean relaxation for integer programming. Math. Progr. Study 2, 82–114 (1974)

Goldstein, A.A.: Convex programming in Hilbert space. Bull. Am. Math. Soc. 70, 709–710 (1964)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Held, M., Wolfe, P., Crowder, H.: Validation of subgradient optimization. Math. Progr. 6, 62–88 (1974)

Levitin, E.S., Polyak, B.T.: Constrained minimization problems. USSR Comput. Math. Math. Phys. 6, 1–50 (1966)

Plaut, D., Nowlan, S., Hinton, G.E.: Experiments on learning by back propagation. Technical Report CMU-CS-86-126, Department of Computer Science, Carnegie Mellon University, Pittsburgh, PA (1986)

Polyak, B.T.: A general method of solving extremum problems. Sov. Math. Dokl. 8, 593–597 (1967)

Raydan, M.: On the Barzilai and Borwein choice of steplength for the gradient method. IMA J. Numer. Anal. 13, 321–326 (1993)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Opt. 7, 26–33 (1997)

Shor, N.Z.: Minimization Methods for Non-differentiable Functions. Springer Series in Computational Mathematics. Springer, Berlin (1985)

Acknowledgments

The authors thank José Mario Martínez for his constructive suggestions and two anonymous referees whose comments helped improve the quality of this paper.

Cite this article

Loreto, M., Crema, A. Convergence analysis for the modified spectral projected subgradient method. Optim Lett 9, 915–929 (2015). https://doi.org/10.1007/s11590-014-0792-0

DOI: https://doi.org/10.1007/s11590-014-0792-0