Abstract

We provide upper bounds on the Hausdorff distances between the efficient set and its discretization in the decision space, and between the Pareto set (also called the Pareto front) and its discretization in the objective space, in the context of bi-objective convex quadratic optimization on a compact feasible set. Our results imply that if \(t\) is the dispersion of the sampled points in the discretized feasible set, then the Hausdorff distances in both the decision space and the objective space are \(O(\sqrt{t})\) as \(t\) decreases to zero.


References

Applegate, E.A., Feldman, G., Hunter, S.R., Pasupathy, R.: Multi-objective ranking and selection: optimal sampling laws and tractable approximations via SCORE. J. Simul. 14(1), 21–40 (2020). https://doi.org/10.1080/17477778.2019.1633891

Audet, C., Bigeon, J., Cartier, D., Le Digabel, S., Salomon, L.: Performance indicators in multiobjective optimization. Eur. J. Oper. Res. 292, 397–422 (2021). https://doi.org/10.1016/j.ejor.2020.11.016

Augusto, O.B., Bennis, F., Caro, S.: Multiobjective optimization involving quadratic functions. J. Opt. (2014). https://doi.org/10.1155/2014/406092

Beato-Moreno, A., Luque-Calvo, P., Osuna-Gómez, R., Rufián-Lizana, A.: Finding efficient points in multiobjective quadratic programming with strictly convex objective functions. In: Caballero, R., Ruiz, F., Steuer, R. (eds.) Advances in Multiple Objective and Goal Programming, Lecture Notes in Economics and Mathematical Systems, vol. 455. Springer, Heidelberg (1997). https://doi.org/10.1007/978-3-642-46854-4_40

Beck, A.: On the convexity of a class of quadratic mappings and its application to the problem of finding the smallest ball enclosing a given intersection of balls. J. Glob. Opt. 39, 113–126 (2007). https://doi.org/10.1007/s10898-006-9127-8

Beck, A.: Convexity properties associated with nonconvex quadratic matrix functions and applications to quadratic programming. J. Opt. Theory Appl. 142, 1–29 (2009). https://doi.org/10.1007/s10957-009-9539-y

Beck, A., Eldar, Y.C.: Regularization in regression with bounded noise: A Chebyshev center approach. SIAM J. Matrix Anal. Appl. 29(2), 606–625 (2007). https://doi.org/10.1137/060656784

Bogoya, J.M., Vargas, A., Schütze, O.: The averaged Hausdorff distances in multi-objective optimization: a review. Mathematics 7 (2019). https://doi.org/10.3390/math7100894

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, New York (2004)

Eichfelder, G.: Adaptive Scalarization Methods in Multiobjective Optimization. Springer, Heidelberg (2008)

Eldar, Y.C., Beck, A., Teboulle, M.: A minimax Chebyshev estimator for bounded error estimation. IEEE Trans. Signal Process. 56(4), 1388–1397 (2008). https://doi.org/10.1109/TSP.2007.908945

Faulkenberg, S.L., Wiecek, M.M.: On the quality of discrete representations in multiple objective programming. Opt. Eng. 11, 423–440 (2010). https://doi.org/10.1007/s11081-009-9099-x

Feldman, G.: Sampling Laws for Multi-Objective Simulation Optimization on Finite Sets. Ph.D. thesis, Purdue University, West Lafayette, IN, USA (2017)

Glasmachers, T.: Challenges of convex quadratic bi-objective benchmark problems. In: Proceedings of the Genetic and Evolutionary Computation Conference, GECCO ’19, pp. 559–567. Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3321707.3321708

Han, Y., Wei, S.W.: \(\phi\)-harmonic maps and \(\phi\)-superstrongly unstable manifolds. The J. Geomet. Anal. 32 (2022). https://doi.org/10.1007/s12220-021-00770-6

Herzel, A., Ruzika, S., Thielen, C.: Approximation methods for multiobjective optimization problems: a survey. INFORMS J. Comput. (2021). https://doi.org/10.1287/ijoc.2020.1028

Hunter, S.R., Applegate, E.A., Arora, V., Chong, B., Cooper, K., Rincón-Guevara, O., Vivas-Valencia, C.: An introduction to multi-objective simulation optimization. ACM Trans. Model. Comput. Simul. 29(1), 7:1–7:36 (2019). https://doi.org/10.1145/3299872

Jägersküpper, J.: How the (1+1) ES using isotropic mutations minimizes positive definite quadratic forms. Theor. Comput. Sci. 361, 38–56 (2006). https://doi.org/10.1016/j.tcs.2006.04.004

Kettner, L.J., Deng, S.: On well-posedness and Hausdorff convergence of solution sets of vector optimization problems. J. Opt. Theory Appl. 153, 619–632 (2012). https://doi.org/10.1007/s10957-011-9947-7

Li, M., Yao, X.: Quality evaluation of solution sets in multiobjective optimisation: A survey. ACM Comput. Surv. 52(2) (2019). https://doi.org/10.1145/3300148

Maioli, D.S., Lavor, C., Gonçalves, D.S.: A note on computing the intersection of spheres in \(\mathbb{R} ^n\). ANZIAM J. 59, 271–279 (2017). https://doi.org/10.1017/S1446181117000372

Miettinen, K.: Nonlinear Multiobjective Optimization. Kluwer Academic Publishers, Boston (1999)

Niederreiter, H.: Random number generation and Quasi-Monte Carlo methods. CBMS-NSF Regional Conference Series in Applied Mathematics. Society for Industrial and Applied Mathematics, Philadelphia, PA (1992). https://doi.org/10.1137/1.9781611970081

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer Series in Operations Research and Financial Engineering, 2nd edn. Springer, New York (2006)

Pardalos, P.M., Žilinskas, A., Žilinskas, J.: Non-Convex Multi-Objective Optimization. Springer Optimization and Its Applications, Springer, Switzerland (2017)

Ruzika, S., Wiecek, M.M.: Approximation methods in multiobjective programming. J. Opt. Theory Appl. 126, 473–501 (2005). https://doi.org/10.1007/s10957-005-5494-4

Sayin, S.: Measuring the quality of discrete representations of efficient sets in multiple objective mathematical programming. Math. Program. Ser. A 87, 543–560 (2000). https://doi.org/10.1007/s101070050011

Schütze, O., Esquivel, X., Lara, A., Coello Coello, C.A.: Using the averaged Hausdorff distance as a performance measure in evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 16(4), 504–522 (2012). https://doi.org/10.1109/TEVC.2011.2161872

Steponavičė, I., Shirazi-Manesh, M., Hyndman, R.J., Smith-Miles, K., Villanova, L.: On sampling methods for costly multi-objective black-box optimization. In: P.M. Pardalos, A. Zhigljavsky, J. Žilinskas (eds.) Advances in Stochastic and Deterministic Global Optimization, Springer Optimization and Its Applications, chap. 15, pp. 273–296. Springer International, Cham (2016). https://doi.org/10.1007/978-3-319-29975-4_15

Strang, G.: Linear Algebra and its Applications, 4th edn. Cengage Learning (2006)

Tapp, K.: Differential Geometry of Curves and Surfaces. Springer, New York (2016)

Toure, C., Auger, A., Brockhoff, D., Hansen, N.: On bi-objective convex-quadratic problems. In: Deb, K., Goodman, E., Coello Coello, C.A., Klamroth, K., Miettinen, K., Mostaghim, S., Reed, P. (eds.) Evolutionary Multi-Criterion Optimization, pp. 3–14. Springer International Publishing, Cham (2019). https://doi.org/10.1007/978-3-030-12598-1_1

Žilinskas, A.: On the worst-case optimal multi-objective global optimization. Opt. Lett. 7, 1921–1928 (2013). https://doi.org/10.1007/s11590-012-0547-8

Xu, S., Freund, R.M., Sun, J.: Solution methodologies for the smallest enclosing circle problem. Comput. Opt. Appl. 25, 283–292 (2003). https://doi.org/10.1023/A:1022977709811

Yakowitz, S., L’Ecuyer, P., Vázquez-Abad, F.: Global stochastic optimization with low-dispersion point sets. Oper. Res. 48(6), 939–950 (2000). https://doi.org/10.1287/opre.48.6.939.12393

Acknowledgements

Our early work on the upper bound for spherical level sets relied on results in [21], from which we gained significant insight. The authors thank the anonymous referees for helpful comments, Raghu Pasupathy for helpful discussions and comments, and Margaret Wiecek for suggesting references in the Pareto set approximation literature. The second author thanks Susan Sanchez, Dashi Singham, Roberto Szechtman, and the Department of Operations Research, Naval Postgraduate School for their hospitality during her sabbatical in 2021–2022, when parts of this article were written.


The authors thank the National Science Foundation for support under grant CMMI-1554144.

A Supporting results: gradients, tangents, and inscribed q-Balls


In this section, we provide several supporting results regarding the geometry of Problem (Q) under Assumption 1. These results involve viewing the efficient set as a differentiable curve (Sect. A.1); determining bounds on the angles between tangent vectors, gradients, and efficient points (Sect. A.2); and determining whether a q-ball of a given radius fits inside an ellipsoid (Sect. A.3).

A.1 The efficient set as a differentiable curve

To begin, from (2), the efficient set can be traced as the curve (see also [32])

where \({ {z}}(0)={ {x}}_2^*={{0}}_q\), \({ {z}}(1)={ {x}}_1^*\), and for simplicity, define the shorthand notation \({ {z}}_n{:}{=}{ {z}}(\beta _n)\) for any subscript n.
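The endpoint conditions above can be checked numerically on a small example. The following is a minimal sketch for \(q=2\), assuming (this form is not restated in this appendix) that \(f_1(x)=\tfrac12 (x-x_1^*)^\intercal H_1(x-x_1^*)\) and \(f_2(x)=\tfrac12 x^\intercal H_2 x\), so that the weighted-sum minimizer is \(z(\beta )=\beta H_\beta ^{-1}H_1x_1^*\) with \(H_\beta =\beta H_1+(1-\beta )H_2\). The matrices `H1`, `H2`, the point `X1S`, and all function names are hypothetical choices made for illustration.

```python
# Sketch: trace the efficient curve z(beta) for a small q = 2 example.
# ASSUMPTION (not taken from the text): f_1(x) = 0.5 (x - x1s)^T H1 (x - x1s)
# and f_2(x) = 0.5 x^T H2 x, so that x_2^* = 0 and
# z(beta) = beta * H_beta^{-1} H1 x1s, where H_beta = beta*H1 + (1 - beta)*H2.

def mat_vec(M, v):
    """Multiply a 2x2 matrix by a length-2 vector."""
    return [M[0][0] * v[0] + M[0][1] * v[1],
            M[1][0] * v[0] + M[1][1] * v[1]]

def solve2(M, b):
    """Solve the 2x2 linear system M x = b by Cramer's rule."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [(b[0] * M[1][1] - M[0][1] * b[1]) / det,
            (M[0][0] * b[1] - b[0] * M[1][0]) / det]

def z(beta, H1, H2, x1s):
    """Minimizer of beta*f_1 + (1 - beta)*f_2 under the assumed objectives."""
    H_beta = [[beta * H1[i][j] + (1 - beta) * H2[i][j] for j in range(2)]
              for i in range(2)]
    rhs = [beta * c for c in mat_vec(H1, x1s)]
    return solve2(H_beta, rhs)

# Hypothetical positive definite Hessians and minimizer of objective 1.
H1 = [[2.0, 0.5], [0.5, 1.0]]
H2 = [[1.0, 0.0], [0.0, 3.0]]
X1S = [1.0, 2.0]

if __name__ == "__main__":
    for beta in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(beta, z(beta, H1, H2, X1S))
```

Under these assumptions, `z(0.0, ...)` returns the origin and `z(1.0, ...)` returns `X1S`, matching \(z(0)=x_2^*=0_q\) and \(z(1)=x_1^*\).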

By definition, for any efficient point, there exists no direction in which we can improve both objectives at the same time. For each \(k,k'\in \{1,2\},k\ne k'\), let the set of descent directions on objective k at \({ {z}}(\beta )\in {\mathcal {E}}\) be denoted by

where \({\mathcal {L}}_k({ {z}}(\beta ))\subset {\mathcal {H}}_k({ {z}}(\beta ))\); the lack of common descent directions for efficient points implies \({\mathcal {H}}_k({ {z}}(\beta ))\cap {\mathcal {H}}_{k'}({ {z}}(\beta ))=\emptyset\). This fact leads to the following Lemma 12 regarding the relative positions of the points in the efficient set and whether or not their level sets intersect.

Lemma 12

For \(\beta _1,\beta _2\in [0,1]\), \({\mathcal {L}}_1({ {z}}(\beta _1))\cap {\mathcal {L}}_2({ {z}}(\beta _2))=\emptyset\) if and only if \(\beta _2<\beta _1\).

Proof

First, we prove that \({\mathcal {L}}_1({ {z}}(\beta _1))\cap {\mathcal {L}}_2({ {z}}(\beta _2))=\emptyset\) implies \(\beta _2<\beta _1\) by contrapositive. Suppose \(\beta _2\ge \beta _1\). If \(\beta _1=\beta _2\), then \({ {z}}_1={ {z}}_2\in {\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_1)\). If \(\beta _2>\beta _1\), then \(f_2({ {z}}_1)<f_2({ {z}}_2)\) and \(f_1({ {z}}_1)>f_1({ {z}}_2)\). Then for \(\beta _3\in (\beta _1,\beta _2)\), \(f_2({ {z}}_1)<f_2({ {z}}_3)<f_2({ {z}}_2)\) and \(f_1({ {z}}_2)<f_1({ {z}}_3)<f_1({ {z}}_1)\), hence \({ {z}}_3\in {\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_2)\). For the other direction, suppose \(\beta _2<\beta _1\). Then for \(\beta _3\in (\beta _2,\beta _1)\), \(f_2({ {z}}_2)<f_2({ {z}}_3)<f_2({ {z}}_1)\) and \(f_1({ {z}}_2)>f_1({ {z}}_3)>f_1({ {z}}_1)\). Since \({ {z}}_3\in {\mathcal {E}}\), \({\mathcal {H}}_1({ {z}}_3)\cap {\mathcal {H}}_2({ {z}}_3)=\emptyset\). Since \({\mathcal {L}}_2({ {z}}_2)\subset {\mathcal {H}}_2({ {z}}_3)\) and \({\mathcal {L}}_1({ {z}}_1)\subset {\mathcal {H}}_1({ {z}}_3)\), then \({\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_2)=\emptyset\). \(\square\)
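The monotonicity used in this proof (as \(\beta\) increases, \(f_1\) decreases and \(f_2\) increases along the efficient curve) can be illustrated numerically. The sketch below again assumes \(f_1(x)=\tfrac12 (x-x_1^*)^\intercal H_1(x-x_1^*)\) and \(f_2(x)=\tfrac12 x^\intercal H_2 x\) with hypothetical data for \(q=2\); it checks a single intermediate point \(z_3\) and does not verify emptiness of an intersection.

```python
# Sketch: monotonicity of f_1 and f_2 along z(beta), and the intermediate point
# z_3 used in the proof of Lemma 12. ASSUMED objectives (not from the text):
# f_1(x) = 0.5 (x - X1S)^T H1 (x - X1S), f_2(x) = 0.5 x^T H2 x.

H1 = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical Hessian of objective 1
H2 = [[1.0, 0.0], [0.0, 3.0]]   # hypothetical Hessian of objective 2
X1S = [1.0, 2.0]                # hypothetical minimizer of objective 1

def mat_vec(M, v):
    return [M[0][0] * v[0] + M[0][1] * v[1],
            M[1][0] * v[0] + M[1][1] * v[1]]

def z(beta):
    """Solve (beta*H1 + (1 - beta)*H2) z = beta * H1 X1S by Cramer's rule."""
    A = [[beta * H1[i][j] + (1 - beta) * H2[i][j] for j in range(2)]
         for i in range(2)]
    b = [beta * c for c in mat_vec(H1, X1S)]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - b[0] * A[1][0]) / det]

def quad(H, d):
    """Return 0.5 * d^T H d."""
    Hd = mat_vec(H, d)
    return 0.5 * (d[0] * Hd[0] + d[1] * Hd[1])

def f1(x):
    return quad(H1, [x[0] - X1S[0], x[1] - X1S[1]])

def f2(x):
    return quad(H2, x)

betas = [i / 10 for i in range(11)]
f1_vals = [f1(z(b)) for b in betas]
f2_vals = [f2(z(b)) for b in betas]
f1_decreasing = all(a > b for a, b in zip(f1_vals, f1_vals[1:]))
f2_increasing = all(a < b for a, b in zip(f2_vals, f2_vals[1:]))

def in_both_sublevel_sets(beta1, beta2, beta3):
    """Check whether z(beta3) lies in L_1(z(beta1)) and in L_2(z(beta2))."""
    z1, z2, z3 = z(beta1), z(beta2), z(beta3)
    return f1(z3) <= f1(z1) and f2(z3) <= f2(z2)

if __name__ == "__main__":
    print(f1_decreasing, f2_increasing)
    print(in_both_sublevel_sets(0.2, 0.7, 0.45))
```

With \(\beta _1<\beta _3<\beta _2\), the point \(z_3\) is a witness that the two sublevel sets intersect, which is the contrapositive step of the proof.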

Further, for each \(k,k'\in \{1,2\},k\ne k'\), [32] and the parameterization in (34) imply that for any \(\beta \in [0,1]\),

and, for \(H_\beta {:}{=}\beta H_1+(1-\beta )H_2\), the curve \({ {z}}(\beta )\) is a differentiable curve in \(\mathbb {R}^q\) with tangent vector (defined with respect to \(\beta\))

Importantly, from (36) and (37), it follows that \(\Vert { {z}}'(\beta ) \Vert > 0\) for all \(\beta \in [0,1]\).
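As a hedged sketch of why the tangent vector cannot vanish, suppose (an assumption made here for illustration, consistent with \(x_2^*=0_q\)) that \(f_1(x)=\tfrac12 (x-x_1^*)^\intercal H_1(x-x_1^*)\) and \(f_2(x)=\tfrac12 x^\intercal H_2 x\), so that \(z(\beta )\) solves \(H_\beta z(\beta )=\beta H_1x_1^*\). Differentiating this identity with respect to \(\beta\) gives

\[
(H_1-H_2)\,z(\beta )+H_\beta \,z'(\beta )=H_1x_1^*
\quad \Longrightarrow \quad
z'(\beta )=H_\beta ^{-1}\bigl (\nabla f_2(z(\beta ))-\nabla f_1(z(\beta ))\bigr ).
\]

If \(z'(\beta )\) were zero, then \(\nabla f_1(z(\beta ))=\nabla f_2(z(\beta ))\); combined with the stationarity condition \(\beta \nabla f_1(z(\beta ))+(1-\beta )\nabla f_2(z(\beta ))=0\), both gradients would vanish, forcing \(z(\beta )=x_1^*=x_2^*\). This is impossible when the two minimizers are distinct. The display above is one possible form and may differ from the expressions in (36) and (37).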

A.2 Angles: tangent vectors and gradients

In this section, we provide results on the angles between points in the efficient set, tangent vectors, and gradients. For \(k,k'\in \{1,2\}, k\ne k'\), consider a point in the efficient set, \({ {z}}_k={ {z}}(\beta _k)\in {\mathcal {E}}\), \(\beta _k\in [0,1]\) and any sequence of efficient points

such that \({ {z}}_{k',n}\rightarrow { {z}}_k\) as \(n\rightarrow \infty\), \({ {z}}_{k',n}\ne { {z}}_k\) for all n, \(\beta _{k',n}\in [0,1]\) for all n, and \({ {z}}_{k',n}\) approaches \({ {z}}_k\) exclusively from one side; that is, \(\beta _1<\beta _{2,n}\) for all n when \(k=1\), or \(\beta _{1,n}<\beta _2\) for all n when \(k=2\). Define the tangent vector at \({ {z}}_k\) with respect to the direction of approach as

and notice that \(\Vert { {\tau}}({ {z}}_k) \Vert =1\). Then from (36) and (37),

Therefore if \(\beta _k\in (0,1)\), \(-\nabla f_k({ {z}}_k)/\Vert \nabla f_k({ {z}}_k) \Vert =\nabla f_{k'}({ {z}}_k)/\Vert \nabla f_{k'}({ {z}}_{k}) \Vert\) and from (37) and (40), we have

If \(\beta _k\in \{0,1\}\), so that \({ {z}}_1={ {x}}^*_2\) or \({ {z}}_2={ {x}}^*_1\), then from (40) and using \(\beta =0\) and \(\beta =1\) in (36), we have

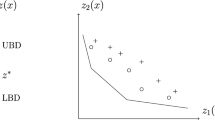

Figure 4 demonstrates the relative positions of the gradient and tangent vectors at the minimizers for an example in which \(q=2\). For general decision space dimension q, [4, Theorem 3.2, p. 369] asserts that \({\mathcal {E}}=\{{ {z}}(\beta ):\beta \in [0,1]\}\) forms a finite arc of a hyperbola whose extreme points are the minimizers \({ {x}}^*_1\) and \({ {x}}^*_2\).

Next, we present Lemma 13 which provides results on the angles between the efficient points, gradients, and tangent vectors at the minimizers.

Lemma 13

Let \(k,k'\in \{1,2\},k\ne k'\) and let \(\{{ {z}}_{k',n} = { {z}}(\beta _{k',n}), n\ge 0\}\) be the sequence of efficient points converging to \({ {z}}_k={ {z}}(\beta _k)\) defined in (38). The following hold:

1. If \(\theta _{k,n}\) is the angle between \({ {z}}_{k',n}-{ {z}}_k\) and \(-\nabla f_{k}({ {z}}_k)\), then \(\cos \theta _{k,n}>0\) for all n.

2. If \(\theta _k\) is the angle between \({ {\tau}}({ {z}}_{k})\) and \(-\nabla f_{k}({ {z}}_k)\), then \(\cos \theta _k\ge (\kappa (H_\beta ))^{-1}\).

3. There exists a constant \(c_0\in [1,\infty )\) such that \(\cos \theta _{k,n}\ge c_0^{-1}>0\) for all n.

Proof

Part 1: First, by the definition of \(\{{ {z}}_{k',n},n\ge 0\}\) in (38), we have \({ {z}}_{k',n}\notin \{{ {z}}_k, { {x}}^*_k\}\) for all n and \({ {z}}_{k}\ne { {x}}^*_{k}\). Then this result holds because under Assumption 1, for \(k,k'\in \{1,2\},k'\ne k\), \({ {z}}_{k',n}-{ {z}}_k\) is a strict descent direction on objective k by (34). Therefore, it must make an acute angle with the steepest descent direction. More formally,

Part 2: From (43), by continuity of the relevant functions, (41), (42), and the facts that for a square positive definite matrix \(H=A^\intercal A\), \(\Vert H{ {x}} \Vert \le \Vert H \Vert \Vert { {x}} \Vert\) for vector \({ {x}}\), \(\Vert H \Vert =\Vert A \Vert ^2=\Vert Q\Lambda Q^\intercal \Vert =\lambda _q(H)\), and \(\Vert H^{-1} \Vert =\Vert Q\Lambda ^{-1}Q^\intercal \Vert =\lambda _q(H^{-1})=1/\lambda _1(H)\),

Part 3: This part follows from the first two. \(\square\)
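The key inequality behind Part 2 can be sketched as follows, under the assumption (our reading of (41) and (42)) that the tangent direction is proportional to \(H^{-1}g\), where \(g\) is the relevant nonzero negative gradient and \(H\) is positive definite with eigenvalues \(\lambda _1(H)\le \cdots \le \lambda _q(H)\). Using \(g^\intercal H^{-1}g\ge \lambda _q(H)^{-1}\Vert g\Vert ^2\) and \(\Vert H^{-1}g\Vert \le \lambda _1(H)^{-1}\Vert g\Vert\),

\[
\cos \angle (H^{-1}g,\,g)
=\frac{g^\intercal H^{-1}g}{\Vert H^{-1}g\Vert \,\Vert g\Vert }
\ge \frac{\lambda _q(H)^{-1}\Vert g\Vert ^2}{\lambda _1(H)^{-1}\Vert g\Vert ^2}
=\frac{\lambda _1(H)}{\lambda _q(H)}
=(\kappa (H))^{-1}.
\]

Taking \(H=H_\beta\) recovers the form of the bound stated in Part 2; the intermediate steps in the original proof may be organized differently.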

A.3 Osculating spheres and inscribed q-Balls

Finally, we complete the supporting results with Lemmas 14–16. First, Lemma 14 gives conditions on the lengths of the major axes of the sublevel sets for a \(t\)-radius ball touching the boundary to fit inside the sublevel set. Then, Lemma 15 gives conditions on the required distance between two efficient points for a \(t\)-radius ball to fit inside the intersection of the relevant sublevel sets. Finally, Lemma 16 uses several inscribed balls to determine the distance between a point in \(B({\mathcal {E}},t)\cap {\mathcal {J}}_{k'}({ {x}}^*_k)\) and the tangent vector \({\mathcal {T}}_{\mathcal {L}}({ {x}}^*_k)\) for small enough t.

Lemma 14

Let \(k\in \{1,2\}\) and \({ {x}}_0\in {\mathcal {X}}\). If \(\hbox { diam(}{\mathcal {L}}_k({ {x}}_0)\hbox {)}\ge 2\kappa ^*t\) and \({ {x}}_1\in {\mathcal {L}}_k({ {x}}_0)\) is such that \({ {x}}_1 = { {x}}_0-t(\nabla f_k({ {x}}_0)/\Vert \nabla f_k({ {x}}_0) \Vert )\), then \(B({ {x}}_1,t)\subset {\mathcal {L}}_k({ {x}}_0)\).

Proof (Sketch)

We show only \(k=2\); \(k=1\) holds by a similar process. Let \({ {x}}_0\in {\mathcal {X}}\), \(\lambda _{2i}{:}{=}\lambda _i(H_2)\), \(i=1,\ldots ,q\), and notice \({ {x}}_1={ {x}}_0-t(H_2{ {x}}_0/\Vert H_2{ {x}}_0 \Vert )\) is a step of length \(t\) from \({ {x}}_0\) in the direction of steepest descent on objective 2. Thus, \({ {x}}_0\in \hbox { bd(}B({ {x}}_1,t)\hbox {)}\). To simplify calculations, for \({\mathcal {L}}_2({ {x}}_0)\), apply the rotation \({ {w}}=Q_2^\intercal { {x}}, { {a}}_0=Q_2^\intercal { {x}}_0\) from the proof of Lemma 5. Define \({\mathcal {L}}_{2r}({ {a}}_0) {:}{=}\{{ {w}}\colon{ {w}}^\intercal \Lambda _2{ {w}}\le { {a}}_0^\intercal \Lambda _2{ {a}}_0\}\), \({\mathcal {J}}_{2r}({ {a}}_0){:}{=}\hbox { bd(}{\mathcal {L}}_{2r}({ {a}}_0) \hbox {)}\). Now, we consider \({ {a}}_1 = {{ {a}}_0-t(\Lambda _2{ {a}}_0)/\Vert \Lambda _2{ {a}}_0 \Vert }\), where \({ {a}}_0\in \hbox { bd(}B({ {a}}_1,t)\hbox {)}\).

To ensure \(B({ {a}}_1,t)\subset {\mathcal {L}}_{2r}({ {a}}_0)\), the maximum curvature at any point on \({\mathcal {J}}_{2r}({ {a}}_0)\) must be less than or equal to that at any point on a \(t\)-radius sphere. The maximum curvature on an ellipse is achieved at a vertex along the major axis; see, e.g., [31]. To determine the maximum curvature at a point on \({\mathcal {J}}_{2r}({ {a}}_0)\), it is sufficient to consider the intersection of \({\mathcal {J}}_{2r}({ {a}}_0)\) with the \(w_1\)-\(w_q\) plane (see [18, p. 43], [15, p. 33]) and let \({ {a}}_0=(a_{01},0,\ldots ,0)\), resulting in the ellipse \(\{(w_1,w_q)\colon\lambda _{21}w_1^2+\lambda _{2q} w_q^2 = \lambda _{21} a_{01}^2 \}=\{(w_1,w_q)\colon w_1^2/a_{01}^2 + w_q^2/(a_{01}^2/\kappa _2) = 1 \}\). Write this ellipse as the plane curve \(g(s)=(a_{01}\cos (s),(a_{01}/\sqrt{\kappa _2})\sin (s))\). The length of the semi-major axis is \(a_{01}\), the length of the semi-minor axis is \((a_{01}/\sqrt{\kappa _2})\), and the maximum curvature is \(a_{01}/(a_{01}^2/\kappa _2)=\kappa _2/a_{01}\) at \(s=0\) [31, p. 77]. Then for an osculating circle at \((a_{11},a_{1q})=(a_{01}-t,0)\) having radius \(t\), curvature \(1/t\), and passing through \((a_{01},0)\) to fit inside the ellipse, we require \((1/t)\ge \kappa _2/a_{01}\), hence \(a_{01}\ge t\kappa _2\). Now since \(a_{01}=\hbox { diam(}{\mathcal {L}}_{2r}({ {a}}_0)\hbox {)}/2 =\hbox { diam(}{\mathcal {L}}_{2}({ {x}}_0)\hbox {)}/2\ge t\kappa _2\), then \(B({ {a}}_1,t)\subset {\mathcal {L}}_{2r}({ {a}}_0)\) and \(B({ {x}}_1,t)\subset {\mathcal {L}}_2({ {x}}_0)\). \(\square\)
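The two-dimensional curvature argument can be checked numerically. The sketch below uses the plane curve \(g(s)=(a_{01}\cos (s),(a_{01}/\sqrt{\kappa _2})\sin (s))\) from the proof and tests, by sampling points on the circle, whether a circle of radius \(t\) centered at \((a_{01}-t,0)\) stays inside the ellipse. The function names, the sampling resolution, and the tolerance are illustrative assumptions; sampling gives evidence rather than a proof.

```python
import math

def ellipse_curvature(a, b, s):
    """Curvature of the plane curve g(s) = (a cos s, b sin s)."""
    return a * b / (a * a * math.sin(s) ** 2 + b * b * math.cos(s) ** 2) ** 1.5

def circle_fits(a01, kappa2, t, n=3600, tol=1e-12):
    """Sample the circle of radius t centered at (a01 - t, 0) and check that
    every sampled point satisfies w1^2/a01^2 + wq^2/(a01^2/kappa2) <= 1."""
    b = a01 / math.sqrt(kappa2)          # semi-minor axis
    cx = a01 - t                         # circle center on the major axis
    for i in range(n):
        phi = 2.0 * math.pi * i / n
        w1 = cx + t * math.cos(phi)
        wq = t * math.sin(phi)
        if (w1 / a01) ** 2 + (wq / b) ** 2 > 1.0 + tol:
            return False
    return True

if __name__ == "__main__":
    a01, kappa2 = 2.0, 4.0               # hypothetical values
    print(ellipse_curvature(a01, a01 / math.sqrt(kappa2), 0.0), kappa2 / a01)
    print(circle_fits(a01, kappa2, 0.4), circle_fits(a01, kappa2, 0.6))
```

For these hypothetical values the threshold \(a_{01}\ge t\kappa _2\) corresponds to \(t\le 0.5\): the sampled circle with \(t=0.4\) stays inside the ellipse, while the one with \(t=0.6\) does not.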

Lemma 15

Let \({ {z}}_1,{ {z}}_2\in {\mathcal {E}}, \beta _1,\beta _2\in [0,1], \beta _1<\beta _2\) be efficient points. There exists a constant \(\eta _0\in [1,\infty )\), dependent on \(\kappa ^*\), such that if \(\Vert { {z}}_1-{ {z}}_{2} \Vert > 2t\kappa ^*\eta _0,\) then there exists \({ {x}}_0\in \hbox { conv}(\{{ {z}}_1,{ {z}}_2\})\) such that \(B({ {x}}_0,t)\subset \hbox { int}({\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_{2}({ {z}}_{2}))\).

Proof

The main idea for the proof is that for each level set \({\mathcal {L}}_k({ {z}}_k)\), \(k\in \{1,2\}\), we can use Lemma 14 to fit a (relatively large) osculating ball at \({ {z}}_k\). Then we fit a smaller t-radius ball, centered at a point \({ {w}}_k\) along \(\hbox { conv(}\{{ {z}}_1,{ {z}}_2\}\hbox {)}\), inside this larger osculating ball. As long as \(\Vert { {z}}_1-{ {z}}_2 \Vert\) is sufficiently large and the respective centers \({ {w}}_1\) and \({ {w}}_2\) of the t-radius balls are appropriately spaced along \(\hbox { conv(}\{{ {z}}_1,{ {z}}_2\}\hbox {)}\), the lemma holds.

To begin, let the postulates hold. Since \(\beta _1<\beta _2\), \({\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_2)\ne \emptyset\) by Lemma 12. To fit the osculating balls inside the level sets, first, for each \(k\in \{1,2\}\), let \({ {x}}_k\) be the point resulting from a step of size \(u_{k}(t)\) from \({ {z}}_k\) in the direction of steepest descent on objective k, so that

and \(\Vert { {x}}_k-{ {z}}_k \Vert =u_{k}(t)\). By Lemma 14, if

then \(B({ {x}}_k, u_{k}(t))\subset {\mathcal {L}}_k({ {z}}_k)\). Let \({ {w}}_k\) be the orthogonal projection of \({ {x}}_k\) onto the line segment \(\hbox { conv(}\{{ {z}}_1,{ {z}}_2\}\hbox {)}\), and let \(\zeta _k\) be the angle between \({ {w}}_k-{ {z}}_k,{ {x}}_k-{ {z}}_k\). Since \(\zeta _1,\zeta _2\) are also the angles between \({ {z}}_2-{ {z}}_1, -\nabla f_1({ {z}}_1)\) and between \({ {z}}_1-{ {z}}_2, -\nabla f_2({ {z}}_2)\), respectively, the results in Lemma 13 apply. Thus, \(\cos \zeta _k=\sin (\pi /2-\zeta _k)\ge c_0^{-1}>0\) implies \(\zeta _k\in [0,\pi /2)\), and hence there exists \(\tilde{c}_0\in [1,\infty )\) such that \(1-\sin \zeta _k\ge (\tilde{c}_0)^{-1}>0\) regardless of t and the choice of \({ {z}}_1,{ {z}}_2\). To ensure \(B({ {w}}_k,t)\subset B({ {x}}_k,u_{k}(t))\), we require

Finally, for each \(k,k'\in \{1,2\},k'\ne k\), by the definition of \({ {w}}_k\), we have \(\Vert { {z}}_k-{ {w}}_k \Vert +\Vert { {w}}_k-{ {z}}_{k'} \Vert =\Vert { {z}}_1-{ {z}}_2 \Vert\). Then to ensure \(\Vert { {z}}_k-{ {w}}_k \Vert \le \Vert { {w}}_k-{ {z}}_{k'} \Vert\), we require

Combining the requirements of (44), (45), and (46), it follows that

If we can find values of \(u_1(t), u_2(t)\), and a requirement on the distance \(\Vert { {z}}_1-{ {z}}_2 \Vert\) such that (47) is satisfied, then for each \(k,k'\in \{1,2\},k'\ne k\), we have \(B({ {w}}_k,t)\subseteq B({ {x}}_k,u_k(t))\subset {\mathcal {L}}_k({ {z}}_k)\) and \(\Vert { {z}}_1-{ {z}}_2 \Vert = \Vert { {z}}_1-{ {w}}_1 \Vert +\Vert { {w}}_1-{ {w}}_2 \Vert +\Vert { {w}}_2-{ {z}}_2 \Vert\), which implies that \(B({ {w}}_k,t)\subset {\mathcal {L}}_{k'}({ {z}}_{k'})\). Since \({\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_2)\) is convex, then \(\hbox { conv(}B({ {w}}_1,t)\cup B({ {w}}_2,t)\hbox {)}\subset {\mathcal {L}}_1({ {z}}_1)\cap {\mathcal {L}}_2({ {z}}_2)\). Thus, \(\exists \,{ {x}}_0\in \hbox { conv(}\{{ {w}}_1,{ {w}}_2\}\hbox {)}\) such that the result holds.

Finally, we find \(u_1(t), u_2(t)\), and a requirement on \(\Vert { {z}}_1-{ {z}}_2 \Vert\) such that (47) is satisfied. First, for each \(k\in \{1,2\}\), \(\beta _1<\beta _2\) and the triangle inequality imply

Since \(\kappa ^*\ge 1\) while \(\cos \zeta _k\le 1\), and since \(1-\sin \zeta _k\ge (\tilde{c}_0)^{-1}\), we satisfy (47) if

Now select \(u_k(t)=t\tilde{c}_0\) for each \(k\in \{1,2\}\) and let \(\eta _0{:}{=}\tilde{c}_0\). Since \(\Vert { {z}}_1-{ {z}}_2 \Vert > 2t\kappa ^*\eta _0\), the result holds. \(\square\)
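The containment \(B(w_k,t)\subset B(x_k,u_k(t))\) used above can be sketched with elementary trigonometry, assuming the orthogonal projection \(w_k\) falls within the segment \(\hbox { conv}(\{z_1,z_2\})\). Since \(\Vert x_k-z_k\Vert =u_k(t)\) and \(\zeta _k\) is the angle at \(z_k\) between \(w_k-z_k\) and \(x_k-z_k\), we have \(\Vert x_k-w_k\Vert =u_k(t)\sin \zeta _k\), so

\[
B(w_k,t)\subset B(x_k,u_k(t))
\iff \Vert x_k-w_k\Vert +t\le u_k(t)
\iff u_k(t)\,(1-\sin \zeta _k)\ge t.
\]

With \(u_k(t)=t\tilde{c}_0\) and \(1-\sin \zeta _k\ge (\tilde{c}_0)^{-1}\), the right-hand condition holds. This is a reading of the geometry and may differ in form from requirement (45).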

Lemma 16

Let \(k,k'\in \{1,2\}\), \(k\ne k'\), and let \({ {y}}\) be a point in the intersection of the t-expansion of \({\mathcal {E}}\) with the boundary of the sublevel set \({\mathcal {L}}_{k'}({ {x}}^*_k)\); that is, \({ {y}}\in B({\mathcal {E}},t)\cap {\mathcal {J}}_{k'}({ {x}}^*_k)\). Then there exist \(t_1'>0\) and a constant \(c_1\in [1,\infty )\), both dependent on \(\kappa ^*\), such that for all \(t\le t_1'\), \({\text{ dist}}({ {y}},{\mathcal {T}}_{\mathcal {L}}({ {x}}^*_k))\le t c_1\).

Proof

We find an upper bound on \({\text{dist}}({ {y}},{\mathcal {T}}_{\mathcal {L}}({ {x}}^*_k))\) by fitting a series of three balls inside the sublevel set \({\mathcal {L}}_{k'}({ {x}}^*_k)\). The first ball is the largest ball, constructed as follows. Take a step of size \(\ell /\kappa _{k'}\) from \({ {x}}^*_{k}\) in the steepest descent direction,

Then Lemma 14 implies \(B({ {x}}_1,\ell /\kappa _{k'})\subset {\mathcal {L}}_{k'}({ {x}}^*_k)\). Next, we construct the second ball by taking a step \(s_k(t)<\Vert { {x}}_1-{ {x}}^*_k \Vert =\ell /\kappa _{k'}\) from \({ {x}}^*_k\) in the steepest descent direction,

Let \({ {z}}_3({ {x}}_2)\) be the efficient point such that \({ {x}}_2\) is its orthogonal projection onto the line \(\hbox { conv(}\{{ {x}}_1,{ {x}}_k^*\}\hbox {)}\); such a point exists for small enough \(s_k(t)\). We choose \(s_k(t)\) so that the third ball, \(B({ {z}}_3({ {x}}_2),t)\), is a subset of the first ball. That is, we find \(s_k(t)\) such that \(B({ {z}}_3({ {x}}_2),t)\subset\) \(B({ {x}}_1,\ell /\kappa _{k'}) \subset\) \({\mathcal {L}}_{k'}({ {x}}^*_k)\). To find \(s_k(t)\), first, notice that if we ensure

it follows that \(B({ {z}}_3({ {x}}_2),t)\subset B({ {x}}_1,\ell /\kappa _{k'})\). Let \(\theta _{3,t}\) be the angle between \({ {z}}_3({ {x}}_2)-{ {x}}_k^*\) and \({ {x}}_2-{ {x}}_k^*\). By Lemma 13, \(\cos \theta _{3,t}\ge c_0^{-1}\) for all t. Then to ensure (49) holds, we need \(s_k(t)>t\) such that \(\Vert { {z}}_3({ {x}}_2)-{ {x}}_1 \Vert ^2 = (\ell /\kappa _{k'}-s_k(t))^2+(s_k(t)\tan \theta _{3,t})^2 \le (\ell /\kappa _{k'}-t)^2;\) re-arranging terms implies

Choose \(s_k(t)=t(\kappa _{k'}+1)\) and plug into (50) to see that (49) holds for all

Now for t small enough and \(s_k(t)= t(\kappa _{k'}+1)\), we have \(B({ {z}}_3({ {x}}_2),t)\) \(\subset\) \(B({ {x}}_1,\ell /\kappa _{k'})\) \(\subset\) \({\mathcal {L}}_{k'}({ {x}}^*_k)\). Let \({ {y}}_3\) be any point in \(B({ {z}}_3({ {x}}_2),t)\subset B({\mathcal {E}},t)\) such that \({\text{ dist}}({ {y}}_3,{\mathcal {T}}_{\mathcal {L}}({ {x}}^*_k))\ge {\text{ dist}}({ {y}},{\mathcal {T}}_{\mathcal {L}}({ {x}}^*_k))\); such a point exists by construction. Now, the result holds since for all t small enough and \(c_1{:}{=}(1+c_0(\kappa ^*+1))\), we have

\(\square\)
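The ball-containment inequality in this proof, \((\ell /\kappa _{k'}-s_k(t))^2+(s_k(t)\tan \theta _{3,t})^2\le (\ell /\kappa _{k'}-t)^2\) with \(s_k(t)=t(\kappa _{k'}+1)\), can be explored numerically. In the sketch below, the threshold returned by `t_threshold` comes from our own rearrangement of that inequality in the worst case \(\cos \theta _{3,t}=c_0^{-1}\); it is an assumption for illustration and is not claimed to match the paper's bound on \(t\). All names and numerical values are hypothetical.

```python
import math

def ball_inside(ell, kappa, t, theta):
    """Check (R - s)^2 + (s tan(theta))^2 <= (R - t)^2 with R = ell/kappa and
    s = t (kappa + 1), the step size chosen in the proof."""
    R = ell / kappa
    s = t * (kappa + 1.0)
    lhs = (R - s) ** 2 + (s * math.tan(theta)) ** 2
    return lhs <= (R - t) ** 2

def t_threshold(ell, kappa, c0):
    """Largest t for which the inequality holds when cos(theta) = 1/c0.
    ASSUMPTION: obtained by rearranging the inequality above, namely
    t * ((kappa + 1)^2 * c0^2 - 1) <= 2 * ell."""
    return 2.0 * ell / ((kappa + 1.0) ** 2 * c0 ** 2 - 1.0)

if __name__ == "__main__":
    ell, kappa, c0 = 1.0, 2.0, 2.0       # hypothetical values
    theta = math.acos(1.0 / c0)          # worst-case angle allowed by Lemma 13
    print(t_threshold(ell, kappa, c0))
    print(ball_inside(ell, kappa, 0.05, theta), ball_inside(ell, kappa, 0.06, theta))
```

For these values the rearranged threshold is \(2/35\), so the check passes at \(t=0.05\) and fails at \(t=0.06\), illustrating why the lemma is stated only for small enough \(t\).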


About this article

Cite this article

Ondes, B.E., Hunter, S.R. An upper bound on the Hausdorff distance between a Pareto set and its discretization in bi-objective convex quadratic optimization. Optim Lett 17, 45–74 (2023). https://doi.org/10.1007/s11590-022-01920-7


DOI: https://doi.org/10.1007/s11590-022-01920-7