Abstract

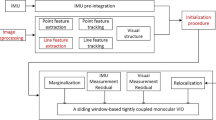

Visual simultaneous localization and mapping (VSLAM) is an essential technology for the autonomous movement of vehicles. Visual-inertial odometry (VIO) is often used as the front end of VSLAM because it provides rich information and is lightweight and robust. This article proposes FPL-VIO, an optimization-based fast vision-inertial odometer that uses both points and lines. Traditional VIO mostly uses points as landmarks and ignores most of the geometric structure information, so its accuracy degrades under motion blur and in texture-less areas. Some researchers improve accuracy by adding lines as landmarks. However, almost all of them use the line segment detector (LSD) and the line band descriptor (LBD) for line processing, which is very time-consuming. This article first proposes a fast line feature description and matching method based on the line midpoint and compares three line detection algorithms: LSD, the fast line detector (FLD), and edge drawing lines (EDLines). Then, the measurement model of the line is introduced in detail. Finally, FPL-VIO is built by adding the above method to the monocular visual-inertial state estimator (VINS-Mono), yielding an optimization-based fast vision-inertial odometer that uses points together with lines described by their midpoints. Compared with VIO using points and lines (PL-VIO), the line processing efficiency of FPL-VIO improves by a factor of 3–4 while maintaining the same accuracy.
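To make the idea of describing and matching lines through their midpoints more concrete, the following is a minimal sketch and not the authors' implementation. It assumes each line segment is reduced to its midpoint, that some binary point descriptor has already been computed around that midpoint (stored here as a Python integer), and that lines are matched between two frames by mutual nearest neighbour in Hamming distance. The names `midpoint`, `hamming`, `match_lines`, and the threshold `max_dist` are hypothetical and chosen only for illustration.

```python
from typing import List, Optional, Tuple

# A 2D line segment given by its endpoints (x1, y1, x2, y2) in pixels.
Segment = Tuple[float, float, float, float]


def midpoint(seg: Segment) -> Tuple[float, float]:
    """Return the midpoint of a 2D line segment."""
    x1, y1, x2, y2 = seg
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_lines(desc_prev: List[int], desc_curr: List[int],
                max_dist: int = 30) -> List[Tuple[int, int]]:
    """Mutual nearest-neighbour matching on midpoint descriptors.

    desc_prev and desc_curr hold one binary descriptor per line, assumed
    (for this sketch) to be computed around that line's midpoint.
    Returns index pairs (i, j) into desc_prev and desc_curr.
    """
    def nearest(query: int, pool: List[int]) -> Optional[int]:
        if not pool:
            return None
        return min(range(len(pool)), key=lambda j: hamming(query, pool[j]))

    matches = []
    for i, d in enumerate(desc_prev):
        j = nearest(d, desc_curr)
        # Reject when there is no candidate or the best one is too far.
        if j is None or hamming(d, desc_curr[j]) > max_dist:
            continue
        # Cross-check: line i must also be the best match for line j.
        if nearest(desc_curr[j], desc_prev) == i:
            matches.append((i, j))
    return matches
```

Because each line contributes a single point descriptor, matching reduces to ordinary point-feature matching, which is one plausible reason a midpoint-based scheme can be cheaper than computing a full line descriptor such as LBD.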

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61774157 and 81771388), Beijing National Natural Science Foundation of China (No. 4182075), and the National Key Research and Development Plan (Nos. 2020YFC2004501, 2020YFC2004503 and 2017YFF0107704).

Additional information

Recommended by Associate Editor Jin-Hua She

Colored figures are available in the online version at https://link.springer.com/journal/11633

Wen-Kuan Li received the B.Sc. degree in prospecting technology and engineering from China University of Petroleum, China in 2018. He is a master's student at the Aerospace Information Research Institute, Chinese Academy of Sciences, China.

His research interests include simultaneous localization and mapping and visual-inertial odometry.

Hao-Yuan Cai received the B.Eng. degree in optoelectronics technology from Tsinghua University, China in 1998, and the Ph.D. degree in physical electronics from the Institute of Electronics, Chinese Academy of Sciences, China in 2003. He is currently a researcher at the Institute of Electronics of the University of Chinese Academy of Sciences, China.

His research interests include micro-electro-mechanical system (MEMS) sensors and their micro-systems, wireless industrial internet of things (IoT) sensors, and mobile robot indoor navigation.

Sheng-Lin Zhao received the B.Sc. degree in automation from University of Science and Technology Beijing, China in 2019. He is a master's student at the Aerospace Information Research Institute, Chinese Academy of Sciences, China.

His research interests include simultaneous localization and mapping and multisensor fusion.

Ya-Qian Liu received the B.Sc. degree in electronic information engineering from China Agricultural University, China in 2020. She is currently a master's student at the Aerospace Information Research Institute, Chinese Academy of Sciences, China.

Her research interest is industrial smart sensors.

Chun-Xiu Liu received the B.Eng. degree in biochemical industry from Beijing University of Chemical Technology, China in 1999, the M.Eng. degree in molecular genetics from the Institute of Microbiology, Chinese Academy of Sciences, China in 2003, and the Ph.D. degree from Chinese Academy of Sciences Electronics, China in 2008.

Her research interests include sensors and rapid detection systems.

Cite this article

Li, WK., Cai, HY., Zhao, SL. et al. A Fast Vision-inertial Odometer Based on Line Midpoint Descriptor. Int. J. Autom. Comput. 18, 667–679 (2021). https://doi.org/10.1007/s11633-021-1303-2

DOI: https://doi.org/10.1007/s11633-021-1303-2