Abstract

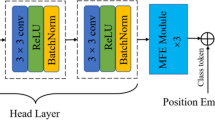

Image-based fire recognition is of great significance for fire prevention and loss reduction because it enables early fire detection and warning. To address the low accuracy of existing fire recognition methods and the high error rate in detecting tiny targets, this study proposes a fire recognition model based on a channel-spatial attention mechanism. First, EfficientNetV2, which offers strong feature extraction ability and high computational efficiency, is adopted as the backbone network, and the convolutional block attention module (CBAM) is introduced into its first and last convolutional layers. By increasing the weights of the feature layers along both the channel and spatial dimensions, CBAM enhances the semantic information of flame and smoke features and makes the model focus on the feature information of fire images. Then, label smoothing is applied to the cross-entropy loss function to keep the model from predicting labels too confidently during training, which improves the generalization ability of the recognition model. The experimental results show that the CBAM-EfficientNetV2 model reaches an accuracy of 98.9% for fire image recognition, 98.5% for smoke image recognition, and 96.1% for small target detection. Compared with existing methods, the proposed method also achieves higher accuracy, precision, recall, and F1-score. Finally, the recognition results on fire images are visualized with the Grad-CAM technique, which demonstrates the model's effectiveness in detecting tiny targets more intuitively.
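For reference, the CBAM module mentioned above is defined in the original paper by Woo et al. (ECCV 2018) as a channel attention map followed by a spatial attention map, both applied to an intermediate feature map F ∈ ℝ^{C×H×W}. The equations below restate that generic formulation only; the exact placement and hyperparameters (such as the reduction ratio r of the shared MLP) used in CBAM-EfficientNetV2 are not given in this abstract.

$$
\begin{aligned}
M_c(F) &= \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) \in \mathbb{R}^{C\times 1\times 1},\\
F' &= M_c(F)\otimes F,\\
M_s(F') &= \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F');\,\mathrm{MaxPool}(F')])\big) \in \mathbb{R}^{1\times H\times W},\\
F'' &= M_s(F')\otimes F'.
\end{aligned}
$$

Here σ is the sigmoid function, the pooling inside M_c is taken over the spatial dimensions while the pooling inside M_s is taken along the channel axis, f^{7×7} is a convolution with a 7 × 7 kernel, and ⊗ denotes element-wise multiplication with broadcasting.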
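Label smoothing on the cross-entropy loss can be illustrated with a short, framework-free sketch. It assumes the common uniform formulation q_k = (1 - ε)·y_k + ε/K, where y is the one-hot target and K is the number of classes. The smoothing factor ε = 0.1 and the three hypothetical classes in the usage block are illustrative assumptions, not values reported for this model.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax: z_k - logsumexp(z)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def smooth_labels(target: int, num_classes: int, epsilon: float) -> List[float]:
    """Mix the one-hot target with a uniform distribution over K classes."""
    uniform = epsilon / num_classes
    return [(1.0 - epsilon) * (1.0 if k == target else 0.0) + uniform
            for k in range(num_classes)]


def label_smoothing_cross_entropy(logits: Sequence[float], target: int,
                                  epsilon: float = 0.1) -> float:
    """Cross-entropy between smoothed targets and softmax(logits).

    epsilon = 0 recovers ordinary cross-entropy; the default 0.1 is an
    illustrative assumption, not a value taken from the paper.
    """
    log_p = log_softmax(logits)
    q = smooth_labels(target, len(logits), epsilon)
    return -sum(qk * lp for qk, lp in zip(q, log_p))


if __name__ == "__main__":
    # Hypothetical 3-class setup (e.g. fire, smoke, normal); class 0 is correct.
    confident = [20.0, 0.0, 0.0]
    moderate = [5.0, 0.0, 0.0]
    for eps in (0.0, 0.1):
        print(eps,
              round(label_smoothing_cross_entropy(confident, 0, eps), 4),
              round(label_smoothing_cross_entropy(moderate, 0, eps), 4))
```

With ε = 0.1 the extremely confident logits receive a larger loss than the moderately confident ones, whereas plain cross-entropy (ε = 0) rewards them. This over-confidence penalty is the effect label smoothing is meant to introduce.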
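The accuracy, precision, recall, and F1-score used for the comparison are standard confusion-matrix quantities. The sketch below computes them for a single positive class; treating "fire" as label 1 and "non-fire" as label 0 is a hypothetical choice, and the averaging scheme the paper uses for multiple classes is not stated in this abstract.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with one class treated as positive."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    # Guard against division by zero when a class never appears.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical labels: 1 = fire, 0 = non-fire.
    y_true = [1, 1, 1, 0, 0, 1, 0, 1]
    y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
    print(binary_metrics(y_true, y_pred))
```

For the hypothetical labels above there are 4 true positives, 1 false positive, 1 false negative, and 2 true negatives, which gives an accuracy of 0.75 and a precision, recall, and F1-score of 0.8 each.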
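The Grad-CAM visualization follows the formulation of Selvaraju et al. (IJCV 2020): for a class c with pre-softmax score y^c and the feature maps A^k of a chosen convolutional layer, each map is weighted by its spatially averaged gradient, and the weighted sum is passed through a ReLU. Which layer of CBAM-EfficientNetV2 is visualized is not stated in this abstract.

$$
\alpha_k^{c} = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^{c}}{\partial A_{ij}^{k}},
\qquad
L_{\text{Grad-CAM}}^{c} = \mathrm{ReLU}\Big(\sum_{k}\alpha_k^{c}A^{k}\Big),
$$

where Z is the number of spatial positions (i, j) in A^k. The resulting map is typically upsampled to the input resolution and overlaid on the image as a heatmap.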


Acknowledgements

This work was supported by the National Key Research and Development Program of China (No. 2021YFC1523502-03) and the Fundamental Research Funds for the Central Universities, China (No. FRF-IDRY-21-016).

Ethics declarations

The authors declare that they have no conflicts of interest related to this work.

Additional information

Bo Wang received the M. Sc. degree in safety engineering from the School of Civil and Resource Engineering, University of Science and Technology Beijing, China in 2023. He currently works as a safety engineer at Shanghai New Micro Semiconductor, China.

His research interests include image recognition and risk assessment.

Guozhong Huang received the B. Sc., M. Sc. and Ph. D. degrees in municipal engineering from Harbin Institute of Technology, China in 1994, 1997 and 2001, respectively. He is a full-time professor in the Department of Safety and Science, University of Science and Technology Beijing, China. He has published more than 60 papers in academic journals.

His research interests include safety assessment and risk analysis, risk assessment of automotive product defects, general consumer product safety management, public safety and emergency response, and early risk warning for the service safety of intelligent industrial equipment.

Haoxuan Li received the M. Sc. degree in environmental engineering from the University of New South Wales, Australia in 2020. He is currently a Ph. D. candidate in safety science and engineering at the School of Civil and Resource Engineering, University of Science and Technology Beijing, China.

His research interests include indirect injury, risk assessment and big data analysis.

Xiaolong Chen received the M. Sc. degree in security engineering from the University of Science and Technology Beijing, China in 2022. He now works at Huawei, China.

His research interests include fire identification and network security.

Lei Zhang received the Ph. D. degree in metallurgical engineering from the University of Science and Technology Beijing, China. He is currently a lecturer at the School of Civil and Resource Engineering, University of Science and Technology Beijing, China.

His research interests include metal smelting safety and product collateral damage.

Xuehong Gao received the Ph. D. degree in industrial engineering from Pusan National University, Republic of Korea in 2021. He is an associate professor at the University of Science and Technology Beijing, China. He has published more than 20 research articles in journals including IJPR, IJPE, JCLP, CAIE, and ANOR.

His research interests include robust optimization, disaster management, safety science, and operations research.

Colored figures are available in the online version at https://link.springer.com/journal/11633

About this article

Cite this article

Wang, B., Huang, G., Li, H. et al. Hybrid CBAM-EfficientNetV2 Fire Image Recognition Method with Label Smoothing in Detecting Tiny Targets. Mach. Intell. Res. 21, 1145–1161 (2024). https://doi.org/10.1007/s11633-023-1445-5

DOI: https://doi.org/10.1007/s11633-023-1445-5