Abstract

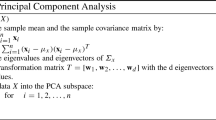

Principal component analysis (PCA) is a widely used method for multivariate data analysis that projects the original high-dimensional data onto a low-dimensional subspace with maximum variance. In practice, however, we are often more likely to obtain only a few compressed sensing (CS) measurements than the complete high-dimensional data, owing to the high cost of data acquisition and storage. In this paper, we propose a novel Bayesian algorithm that learns the PCA solutions for the original data from these CS measurements alone. To this end, we use a generative latent variable model with a structure prior that models both the sparsity of the original data and the effective dimensionality of the latent space. The proposed algorithm has two important advantages: 1) the effective dimensionality of the latent space is determined automatically and need not be pre-specified; 2) thanks to the sparsity modeling, a single measurement matrix suffices to maintain the original data space, rather than multiple measurement matrices, which makes the algorithm storage efficient. Experimental results on synthetic and real-world datasets show that the proposed algorithm can accurately learn the PCA solutions for the original data, which can in turn be applied to reconstruction tasks with favorable results.
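To make the setting concrete, the following is a minimal NumPy sketch of the two ingredients the abstract refers to: ordinary PCA on fully observed data, and CS measurements obtained with a single shared measurement matrix. It is not the proposed Bayesian algorithm. The sizes `n`, `d`, `k`, `m`, the Gaussian measurement matrix `Phi`, the synthetic low-rank data, and the helper `pca` are all illustrative assumptions, and the synthetic data is not sparse.

```python
import numpy as np


def pca(X, k):
    """Return the mean, the top-k principal directions (as rows), and their variances."""
    mu = X.mean(axis=0)
    Xc = X - mu
    # The right singular vectors of the centered data are the principal directions.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    variances = s[:k] ** 2 / (X.shape[0] - 1)
    return mu, Vt[:k], variances


rng = np.random.default_rng(0)
n, d, k, m = 200, 50, 3, 20  # hypothetical sizes: samples, ambient dim, latent dim, measurements

# Synthetic data lying close to a k-dimensional subspace (assumption for illustration).
W = rng.standard_normal((d, k))
Z = rng.standard_normal((n, k))
X = Z @ W.T + 0.01 * rng.standard_normal((n, d))

# Reference PCA solution computed from the complete data.
mu, components, variances = pca(X, k)

# CS measurements: one Gaussian measurement matrix shared by every sample.
Phi = rng.standard_normal((m, d)) / np.sqrt(m)
Y = X @ Phi.T  # each row is an m-dimensional measurement of a d-dimensional sample

# Fraction of the total variance captured by the top-k directions.
total_var = np.var(X, axis=0, ddof=1).sum()
captured = variances.sum() / total_var
```

In this sketch only `Y` and `Phi` would be available to a compressive method; `components` and `variances` are the full-data quantities that such a method aims to recover.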

Acknowledgements

This work was supported by the Key Program of the National Natural Science Foundation of China (NSFC) (Grant No. 61732006).

Author information

Di Ma received the BS degree in computer science from Nanjing University of Aeronautics and Astronautics (NUAA), China in 2013. She is currently pursuing a PhD degree at NUAA, and her research interests include machine learning and pattern recognition.

Songcan Chen received the BS degree from Hangzhou University (now merged into Zhejiang University), China, the MS degree from Shanghai Jiao Tong University, China, and the PhD degree from Nanjing University of Aeronautics and Astronautics (NUAA), China in 1983, 1985, and 1997, respectively. He joined NUAA in 1986 and has been a full-time professor with the Department of Computer Science and Engineering since 1998. He has authored or co-authored over 170 peer-reviewed scientific papers and received Honorable Mentions for the 2006, 2007, and 2010 Best Paper Awards of the journal Pattern Recognition. His current research interests include pattern recognition, machine learning, and neural computing.

Cite this article

Ma, D., Chen, S. Bayesian compressive principal component analysis. Front. Comput. Sci. 14, 144303 (2020). https://doi.org/10.1007/s11704-019-8308-9

DOI: https://doi.org/10.1007/s11704-019-8308-9