Abstract

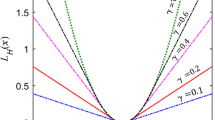

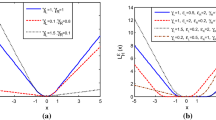

Robust regression plays an important role in many machine learning problems. A common approach relies on the Huber loss and an iteratively reweighted ℓ2 method. However, because the Huber loss is not smooth and its corresponding distribution cannot be represented as a Gaussian scale mixture, such an approach is extremely difficult to handle within a probabilistic framework. To address these limitations, this paper proposes two novel losses and their corresponding probability functions. The first, Soft Huber, is well suited for modeling non-Gaussian noise. The second, Nonconvex Huber, helps produce much sparser results when imposed as a prior on the regression vector. With suitable tuning parameters, both can represent any ℓq loss (1/2 ⩽ q < 2), which makes the regression model more robust. We also show that both distributions have an elegant form: a Gaussian scale mixture with a generalized inverse Gaussian mixing density. This enables us to devise an expectation maximization (EM) algorithm for solving the regression model. Through EM we obtain an adaptive weight, which is useful for removing noisy data or irrelevant features in regression problems. We apply our model to the face recognition problem and show that it not only reduces the impact of noisy pixels but also removes more irrelevant face images. Our experiments demonstrate promising results on two datasets.
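For readers unfamiliar with the baseline the abstract refers to, the following is a minimal sketch of Huber regression solved by iteratively reweighted ℓ2 (least squares). It illustrates only the classical approach, not the Soft Huber or Nonconvex Huber models proposed in the paper, whose exact forms are not given here. The function names `huber_weights` and `huber_irls`, the threshold `delta`, and the small ridge term are illustrative assumptions.

```python
import numpy as np


def huber_weights(residuals, delta=1.0):
    """Per-sample weights psi(r)/r for the Huber loss.

    Residuals inside [-delta, delta] keep weight 1 (quadratic zone);
    larger residuals are down-weighted to delta / |r| (linear zone).
    """
    abs_r = np.abs(residuals)
    return np.where(abs_r <= delta, 1.0, delta / np.maximum(abs_r, 1e-12))


def huber_irls(X, y, delta=1.0, n_iter=100, tol=1e-10):
    """Fit y ~ X @ w under the Huber loss by iteratively reweighted least squares.

    Hypothetical helper for illustration; returns the coefficient vector
    and the final per-sample weights.
    """
    d = X.shape[1]
    # Start from the ordinary least-squares solution.
    w = np.linalg.lstsq(X, y, rcond=None)[0]
    for _ in range(n_iter):
        s = huber_weights(y - X @ w, delta)
        Xs = X * s[:, None]  # rows of X scaled by their weights
        # Weighted normal equations; the tiny ridge term (an assumption)
        # guards against a singular system.
        w_new = np.linalg.solve(X.T @ Xs + 1e-10 * np.eye(d), Xs.T @ y)
        converged = np.linalg.norm(w_new - w) < tol
        w = w_new
        if converged:
            break
    return w, huber_weights(y - X @ w, delta)
```

The returned weights play a role loosely analogous to the adaptive weights mentioned in the abstract: samples with large residuals receive small weights and therefore contribute little to the fit.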

Author information

Jin Li is now a PhD student in the Department of Computer Science at Shanghai Jiao Tong University, China. He received the BS degree in computer science from East China University of Science and Technology, China. His research interests include machine learning and data mining, particularly statistical methods and deep learning techniques for real-world applications such as face recognition, software auto-tuning, and recommender systems.

Quan Chen received the BS degree in computer science from Tongji University, China, and the MS and PhD degrees in computer science from Shanghai Jiao Tong University, China, in 2007, 2009, and 2014, respectively. From 2014 to 2016, he was a postdoctoral researcher in the Department of Computer Science, University of Michigan, Ann Arbor, USA. He is now a tenure-track associate professor in the Department of Computer Science and Engineering, Shanghai Jiao Tong University, China. His research interests include parallel and distributed processing, task scheduling, cloud computing, datacenter management, and accelerator management.

Jingwen Leng is an assistant professor in the John Hopcroft Computer Science Center and the Computer Science & Engineering Department at Shanghai Jiao Tong University, China. He received his PhD from the University of Texas at Austin, USA, where he focused on improving the efficiency and resiliency of general-purpose GPUs. He is currently interested in the interaction among systems, architecture, and deep learning.

Weinan Zhang is now a tenure-track assistant professor at Shanghai Jiao Tong University, China. His research interests include machine learning and big data mining, particularly deep learning and reinforcement learning techniques for real-world data mining scenarios such as computational advertising, recommender systems, text mining, web search, and knowledge graphs. He has published over 70 papers in international conferences and journals, including KDD, WWW, SIGIR, IJCAI, AAAI, ICML, ICLR, JMLR, and TKDE.

Minyi Guo received the BS and ME degrees in computer science from Nanjing University, China, and the PhD degree in information science from the University of Tsukuba, Japan, in 1982, 1986, and 1998, respectively. From 1998 to 2000, he was a research associate at NEC Soft, Ltd., Japan. He was a visiting professor in the Department of Computer Science, Georgia Institute of Technology, USA. In addition, he was a full professor with The University of Aizu, Japan, and is Head of the Department of Computer Science and Engineering, Shanghai Jiao Tong University, China. He is a fellow of the IEEE and has published more than 200 papers in well-known conferences and journals. His main interests include automatic parallelization and data-parallel languages, bioinformatics, compiler optimization, and high-performance computing.

Cite this article

Li, J., Chen, Q., Leng, J. et al. Probabilistic robust regression with adaptive weights — a case study on face recognition. Front. Comput. Sci. 14, 145314 (2020). https://doi.org/10.1007/s11704-019-9097-x

DOI: https://doi.org/10.1007/s11704-019-9097-x