Abstract

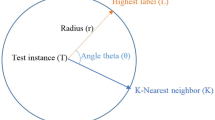

Label distribution learning (LDL) is a new learning paradigm for dealing with label ambiguity, and many studies have achieved strong performance with it. Compared with traditional supervised learning, annotation with label distributions is more expensive. Directly applying existing active learning (AL) approaches, which aim to reduce annotation cost in traditional learning, may degrade their performance. To address the high annotation cost in LDL, a crucial but rarely studied problem, we propose active label distribution learning via kernel maximum mean discrepancy (ALDL-kMMD). ALDL-kMMD captures the structural information of both data and labels, and extracts the most representative instances from the unlabeled pool by incorporating a nonlinear model and marginal probability distribution matching. It can also markedly reduce the number of unlabeled instances that must be queried. In addition, we propose an effective solution to the original optimization problem of ALDL-kMMD by constructing auxiliary variables. Experiments on real-world datasets validate the effectiveness of our method.
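To make the idea of marginal distribution matching concrete, the following is a minimal sketch of the general technique only, not the ALDL-kMMD algorithm itself. It assumes a Gaussian (RBF) kernel, a biased empirical estimate of the squared maximum mean discrepancy, and a simple greedy search. The function names `rbf_kernel`, `mmd2`, and `greedy_representative_query` and the parameter `gamma` are hypothetical and chosen for illustration; the abstract does not specify the paper's objective or its auxiliary-variable solver, and neither is reproduced here.

```python
import numpy as np


def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def mmd2(x, y, gamma=1.0):
    """Biased empirical estimate of the squared MMD between samples x and y."""
    return (
        rbf_kernel(x, x, gamma).mean()
        + rbf_kernel(y, y, gamma).mean()
        - 2.0 * rbf_kernel(x, y, gamma).mean()
    )


def greedy_representative_query(pool, labeled, batch_size, gamma=1.0):
    """Greedily pick pool indices so that labeled + picked instances
    match the distribution of all available instances (assumed heuristic)."""
    everything = np.vstack([labeled, pool])
    chosen = []
    for _ in range(batch_size):
        best_idx, best_score = None, np.inf
        for i in range(len(pool)):
            if i in chosen:
                continue
            candidate = np.vstack([labeled, pool[chosen + [i]]])
            score = mmd2(candidate, everything, gamma)
            if score < best_score:
                best_idx, best_score = i, score
        chosen.append(best_idx)
    return chosen
```

Under these assumptions, `greedy_representative_query(pool, labeled, batch_size=4)` returns the indices of four pool instances to send for annotation. The greedy loop is quadratic in the pool size per step and is meant only to show what "most representative" means in an MMD sense.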


Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 61922087, 61906201 and 62006238), and the Science and Technology Innovation Program of Hunan Province (2021RC3070).

Author information

Xinyue Dong is studying for her master’s degree at National University of Defense Technology, China. Her main research interests include data analysis and machine learning.

Tingjin Luo received his PhD degree from National University of Defense Technology, China. He is currently an Associate Professor with the College of Science of the same university. He has authored more than 30 papers in journals and conferences, such as IEEE TKDE, IEEE TCYB, and ACM KDD. He has been a Program Committee member of several conferences including IJCAI and AAAI. His current research interests include machine learning, optimization, and data mining.

Ruidong Fan received his master’s degree from National University of Defense Technology, China. He is working toward the PhD degree in the College of Science, National University of Defense Technology, China. His main research interests include statistical data analysis and machine learning.

Wenzhang Zhuge received the BS degree from Shandong University, China and the MS degree from National University of Defense Technology, China. He received his PhD degree from the College of Science, National University of Defense Technology, China. His research interests include machine learning, system science, and data mining.

Chenping Hou received the BS and PhD degrees in applied mathematics from National University of Defense Technology, China in 2004 and 2009, respectively. He is currently a Professor with the College of Science, National University of Defense Technology, China. He has authored more than 50 papers in journals and conferences, such as IEEE TPAMI, IEEE TKDE, IEEE TNNLS/TNN, IEEE TCYB, IEEE TIP, PR, IJCAI, and AAAI. He has been a Program Committee member of several conferences including IJCAI and AAAI. His current research interests include pattern recognition, machine learning, data mining, and computer vision.

Cite this article

Dong, X., Luo, T., Fan, R. et al. Active label distribution learning via kernel maximum mean discrepancy. Front. Comput. Sci. 17, 174327 (2023). https://doi.org/10.1007/s11704-022-1624-5

DOI: https://doi.org/10.1007/s11704-022-1624-5