Abstract

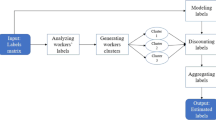

Crowdsourcing has established itself as an efficient labeling solution that distributes tasks to crowd workers. Because workers differ in expertise and can make mistakes, a core learning task is to estimate each worker’s expertise and aggregate their annotations to infer the latent true labels. In this paper, we show that worker expertise modeling methods based on the noise transition matrix, one of the major research directions, commonly overfit the annotation noise, owing either to oversimplified noise assumptions or to inaccurate estimation. To solve this problem, we propose a knowledge distillation framework (KD-Crowd) that combines the complementary strengths of noise-model-free robust learning techniques and transition matrix based worker expertise modeling. The framework consists of two stages. In Stage 1, a noise-model-free robust student model is trained by treating the predictions of a transition matrix based crowdsourcing teacher model as noisy labels, with the aim of correcting the teacher’s mistakes and obtaining better true label predictions. In Stage 2, the roles are switched: a better crowdsourcing model is retrained on the crowds’ annotations, supervised by the refined true label predictions from Stage 1. Additionally, we propose an f-mutual information gain (MIGf) based knowledge distillation loss, which finds the maximum information intersection between the student’s and teacher’s predictions. Our experiments show that MIGf achieves clear improvements over the regular KL divergence knowledge distillation loss, which tends to force the student to memorize all the information in the teacher’s predictions, including its errors. Extensive experiments further show that, as a universal framework, KD-Crowd substantially improves previous crowdsourcing methods on both true label prediction and worker expertise estimation.
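To make two of the baseline ingredients named in the abstract concrete, the following is a minimal NumPy sketch, not the KD-Crowd implementation. It assumes a single worker with a row-stochastic transition matrix and shows (a) how such a matrix maps true label probabilities to the worker's expected annotation distribution, and (b) the regular KL divergence distillation loss that the abstract uses as its point of comparison. All function names and toy values are hypothetical, and the MIGf loss is not reproduced because the abstract does not give its formula.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def worker_label_probs(true_label_probs, transition):
    """Forward noise model for one worker (assumed form):
    P(worker says j | x) = sum_k P(y = k | x) * transition[k, j]."""
    return true_label_probs @ transition

def kl_distillation_loss(teacher_probs, student_probs, eps=1e-12):
    """Batch mean of KL(teacher || student), the regular distillation loss."""
    t = np.clip(teacher_probs, eps, 1.0)
    s = np.clip(student_probs, eps, 1.0)
    return float(np.mean(np.sum(t * (np.log(t) - np.log(s)), axis=1)))

# Toy predictions for two instances over three classes (made-up numbers).
teacher_probs = softmax(np.array([[2.0, 0.0, -1.0], [0.5, 1.5, 0.0]]))
student_probs = softmax(np.array([[1.0, 0.5, -0.5], [0.0, 2.0, 0.0]]))

# Hypothetical worker: row k is the distribution of the worker's label
# when the true class is k, so every row sums to 1.
transition = np.array([[0.8, 0.1, 0.1],
                       [0.2, 0.7, 0.1],
                       [0.1, 0.2, 0.7]])

noisy = worker_label_probs(teacher_probs, transition)
loss = kl_distillation_loss(teacher_probs, student_probs)
print(noisy)
print(loss)
```

The KL loss reaches zero only when the student reproduces the teacher's distribution exactly, which illustrates why, as the abstract argues, it pushes the student to copy the teacher's errors along with its correct predictions.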

Acknowledgements

This work was supported by the National Key R&D Program of China (2022ZD0114801), the National Natural Science Foundation of China (Grant No. 61906089), and the Jiangsu Province Basic Research Program (BK20190408).

Ethics declarations

Competing interests: The authors declare that they have no competing interests or financial conflicts to disclose.

Additional information

Shaoyuan Li is an associate professor in the College of Computer Science and Technology at Nanjing University of Aeronautics and Astronautics, China. She received BSc and PhD degrees in computer science from Nanjing University, China in 2010 and 2018, respectively. Her research interests include machine learning and data mining. Her awards include the championship of the PAKDD’12 Data Mining Challenge, the Best Paper Award of PRICAI’18, 2nd place in the Learning and Mining with Noisy Labels Challenge at IJCAI’22, and 4th place in the Continual Learning Challenge at CVPR’23.

Yuxiang Zheng received the BSc degree in computer science from Zhejiang University of Technology, China in 2022. Currently, he is working toward an MS degree in the College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics, China. His research interests include continual learning and crowdsourcing.

Ye Shi received the BSc degree in computer science from the China University of Mining and Technology, China in 2019, and the MS degree from Nanjing University of Aeronautics and Astronautics, China in 2022. His research focuses on crowdsourcing and multi-label classification.

Shengjun Huang received the BSc and PhD degrees in computer science from Nanjing University, China in 2008 and 2014, respectively. He is now a professor in the College of Computer Science and Technology at Nanjing University of Aeronautics and Astronautics, China. His main research interests include machine learning and data mining. He was selected for the Young Elite Scientists Sponsorship Program by CAST in 2016, and won the China Computer Federation Outstanding Doctoral Dissertation Award in 2015, the KDD Best Poster Award in 2012, and the Microsoft Fellowship Award in 2011. He is a Junior Associate Editor of Frontiers of Computer Science.

Songcan Chen received a BS degree in mathematics from Hangzhou University (now merged into Zhejiang University), China in 1983 and an MS degree in computer applications from Shanghai Jiaotong University, China in 1985, and joined Nanjing University of Aeronautics and Astronautics (NUAA), China in January 1986. He received the PhD degree in communication and information systems from NUAA in 1997. Since 1998, he has been a full-time professor with the College of Computer Science and Technology, NUAA. His research interests include pattern recognition, machine learning, and neural computing. He is also an IAPR fellow.

About this article

Cite this article

Li, S., Zheng, Y., Shi, Y. et al. KD-Crowd: a knowledge distillation framework for learning from crowds. Front. Comput. Sci. 19, 191302 (2025). https://doi.org/10.1007/s11704-023-3578-7

DOI: https://doi.org/10.1007/s11704-023-3578-7