Abstract

Reinforcement learning (RL) is gaining importance in automated penetration testing because it reduces human effort and increases reliability. However, as modern network infrastructure expands rapidly, existing RL-based automated penetration testing methods remain limited in testing scale and monotonous in strategy, which makes them less effective in practice. In this paper, we present CLAP (Coverage-Based Reinforcement Learning to Automate Penetration Testing), an RL penetration testing agent that provides comprehensive network security assessments with diverse adversary testing behaviours on a massive scale. CLAP employs a novel neural network, namely the coverage mechanism, to address the enormous and growing action spaces in large networks. It also uses a Chebyshev decomposition critic to identify various adversary strategies and strike a balance between them. Experimental results across various scenarios demonstrate that CLAP outperforms state-of-the-art methods, further reducing the number of attack operations by nearly 35%. CLAP also provides improved training efficiency and stability, and can effectively perform penetration testing on large-scale networks with up to 500 hosts. In addition, the proposed agent is able to discover Pareto-dominant strategies that are both diverse and effective in achieving multiple objectives.
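
To make the idea of balancing several objectives concrete, the following is a minimal sketch of weighted Chebyshev scalarization over a set of candidate strategies, together with a simple Pareto filter. It is not CLAP's critic: the two objectives (stealth and speed), the candidate return values, and the function names `chebyshev_score`, `pareto_front`, and `best_for` are all hypothetical and chosen only for illustration.

```python
import numpy as np


def chebyshev_score(values, weights, reference):
    """Weighted Chebyshev distance to a reference point (lower is better)."""
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return float(np.max(weights * np.abs(reference - values)))


def pareto_front(points):
    """Indices of non-dominated points, assuming every objective is maximized."""
    points = np.asarray(points, dtype=float)
    keep = []
    for i, p in enumerate(points):
        dominated = any(
            np.all(q >= p) and np.any(q > p)
            for j, q in enumerate(points)
            if j != i
        )
        if not dominated:
            keep.append(i)
    return keep


# Hypothetical per-strategy returns for two objectives: (stealth, speed).
candidates = np.array([[0.9, 0.2], [0.6, 0.6], [0.2, 0.9], [0.5, 0.5]])

# Reference (ideal) point: the best value seen for each objective.
reference = candidates.max(axis=0)


def best_for(weights):
    """Index of the candidate with the smallest Chebyshev score."""
    scores = [chebyshev_score(c, weights, reference) for c in candidates]
    return int(np.argmin(scores))


front = pareto_front(candidates)
print("Pareto-optimal candidates:", front)
print("Balanced weights pick:", best_for([0.5, 0.5]))
print("Stealth-heavy weights pick:", best_for([0.9, 0.1]))
print("Speed-heavy weights pick:", best_for([0.1, 0.9]))
```

Under these assumed numbers, different weight vectors select different non-dominated candidates, which is the sense in which a decomposition over weights can surface several distinct strategies rather than a single one.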

References

Applebaum A, Miller D, Strom B, Korban C, Wolf R. Intelligent, automated red team emulation. In: Proceedings of the 32nd Annual Conference on Computer Security Applications. 2016

Hoffmann J. Simulated penetration testing: from “dijkstra” to “turing test++”. In: Proceedings of the 25th International Conference on Automated Planning and Scheduling. 2015

Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, Hubert T, Baker L, Lai M, Bolton A, Chen Y, Lillicrap T, Hui F, Sifre L, van den Driessche G, Graepel T, Hassabis D. Mastering the game of Go without human knowledge. Nature, 2017, 550(7676): 354–359

Vinyals O, Babuschkin I, Czarnecki W M, Mathieu M, Dudzik A, Chung J, Choi D H, Powell R, Ewalds T, Georgiev P, Oh J, Horgan D, Kroiss M, Danihelka I, Huang A, Sifre L, Cai T, Agapiou J P, Jaderberg M, Vezhnevets A S, Leblond R, Pohlen T, Dalibard V, Budden D, Sulsky Y, Molloy J, Paine T L, Gulcehre C, Wang Z, Pfaff T, Wu Y, Ring R, Yogatama D, Wünsch D, Mckinney K, Smith O, Schaul T, Lillicrap T, Kavukcuoglu K, Hassabis D, Apps C, Silver D. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature, 2019, 575(7782): 350–354

Laskin M, Lee K, Stooke A, Pinto L, Abbeel P, Srinivas A. Reinforcement learning with augmented data. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. 2020

Hu Z, Beuran R, Tan Y. Automated penetration testing using deep reinforcement learning. In: Proceedings of 2020 IEEE European Symposium on Security and Privacy Workshops. 2020, 2–10

Zhou S, Liu J, Hou D, Zhong X, Zhang Y. Autonomous penetration testing based on improved deep Q-network. Applied Sciences, 2021, 11(19): 8823

Tran K, Akella A, Standen M, Kim J, Bowman D, Richer T, Lin C T. Deep hierarchical reinforcement agents for automated penetration testing. 2021, arXiv preprint arXiv: 2109.06449

Schwartz J, Kurniawati H. Autonomous penetration testing using reinforcement learning. 2019, arXiv preprint arXiv: 1905.05965

Schwartz J, Kurniawatti H. NASim: network attack simulator. Networkattacksimulator.readthedocs.io/, 2019

Baillie C, Standen M, Schwartz J, Docking M, Bowman D, Kim J. CybORG: an autonomous cyber operations research gym. 2020, arXiv preprint arXiv: 2002.10667

Shmaryahu D, Shani G, Hoffmann J, Steinmetz M. Simulated penetration testing as contingent planning. In: Proceedings of the 28th International Conference on Automated Planning and Scheduling. 2018, 241–249

Lallie H S, Debattista K, Bal J. A review of attack graph and attack tree visual syntax in cyber security. Computer Science Review, 2020, 35: 100219

Erdődi L, Zennaro F M. The agent web model: modeling web hacking for reinforcement learning. International Journal of Information Security, 2022, 21(2): 293–309

Li Y, Yan J, Naili M. Deep reinforcement learning for penetration testing of cyber-physical attacks in the smart grid. In: Proceedings of 2022 International Joint Conference on Neural Networks. 2022, 1–9

Gangupantulu R, Cody T, Rahma A, Redino C, Clark R, Park P. Crown jewels analysis using reinforcement learning with attack graphs. In: Proceedings of 2021 IEEE Symposium Series on Computational Intelligence. 2021, 1–6

Ghanem M C, Chen T M. Reinforcement learning for efficient network penetration testing. Information, 2019, 11(1): 6

Zennaro F M, Erdődi L. Modelling penetration testing with reinforcement learning using capture-the-flag challenges: trade-offs between model-free learning and a priori knowledge. IET Information Security, 2023, 17(3): 441–457

Pathak D, Agrawal P, Efros A A, Darrell T. Curiosity-driven exploration by self-supervised prediction. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2017, 2778–2787

Wang P, Liu J, Zhong X, Yang G, Zhou S, Zhang Y. DUSC-DQN: an improved deep Q-network for intelligent penetration testing path design. In: Proceedings of the 7th International Conference on Computer and Communication Systems. 2022, 476–480

Vezhnevets A S, Osindero S, Schaul T, Heess N, Jaderberg M, Silver D, Kavukcuoglu K. Feudal networks for hierarchical reinforcement learning. In: Proceedings of the 34th International Conference on Machine Learning. 2017, 3540–3549

Czarnecki W, Jayakumar S, Jaderberg M, Hasenclever L, Teh Y W, Heess N, Osindero S, Pascanu R. Mix & match agent curricula for reinforcement learning. In: Proceedings of the 35th International Conference on Machine Learning. 2018, 1087–1095

Farquhar G, Gustafson L, Lin Z, Whiteson S, Usunier N, Synnaeve G. Growing action spaces. In: Proceedings of the 37th International Conference on Machine Learning. 2020, 285

Murata T, Ishibuchi H, Gen M. Specification of genetic search directions in cellular multi-objective genetic algorithms. In: Proceedings of the 1st International Conference on Evolutionary Multi-Criterion Optimization. 2001, 82–95

Deb K, Jain H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, Part I: solving problems with box constraints. IEEE Transactions on Evolutionary Computation, 2014, 18(4): 577–601

Hsu C H, Chang S H, Liang J H, Chou H P, Liu C H, Chang S C, Pan J Y, Chen Y T, Wei W, Juan D C. MONAS: multi-objective neural architecture search using reinforcement learning. 2018, arXiv preprint arXiv: 1806.10332

Mossalam H, Assael Y M, Roijers D M, Whiteson S. Multi-objective deep reinforcement learning. 2016, arXiv preprint arXiv: 1610.02707

Jaderberg M, Czarnecki W M, Dunning I, Marris L, Lever G, Castaneda A G, Beattie C, Rabinowitz N C, Morcos A S, Ruderman A, Sonnerat N, Green T, Deason L, Leibo J Z, Silver D, Hassabis D, Kavukcuoglu K, Graepel T. Human-level performance in 3D multiplayer games with population-based reinforcement learning. Science, 2019, 364(6443): 859–865

Shen R, Zheng Y, Hao J, Meng Z, Chen Y, Fan C, Liu Y. Generating behavior-diverse game AIs with evolutionary multi-objective deep reinforcement learning. In: Proceedings of the 29th International Joint Conference on Artificial Intelligence. 2021, 3371–3377

Strom B E, Applebaum A, Miller D P, Nickels K C, Pennington A G, Thomas C B. Mitre att&ck: Design and philosophy. Mitre Product MP, 2018

Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O. Proximal policy optimization algorithms. 2017, arXiv preprint arXiv: 1707.06347

Schulman J, Moritz P, Levine S, Jordan M I, Abbeel P. High-dimensional continuous control using generalized advantage estimation. In: Proceedings of the 4th International Conference on Learning Representations. 2016

Burda Y, Edwards H, Storkey A J, Klimov O. Exploration by random network distillation. In: Proceedings of the 7th International Conference on Learning Representations. 2019

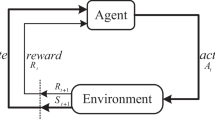

Sutton R S, Barto A G. Reinforcement Learning: An Introduction. 2nd ed. Cambridge: MIT Press, 2018

Roijers D M, Vamplew P, Whiteson S, Dazeley R. A survey of multi-objective sequential decision-making. Journal of Artificial Intelligence Research, 2013, 48: 67–113

Oh I, Rho S, Moon S, Son S, Lee H, Chung J. Creating pro-level AI for a real-time fighting game using deep reinforcement learning. IEEE Transactions on Games, 2022, 14(2): 212–220

Agarwal R, Schwarzer M, Castro P S, Courville A C, Bellemare M. Deep reinforcement learning at the edge of the statistical precipice. In: Proceedings of the 35th International Conference on Neural Information Processing Systems. 2021, 29304–29320

Standen M, Bowman D, Hoang S, Richer T, Lucas M, Van Tassel R. Cyber autonomy gym for experimentation challenge 1, 2021

Katz F H. Breadth vs. depth: best practices teaching cybersecurity in a small public university sharing models. The Cyber Defense Review, 2018, 3(2): 65–72

Acknowledgements

This research was partially supported by the Key Research Project of Zhejiang Lab (No. 2021PB0AV02). We would like to thank Wenli Liu, Mengxuan Chen, Yuejin Ye and Shuitao Gan for their useful comments and support.

Ethics declarations

Competing interests: The authors declare that they have no competing interests or financial conflicts to disclose.

Additional information

Yizhou Yang received his PhD degree from The Australian National University, Australia. He is currently an assistant researcher at Zhongguancun Laboratory, China. His research interests include deep learning applications, network security, and wireless communication systems.

Longde Chen is currently a researcher at the National Research Centre of Parallel Computer Engineering and Technology, China. His research interests include parallel computing, large model software applications, and cybersecurity.

Sha Liu is a doctoral student at the University of Science and Technology of China, China. He is currently an associate researcher at the National Research Centre of Parallel Computer Engineering and Technology, China. His research interests include parallel computing and large model software applications.

Lanning Wang is currently a professor at the Faculty of Geographical Science, Beijing Normal University, China. His research focuses on climate model and earth system model development, specifically the dynamical core of the atmospheric model, model coupling technology, and high-performance computing technology for earth system models. He led the development of the Beijing Normal University–Earth System Model (BNU-ESM) and actively participated in the Coupled Model Intercomparison Project Phase 5 (CMIP5). He received the ACM Gordon Bell Prize in 2016.

Haohuan Fu (Senior Member, IEEE) received the PhD degree in computing from Imperial College, London. He is currently a professor with the Ministry of Education Key Laboratory for Earth System Modeling and the Department of Earth System Science, Tsinghua University, China. He is also the deputy director of the National Supercomputing Center, China. His research interests include high-performance computing in earth and environmental sciences, computer architectures, performance optimizations, and programming tools in parallel computing. He received the ACM Gordon Bell Prize in 2016, 2017, and 2021, and the Most Significant Paper Award from FPL in 2015.

Xin Liu received the PhD degree from PLA Information Engineering University, China in 2006. She is currently a research fellow at the National Research Centre of Parallel Computer Engineering and Technology, China. Her research interests include parallel algorithms and parallel application software.

Zuoning Chen is currently a research fellow at the National Research Centre of Parallel Computer Engineering and Technology, China. Her research interests include operating systems and system security.

Cite this article

Yang, Y., Chen, L., Liu, S. et al. Behaviour-diverse automatic penetration testing: a coverage-based deep reinforcement learning approach. Front. Comput. Sci. 19, 193309 (2025). https://doi.org/10.1007/s11704-024-3380-1

DOI: https://doi.org/10.1007/s11704-024-3380-1