Abstract

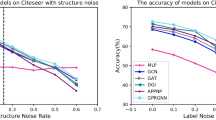

Graph neural networks (GNNs) have gained traction and have been applied to a wide range of graph-based data analysis tasks owing to their high performance. However, their robustness remains a major concern, particularly when the graph data have been polluted with noise, whether deliberately or accidentally. Learning robust GNNs under such noisy conditions is therefore challenging. To address this issue, we propose a novel framework called Soft-GNN, which mitigates the influence of label noise by adapting the data used in training. Our approach employs a dynamic data utilization strategy that estimates adaptive sample weights based on prediction deviation, local deviation, and global deviation. By making better use of significant training samples and reducing the impact of label noise through dynamic data selection, this strategy trains GNNs to be more robust. We evaluate the performance, robustness, generality, and complexity of our model on five real-world datasets, and the experimental results demonstrate the superiority of our approach over existing methods.
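The abstract names three signals for weighting training nodes but does not give their formulas. As a rough illustration only, here is a minimal Python sketch of deviation-based sample reweighting for node classification. Every definition in it is our own assumption, not Soft-GNN's formulation: prediction deviation is taken as one minus the predicted probability of the observed label, local deviation as the share of neighbors whose predicted class disagrees with that label, global deviation as a logistic-squashed z-score of the node's loss against the whole training set, and the three are combined as exp(-(alpha·pd + beta·ld + gamma·gd)). The names `deviation_weights` and `weighted_cross_entropy` and the coefficients `alpha`, `beta`, `gamma` are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# All definitions below are illustrative assumptions, not Soft-GNN's published equations.


def _argmax(p: Sequence[float]) -> int:
    return max(range(len(p)), key=lambda c: p[c])


def deviation_weights(
    probs: Sequence[Sequence[float]],
    labels: Dict[int, int],
    neighbors: Dict[int, List[int]],
    alpha: float = 1.0,
    beta: float = 1.0,
    gamma: float = 1.0,
    eps: float = 1e-12,
) -> Dict[int, float]:
    """Return a weight in (0, 1] for every labeled training node.

    probs[v]  : current class distribution predicted for node v (every node)
    labels    : observed, possibly noisy, label of each training node
    neighbors : adjacency list of the graph
    alpha, beta, gamma : hypothetical trade-off coefficients
    """
    train = sorted(labels)

    # Prediction deviation: mass the model does NOT assign to the observed label.
    pred_dev = {v: 1.0 - probs[v][labels[v]] for v in train}

    # Local deviation: share of neighbors whose predicted class disagrees with v's label.
    local_dev = {}
    for v in train:
        nbrs = neighbors.get(v, [])
        disagree = sum(_argmax(probs[u]) != labels[v] for u in nbrs)
        local_dev[v] = disagree / len(nbrs) if nbrs else 0.0  # isolated node: no evidence

    # Global deviation: z-score of v's loss against all training nodes, squashed to (0, 1).
    losses = {v: -math.log(max(probs[v][labels[v]], eps)) for v in train}
    mean = sum(losses.values()) / len(train)
    std = math.sqrt(sum((x - mean) ** 2 for x in losses.values()) / len(train))
    global_dev = {
        v: 1.0 / (1.0 + math.exp(-(losses[v] - mean) / (std + eps))) for v in train
    }

    # Larger combined deviation -> exponentially smaller weight.
    return {
        v: math.exp(-(alpha * pred_dev[v] + beta * local_dev[v] + gamma * global_dev[v]))
        for v in train
    }


def weighted_cross_entropy(probs, labels, weights, eps: float = 1e-12) -> float:
    """Weighted mean of per-node cross-entropy over the labeled nodes."""
    total = sum(weights[v] for v in labels)
    loss = sum(weights[v] * -math.log(max(probs[v][labels[v]], eps)) for v in labels)
    return loss / total


# Toy triangle graph: the model predicts class 0 everywhere,
# but node 2 carries the (suspicious) observed label 1.
probs = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.15]]
labels = {0: 0, 1: 0, 2: 1}
neighbors = {0: [1, 2], 1: [0, 2], 2: [0, 1]}

w = deviation_weights(probs, labels, neighbors)
loss = weighted_cross_entropy(probs, labels, w)
print({v: round(x, 3) for v, x in w.items()})  # node 2 gets by far the smallest weight
print(round(loss, 4))
```

In this sketch, recomputing the weights from the current model's predictions every epoch (or every few epochs) is one way to make data utilization dynamic: a node whose observed label keeps conflicting with both the model and its neighborhood is gradually down-weighted rather than hard-removed from training.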

Acknowledgment

The work was supported by the National Natural Science Foundation of China (Grant No. 62127808).

Ethics declarations

Competing interests: The authors declare that they have no competing interests or financial conflicts to disclose.

Additional information

Yao Wu is an assistant professor at the National University of Defense Technology, China. She received her PhD degree from Huazhong University of Science and Technology, China, in 2023, and her BS degree from the same institution in 2016. Her primary research focus is graph data mining and analysis.

Hong Huang is an associate professor at Huazhong University of Science and Technology, China. She received her PhD degree from the University of Göttingen, Germany, in 2016 and her ME degree from Tsinghua University, China, in 2012. Her research interests lie in social network analysis, data mining, and knowledge graphs.

Yu Song received his BS degree in electronic information engineering and his ME degree in computer science from Huazhong University of Science and Technology, China, in 2018 and 2021, respectively. He is currently pursuing his PhD degree at the Université de Montréal, Canada. His research interests include graph data mining and NLP.

Hai Jin is a Chair Professor of computer science and engineering at Huazhong University of Science and Technology (HUST) in China. Jin received his PhD degree in computer engineering from HUST in 1994. In 1996, he was awarded a German Academic Exchange Service fellowship to visit the Technical University of Chemnitz in Germany. Jin worked at The University of Hong Kong, China, between 1998 and 2000, and was a visiting scholar at the University of Southern California, USA, between 1999 and 2000. He received the Excellent Youth Award from the National Science Foundation of China in 2001. Jin is a Fellow of IEEE, a Fellow of CCF, and a life member of the ACM. His research interests include computer architecture, parallel and distributed computing, big data processing, data storage, and system security.

Cite this article

Wu, Y., Huang, H., Song, Y. et al. Soft-GNN: towards robust graph neural networks via self-adaptive data utilization. Front. Comput. Sci. 19, 194311 (2025). https://doi.org/10.1007/s11704-024-3575-5
